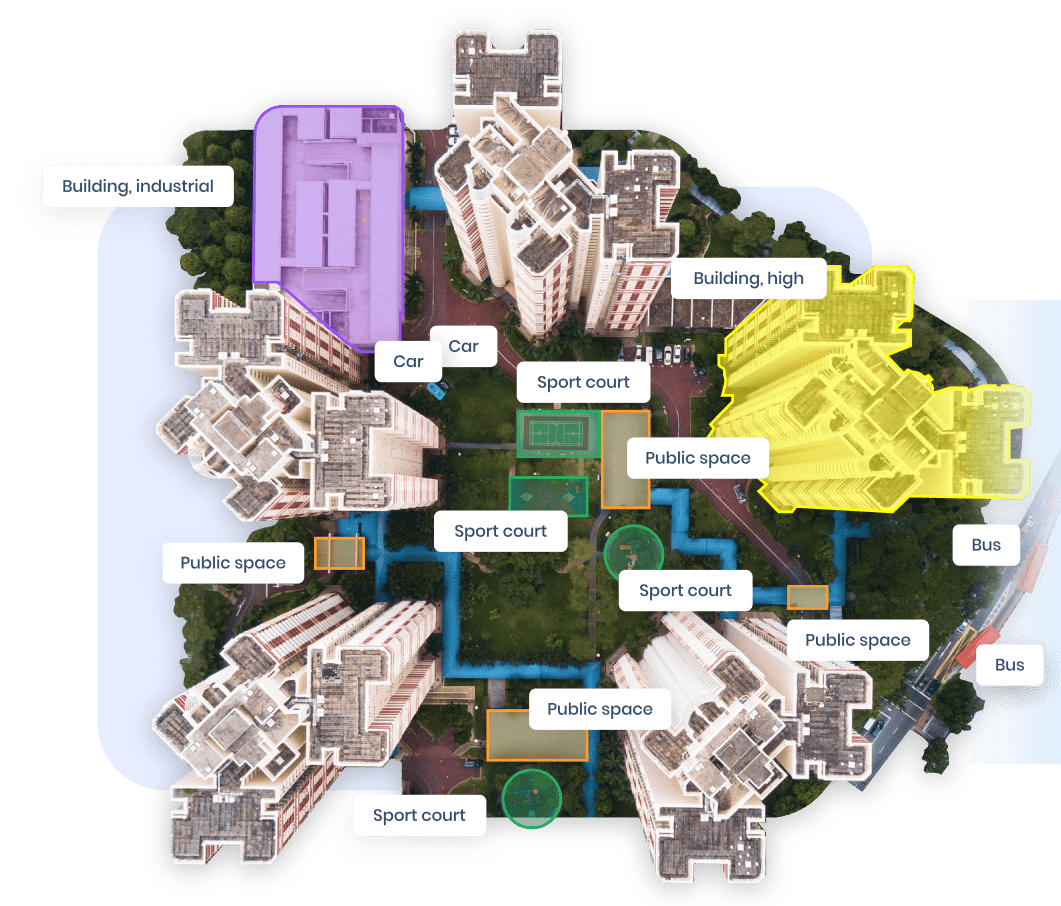

Smart city computer vision refers to the use of AI models that can interpret video and imagery captured in urban environments. These systems recognize vehicles, pedestrians, bicycles, and traffic signs, estimate crowd density, and flag unusual behaviors and safety incidents. Cities deploy computer vision to reduce congestion, enhance public safety, and manage transportation networks more efficiently. As more municipalities explore data-driven urban management, computer vision is becoming one of the foundations of smart city infrastructure.

The technology has expanded rapidly due to advances in deep learning, increased availability of public camera networks, and the rise of edge computing hardware. Research from the Urban Computing Foundation highlights how computer vision is transforming urban operations by generating consistent, real-time visibility into traffic and public spaces (https://www.urban-computing.org). This shift enables governments and private operators to make informed decisions supported by objective spatial data.

Computer vision adds a dynamic, event-driven layer to traditional urban planning tools. Instead of relying solely on static datasets, smart cities can monitor activities as they occur, enabling faster and more adaptive responses.

Why Computer Vision Matters for Smart Cities

Enhancing traffic efficiency

Urban traffic is complex and constantly changing. Computer vision helps operators detect congestion patterns, optimize traffic light timing, and identify bottlenecks. Measured vehicle flow also feeds long-term transportation planning and can help reduce commute times.

Improving road safety

Vision systems detect dangerous driving behavior, pedestrian near misses, and traffic violations. These insights help authorities redesign hazardous intersections, improve signage, and prioritize enforcement in high-risk areas.

Supporting public safety

Cities use computer vision to detect fights, vandalism, abandoned objects, and other anomalies. By flagging unusual activity in public spaces early, these systems act as an early warning layer that can speed up emergency response and support crime prevention.

Understanding pedestrian movement

Pedestrian density and flow patterns are essential for designing walkable cities. Computer vision enables accurate crowd analytics while also informing infrastructure upgrades, tourism planning, and accessibility improvements.

Reducing operational costs

Automated monitoring helps cities scale their operational reach without proportionally increasing personnel. Vision-based automation supports 24-hour oversight across large geographic areas.

Computer vision gives cities a real-time understanding of how people and vehicles interact with urban environments.

How Smart City Computer Vision Works

Step 1: Video capture

Smart cities rely on fixed cameras, traffic cameras, public transit cameras, and private video systems integrated through partnerships. These cameras capture continuous feeds covering intersections, roads, public squares, and transportation hubs.

Step 2: Preprocessing

Video frames undergo stabilization, noise reduction, and color normalization. Preprocessing prepares data for the detection models and helps mitigate issues such as nighttime lighting or weather-related distortion.
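One simple form of the normalization mentioned above is a linear contrast stretch, sketched here in plain Python. The frame representation (nested lists of 0-255 grayscale values) and the function name are assumptions for illustration; a deployed system would more likely use an image library and operate on color frames.

```python
def stretch_contrast(frame):
    """Linearly rescale a grayscale frame so its values span the full 0-255 range.

    frame: list of rows, each a list of integer intensities (assumed format).
    """
    pixels = [p for row in frame for p in row]
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        # A perfectly flat frame has no contrast to stretch; return a copy.
        return [row[:] for row in frame]
    # Integer arithmetic keeps the result deterministic.
    return [[(p - lo) * 255 // (hi - lo) for p in row] for row in frame]
```

A dim night-time frame whose values only span 50 to 150 would be mapped onto 0 to 255, which can make low-contrast objects easier for a detector to separate from the background.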

Step 3: Object detection

Models identify vehicles, pedestrians, cyclists, and other objects. Modern detectors such as YOLO variants, EfficientDet, and transformer-based architectures offer high accuracy and speed. Detection is the foundation for most downstream analytics.
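Many detectors emit several overlapping boxes for the same object, and a common post-processing step is greedy non-maximum suppression based on intersection over union (IoU). The sketch below is a minimal, framework-free version; the box format `(x1, y1, x2, y2)` and the 0.5 threshold are assumptions, and real pipelines usually rely on the optimized routine shipped with their detection library.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Keep the highest-scoring box in each group of heavily overlapping boxes.

    detections: list of (box, score) pairs.
    """
    kept = []
    # Visit boxes from most to least confident.
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap an already kept box too much.
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept
```

The same `iou` measure is also widely used to score detector accuracy against annotated ground truth.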

Step 4: Object tracking

Tracking models follow objects across frames, maintaining consistent identity over time. This enables measurement of vehicle trajectories, pedestrian paths, and traffic flow patterns. Tracking provides temporal context necessary for deeper analysis.
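As a rough illustration of how identities can be carried across frames, the sketch below matches each detection to the nearest existing track by centroid distance. The class name, the pixel threshold, and the policy of dropping unmatched tracks immediately are all simplifying assumptions; production trackers typically add motion models, appearance features, and a global assignment step.

```python
import math


class CentroidTracker:
    """Assign persistent IDs by matching each detection to the nearest known track."""

    def __init__(self, max_distance=50.0):
        self.max_distance = max_distance  # pixels; hypothetical gating threshold
        self.tracks = {}                  # track_id -> last known (x, y) centroid
        self.next_id = 0

    def update(self, centroids):
        """Match this frame's centroids to tracks and return {track_id: centroid}."""
        assigned = {}
        unmatched = set(self.tracks)
        for c in centroids:
            best_id, best_dist = None, self.max_distance
            for tid in unmatched:
                d = math.dist(c, self.tracks[tid])
                if d <= best_dist:
                    best_id, best_dist = tid, d
            if best_id is None:
                # Nothing close enough: start a new track.
                best_id = self.next_id
                self.next_id += 1
            else:
                unmatched.discard(best_id)
            assigned[best_id] = c
        # Tracks with no match this frame are dropped in this simplified version.
        self.tracks = assigned
        return assigned
```

Calling `update` once per frame yields a stream of `{track_id: centroid}` mappings from which trajectories can be assembled.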

Step 5: Event recognition

Once objects are tracked, event recognition models analyze interactions. They detect collisions, near misses, illegal crossings, lane violations, crowd surges, and abnormal behaviors. Event detection is essential for safety systems.
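Some of these events reduce to simple geometry on tracked positions. As one hedged example, an illegal crossing could be approximated as a pedestrian track entering a roadway zone drawn as a polygon. The sketch uses the standard ray-casting point-in-polygon test; the zone definition and function names are hypothetical, and learned event models would be needed for subtler behaviors.

```python
def point_in_polygon(point, polygon):
    """Ray-casting test: True if point lies inside polygon (list of (x, y) vertices)."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def zone_entries(track, zone):
    """Return the frame indices at which a track moves from outside to inside the zone."""
    entries = []
    was_inside = False
    for i, position in enumerate(track):
        now_inside = point_in_polygon(position, zone)
        if now_inside and not was_inside:
            entries.append(i)
        was_inside = now_inside
    return entries
```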

Step 6: Data aggregation and visualization

Insights feed into dashboards, mobility platforms, and city operations centers. Visualization tools allow operators to monitor city conditions, generate alerts, and evaluate long-term patterns.

This pipeline blends detection, tracking, and event modeling to create a comprehensive picture of urban dynamics.

Vehicle Tracking and Traffic AI

Vehicle tracking is one of the most common smart city use cases. Cities use tracking to understand mobility patterns, improve traffic flow, and reduce congestion.

Flow analysis

Tracking models measure vehicle volume, speed, and trajectory. This helps operators detect bottlenecks, adjust timing at intersections, and manage peak-hour traffic more effectively.
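Volume and speed can be derived from trajectories with very little code. The sketch below counts tracks that cross a virtual counting line and estimates average speed along a track. The trajectory format and the constant `metres_per_pixel` scale are assumptions; a real deployment would calibrate the camera to map pixels to ground distances.

```python
import math


def count_line_crossings(trajectories, line_y):
    """Count tracks whose path crosses the horizontal line y = line_y.

    trajectories: {track_id: [(x, y), ...]} in image coordinates, ordered by time.
    """
    count = 0
    for points in trajectories.values():
        for (_, y0), (_, y1) in zip(points, points[1:]):
            # The track crossed if consecutive points lie on different sides of the line.
            if (y0 < line_y) != (y1 < line_y):
                count += 1
                break  # count each track at most once
    return count


def mean_speed(points, fps, metres_per_pixel):
    """Average speed in m/s along a track, assuming a constant ground scale."""
    if len(points) < 2:
        return 0.0
    dist_px = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    seconds = (len(points) - 1) / fps
    return dist_px * metres_per_pixel / seconds
```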

Incident detection

Sudden stops, abrupt lane changes, and collisions show up as anomalies in vehicle trajectories. Fast identification of incidents helps reduce secondary accidents and accelerates emergency response.
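One of the simplest trajectory anomalies to check is a sudden stop, which appears as a large drop in speed between consecutive frames. The following sketch flags such frames from a per-frame speed series; the 6 m/s² threshold is purely illustrative and would need tuning against local data.

```python
def find_sudden_stops(speeds, fps, max_decel=6.0):
    """Return frame indices where deceleration exceeds max_decel (m/s^2).

    speeds: per-frame speed estimates in m/s for one tracked vehicle.
    max_decel: illustrative threshold, not a calibrated value.
    """
    flagged = []
    for i in range(1, len(speeds)):
        # Speed lost per frame, scaled to a per-second rate.
        decel = (speeds[i - 1] - speeds[i]) * fps
        if decel > max_decel:
            flagged.append(i)
    return flagged
```

In practice the speed series is usually smoothed first, since frame-to-frame tracking jitter can otherwise look like hard braking.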

Signal optimization

Traffic light algorithms use tracking data to adjust timing in real time. Adaptive signal control can significantly reduce delays and emissions.
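To make the idea concrete, the sketch below splits a fixed signal cycle among approaches in proportion to the queues observed by the vision system. This is a deliberately naive heuristic with hypothetical parameter names, not a description of any deployed adaptive control product, and it ignores yellow and all-red intervals.

```python
def allocate_green_times(queue_lengths, cycle_seconds=90, min_green=10):
    """Split a signal cycle among approaches in proportion to observed queues.

    queue_lengths: {approach_name: vehicles currently queued}.
    Every approach receives at least min_green seconds.
    """
    n = len(queue_lengths)
    spare = cycle_seconds - n * min_green
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(queue_lengths.values())
    greens = {}
    for approach, queue in queue_lengths.items():
        # With no queued vehicles anywhere, share the spare time equally.
        share = queue / total if total else 1 / n
        greens[approach] = min_green + spare * share
    return greens
```

With queues of 30 and 10 vehicles on two approaches, a 60-second cycle, and a 10-second minimum, this yields 40 and 20 seconds of green respectively.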

Urban planning

Traffic flow data helps urban planners redesign streets, allocate bus lanes, or evaluate the impact of new development.

Work from the Transportation Research Board shows that computer-vision-based traffic models significantly improve accuracy relative to manual counts or sensor-based methods (https://www.trb.org).

Vehicle tracking datasets are crucial for training robust traffic AI systems. These datasets include labeled vehicles, trajectories, and intersection layouts.

Pedestrian Detection and Safety Systems

Pedestrian detection is essential for building safer, more walkable cities. Smart city systems monitor crosswalks, sidewalks, and public plazas to identify dangerous interactions and improve street design.

Crosswalk monitoring

Vision systems detect pedestrians entering crosswalks and compare trajectories with approaching vehicles. Near misses can be quantified to identify high-risk intersections.
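One way to quantify a near miss is to extrapolate both agents at constant velocity and compute when and how closely they would pass. The sketch below assumes positions and velocities are already expressed in metres on the ground plane, which requires camera calibration; the 1.5 m gap and 3 s horizon are hypothetical thresholds.

```python
import math


def closest_approach(p_ped, v_ped, p_veh, v_veh):
    """Time (s) and distance (m) of closest approach for two constant-velocity agents."""
    rx, ry = p_veh[0] - p_ped[0], p_veh[1] - p_ped[1]  # relative position
    vx, vy = v_veh[0] - v_ped[0], v_veh[1] - v_ped[1]  # relative velocity
    speed_sq = vx * vx + vy * vy
    # Minimise |r + v*t| over t >= 0; with zero relative velocity the gap never changes.
    t = 0.0 if speed_sq == 0 else max(0.0, -(rx * vx + ry * vy) / speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def is_near_miss(p_ped, v_ped, p_veh, v_veh, max_gap=1.5, horizon=3.0):
    """True if the two agents are predicted to pass within max_gap metres soon."""
    t, gap = closest_approach(p_ped, v_ped, p_veh, v_veh)
    return t <= horizon and gap <= max_gap
```

Counting such events per crossing over weeks of video gives a risk indicator that does not depend on waiting for actual collisions.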

Sidewalk congestion analysis

Pedestrian density maps help operators identify areas with heavy foot traffic. These insights support infrastructure upgrades, accessibility improvements, and event planning.

Nighttime safety

Pedestrian detection helps identify poorly lit areas where visibility is compromised. Cities can use this data to improve lighting and reduce hazards.

Behavior analysis

Models detect unsafe behavior such as pedestrians crossing outside designated areas. This information informs targeted education and enforcement programs.

Pedestrian detection datasets require diverse annotation across lighting conditions, clothing types, and weather scenarios to ensure robust model performance.

Anomaly Detection and Public Safety AI

Anomaly detection is a strategic component of smart city safety systems. These models identify events that deviate from expected behavior, enabling early intervention.

Violence detection

Physical altercations, vandalism, or aggressive behavior can be recognized automatically. Vision models detect unusual movement patterns, abrupt actions, or clusters of activity.

Abandoned object detection

Objects left unattended in public spaces may pose safety risks. Detection models help identify and flag these situations quickly.
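A common rule-based formulation treats an object as abandoned when it has stayed still with nobody nearby for some minimum time. The sketch below implements that rule on tracked positions; the radius, movement tolerance, and frame count are illustrative assumptions, and coordinates are taken to be in metres.

```python
import math


def unattended_frames(object_path, people_per_frame, attend_radius=2.0, move_tol=0.2):
    """Length of the current run of frames in which a stationary object has nobody nearby.

    object_path: [(x, y), ...] one position per frame.
    people_per_frame: [[(x, y), ...], ...] positions of detected people per frame.
    """
    run = 0
    prev = None
    for pos, people in zip(object_path, people_per_frame):
        moved = prev is not None and math.dist(pos, prev) > move_tol
        attended = any(math.dist(pos, p) <= attend_radius for p in people)
        # Any movement or nearby person resets the counter.
        run = 0 if (moved or attended) else run + 1
        prev = pos
    return run


def is_abandoned(object_path, people_per_frame, min_frames=3, **kwargs):
    """True if the object has been stationary and unattended for min_frames frames."""
    return unattended_frames(object_path, people_per_frame, **kwargs) >= min_frames
```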

Behavioral anomalies

Sudden crowd dispersal, erratic movement, or suspicious loitering can indicate safety concerns. Models analyze temporal patterns to detect unusual behavior.
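A very simple stand-in for temporal anomaly detection is a rolling z-score over a per-frame scene statistic, such as the average speed of people in view. The sketch below flags values that deviate sharply from the preceding window; the window size, threshold, and `min_sigma` floor are hypothetical, and learned models would replace this in most real systems.

```python
from statistics import mean, pstdev


def rolling_anomalies(values, window=5, z_threshold=3.0, min_sigma=0.05):
    """Flag indices whose value is far from the mean of the preceding window.

    min_sigma floors the standard deviation so a perfectly flat history
    does not turn tiny fluctuations into alerts.
    """
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = max(pstdev(history), min_sigma)
        if abs(values[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged
```

A sudden crowd dispersal would appear as a spike in average speed relative to the recent baseline.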

Real-time alerting

Anomaly detection systems integrate with emergency response dispatchers. Fast alerts improve situational awareness and help prevent escalation.

The Global City Indicators Foundation emphasizes the importance of automated event detection in strengthening resilience and reducing response times (https://www.cityindicators.org).

Anomaly detection datasets must include diverse scenarios to avoid false positives and ensure reliable performance across different environments.

License Plate Detection and Recognition (LPR)

License plate recognition is one of the highest-value smart city applications because it directly supports enforcement, mobility management, and revenue collection.

Traffic enforcement

LPR identifies the vehicles involved in speeding, illegal turns, and red-light violations once those events are detected. Automated enforcement reduces manual workloads and improves compliance.

Parking systems

Parking operators use LPR to automate access control, payment validation, and occupancy monitoring. This streamlines operations and reduces congestion in urban centers.

Tolling and road pricing

LPR supports automated toll collection and congestion pricing systems. These systems improve efficiency and reduce the need for physical toll booths.

Stolen vehicle recovery

Law enforcement agencies use LPR networks to identify stolen vehicles. Automated alerts support rapid intervention.

Border and access control

LPR facilitates access management for restricted zones, ports, and industrial areas.

LPR datasets typically include vehicle images, plate crops, bounding boxes, and text labels. High-quality annotation is required for both detection and optical character recognition tasks.
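A single LPR annotation record might be represented as follows. The field names and the sanity checks are assumptions made for illustration rather than an established schema; they simply encode the elements listed above.

```python
from dataclasses import dataclass


@dataclass
class PlateAnnotation:
    """One labeled plate; all field names are hypothetical."""
    image_path: str
    vehicle_box: tuple  # (x1, y1, x2, y2) in pixels
    plate_box: tuple    # (x1, y1, x2, y2) in pixels
    plate_text: str

    def problems(self):
        """Return a list of basic consistency issues found in this record."""
        issues = []
        vx1, vy1, vx2, vy2 = self.vehicle_box
        px1, py1, px2, py2 = self.plate_box
        if not (px1 < px2 and py1 < py2):
            issues.append("plate box has non-positive size")
        if not (vx1 <= px1 and vy1 <= py1 and px2 <= vx2 and py2 <= vy2):
            issues.append("plate box is not inside vehicle box")
        if not self.plate_text.strip():
            issues.append("plate text is empty")
        return issues
```

Running checks like these over a whole dataset is a cheap first pass before more expensive human review.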

Crowd Counting and Urban Density Monitoring

Computer vision provides cities with detailed insights into crowd density, movement patterns, and spatial distribution. This supports public safety, urban planning, and event management.

Event monitoring

Concerts, festivals, and sporting events require real-time crowd counting and monitoring. Vision systems detect surges, bottlenecks, and potential risks.

Transit station analysis

Public transit hubs rely on crowd analytics to manage passenger flow and reduce congestion during peak hours.

Tourism analytics

Cities use crowd density metrics to understand how people move through tourist districts. This helps optimize signage, amenities, and mobility options.

Retail and commercial insights

Crowd analysis helps business districts model foot traffic trends and evaluate economic activity.

Academic work from the Center for Urban Science and Progress (CUSP) demonstrates how density maps derived from computer vision improve safety planning in crowded areas (https://cusp.nyu.edu).

Crowd counting datasets include annotated head points, density maps, and flow labels.
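The link between head points and density maps can be shown with a short sketch: each annotated head is replaced by a small Gaussian normalized to sum to one, so the whole map sums to the number of people. The fixed `sigma` and the pure-Python grid are simplifying assumptions; published pipelines often adapt the kernel width to local crowd density and use array libraries.

```python
import math


def density_map(head_points, width, height, sigma=1.5):
    """Place a normalised Gaussian at each annotated head; the map sums to the head count.

    head_points: [(x, y), ...] pixel coordinates inside the image.
    """
    grid = [[0.0] * width for _ in range(height)]
    for hx, hy in head_points:
        kernel = [[math.exp(-((x - hx) ** 2 + (y - hy) ** 2) / (2 * sigma ** 2))
                   for x in range(width)] for y in range(height)]
        total = sum(map(sum, kernel))
        # Normalise within the image so every head contributes exactly one.
        for y in range(height):
            for x in range(width):
                grid[y][x] += kernel[y][x] / total
    return grid
```

A counting model trained to regress such maps can then report a crowd estimate by summing its predicted map over any region of interest.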

Datasets for Smart City AI

Vehicle tracking datasets

These datasets include tracking sequences with labeled trajectories. They train models to predict movement, detect incidents, and analyze traffic flow.

Pedestrian detection datasets

These datasets capture diverse pedestrian environments across lighting, occlusion, and clothing variations. They train safety and mobility models.

Anomaly detection datasets

These datasets include unusual behaviors, fights, abandoned objects, and crowd anomalies. Creating them requires careful scenario selection and annotation.

License plate recognition datasets

These datasets include vehicle images, plate crops, bounding boxes, and text annotations. They support detection and OCR tasks.

Crowd counting datasets

These datasets include annotated density maps and head point labels. They help train models for urban density estimation.

Smart city datasets require extremely high-quality annotation due to the complexity of urban scenes and the importance of model reliability in safety-critical applications.

Annotation for Smart City Computer Vision

Bounding box annotation

Bounding boxes are used to label vehicles, pedestrians, traffic signs, and license plates for detection. They must be drawn consistently across varying camera angles and lighting conditions.

Instance segmentation

Segmentation masks provide detailed shapes for vehicles, pedestrians, and objects. This supports precise tracking and distance estimation.

Trajectory annotation

Trajectory labels show how objects move across frames. They are essential for tracking models and for analyzing traffic flow.

Event labeling

Annotators label safety events such as near misses, collisions, and anomalies. Clear definitions are required to avoid ambiguity.

OCR annotation for LPR

Plate text must be precisely transcribed. Accuracy is critical, especially in multilingual regions or where plates use special characters.
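Transcription accuracy is commonly measured with the character error rate (CER), the edit distance between two strings divided by the reference length. The sketch below computes it with a standard Levenshtein implementation; using it to compare two annotators' transcriptions of the same plate is one possible quality-control check, offered here as an assumption rather than a prescribed workflow.

```python
def edit_distance(a, b):
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(reference, hypothesis):
    """Edit distance normalised by the reference length."""
    if not reference:
        return 0.0 if not hypothesis else 1.0
    return edit_distance(reference, hypothesis) / len(reference)
```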

Annotation complexity makes quality control essential. Errors in annotation can lead to unreliable models that fail in real-world conditions.

Challenges in Smart City Computer Vision

Lighting variation

Day and night conditions vary significantly. Shadows, glare, and artificial lighting complicate detection tasks.

Occlusions

Vehicles and pedestrians frequently overlap. Models must handle partial visibility without losing track of objects.

Weather conditions

Rain, fog, snow, and dust reduce visibility. Models trained in clear conditions may fail during adverse weather unless datasets include diverse conditions.

Camera angle diversity

Cameras across a city may include overhead, angled, fisheye, and wide-angle views. Models must generalize across these perspectives.

Privacy requirements

Smart city systems must comply with privacy regulations and avoid unnecessary collection of personally identifiable information.

Real-time constraints

Many smart city applications require low-latency processing. This puts pressure on model efficiency and hardware performance.

Building robust models requires careful dataset design, extensive testing, and strong data governance practices.

Future of Smart City Computer Vision

Edge AI processing

Processing video on the camera or at the edge reduces latency and bandwidth usage. Edge-based systems are becoming standard for real-time detection.

Multisensor fusion

Combining video with radar, LiDAR, and IoT sensors enriches urban intelligence. Multimodal systems can outperform single-sensor solutions, since each sensor covers conditions where another is weak.

Self-supervised learning

Models will increasingly learn from unlabeled video streams, which could substantially reduce labeling costs.

Predictive analytics

Future city platforms will not only detect events but also forecast them. This enhances planning and risk mitigation.

Privacy-preserving models

Techniques such as on-device anonymization and federated learning will help balance safety with privacy.
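As a minimal illustration of on-device anonymization, the sketch below pixelates a detected region (for example a face or plate box) before a frame leaves the camera. The grayscale nested-list frame format and the function name are assumptions, and pixelation is only one of several possible redaction methods.

```python
def pixelate_region(frame, box, block=4):
    """Replace each block x block tile inside box with its mean intensity.

    frame: list of rows of integer intensities (assumed format).
    box: (x1, y1, x2, y2) with exclusive upper bounds.
    Returns a new frame; the input is left unchanged.
    """
    x1, y1, x2, y2 = box
    out = [row[:] for row in frame]
    for ty in range(y1, y2, block):
        for tx in range(x1, x2, block):
            ys = range(ty, min(ty + block, y2))
            xs = range(tx, min(tx + block, x2))
            tile_mean = sum(frame[y][x] for y in ys for x in xs) // (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = tile_mean
    return out
```

Applying redaction before transmission means that downstream analytics only ever receive the anonymized frames.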

City-scale vision networks

Large integrated networks will combine hundreds of camera feeds into unified analytics frameworks. These systems support more coordinated decision-making.

The evolution of smart city computer vision will rely on scalable data practices and advanced AI architectures.

Conclusion

Smart city computer vision has become a foundational technology for modern urban management. By interpreting video in real time, AI systems help cities optimize traffic flow, reduce accidents, monitor public safety, and understand pedestrian movement. These systems depend on well-designed datasets, accurate annotation, and robust model architectures that can handle the complexity of urban environments. As cities continue to digitize their infrastructure, computer vision will play a central role in shaping safer, more efficient, and more sustainable metropolitan systems.

If your organization needs high-quality datasets for traffic analytics, anomaly detection, LPR, or smart city video monitoring, DataVLab can help.

We design, annotate, and quality-control complex computer vision datasets for urban AI.