Understanding the Role of Clinical Annotation in Medical AI

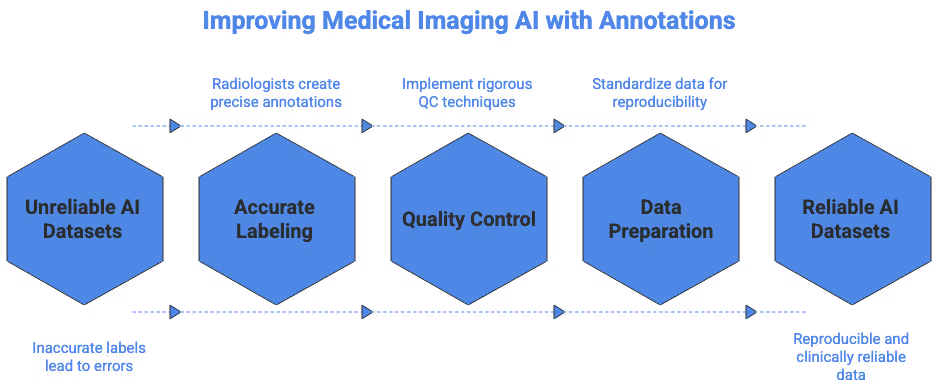

Clinical annotation marks the point where raw medical imaging becomes structured, interpretable data suitable for machine learning. Healthcare AI systems depend on annotated images to learn what tumors, lesions, vessels or tissue types look like across a wide range of patient cases. Without consistent labels created under clinically rigorous supervision, even advanced deep learning architectures struggle to generalize safely. This is why experienced radiologists, pathologists and annotation specialists remain essential in every imaging AI project. Their expertise ensures that anatomical boundaries, pathological findings and subtle imaging features are captured with the precision needed for diagnostic-grade AI. For teams building clinical-grade systems, a strong annotation workflow often matters more than hyperparameter tuning.

Why High Fidelity Matters for CT, MRI and Pathology Data

CT, MRI and pathology modalities each present distinct challenges, and understanding those challenges is key for producing reproducible datasets. CT relies on Hounsfield units, windowing and cross-plane consistency, making it sensitive to small delineation errors around vascular structures or organ boundaries. MRI introduces variations across T1, T2, FLAIR and contrast sequences, requiring annotators to understand appearance differences caused by acquisition physics. Pathology introduces gigapixel-scale whole-slide images, staining variability and extremely fine morphological details at the cellular level. In each modality, clinical precision directly determines how well models can learn. Even small inconsistencies can propagate through training pipelines and create unreliable predictions when deployed in real hospitals.

Preparing Medical Imaging Data for Annotation

High-quality annotation always begins with correctly prepared imaging data. Before radiologists or annotators begin drawing masks or identifying findings, teams must ensure that the dataset is clean, anonymized and consistent. Preprocessing workflows typically include DICOM metadata checks, slice-ordering validation, field-of-view normalization and contrast harmonization where appropriate. Many AI projects fail because dataset preparation is rushed, leading to heterogeneous inputs that confuse both human annotators and downstream models. Clinical annotation teams must therefore begin by verifying that the dataset structure mirrors real clinical environments while remaining legally compliant and technically standardized.

DICOM Integrity and Metadata Normalization

DICOM files contain essential technical information such as slice thickness, acquisition parameters, and imaging orientation. Missing or inconsistent metadata often leads to incorrect 3D reconstructions or misaligned masks. Before annotation begins, teams must validate the consistency of study and series identifiers, check pixel spacing across slices, and confirm that reconstruction kernels are stable across the dataset. Institutions like the Radiological Society of North America provide reference materials on imaging standardization that help teams avoid errors during early data preparation.
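The checks described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: it assumes each slice's metadata has already been read into a plain dictionary, and the key names (`series_uid`, `pixel_spacing`, `z_position`, `kernel`) and the tolerance are hypothetical choices made for the example.

```python
from collections import Counter

def check_series_consistency(slices, spacing_tol=1e-3):
    """Return a list of human-readable problems found in one series.

    Each slice is a plain dict with hypothetical keys:
    'series_uid', 'pixel_spacing' (row_mm, col_mm), 'z_position', 'kernel'.
    """
    if not slices:
        return ["series is empty"]
    problems = []

    # All slices in a series should share one series identifier.
    uids = {s["series_uid"] for s in slices}
    if len(uids) > 1:
        problems.append(f"mixed series identifiers: {sorted(uids)}")

    # In-plane pixel spacing should be identical on every slice.
    ref_spacing = slices[0]["pixel_spacing"]
    for i, s in enumerate(slices):
        if any(abs(a - b) > spacing_tol for a, b in zip(s["pixel_spacing"], ref_spacing)):
            problems.append(f"slice {i}: pixel spacing {s['pixel_spacing']} != {ref_spacing}")

    # The reconstruction kernel should not change within the series.
    kernels = Counter(s["kernel"] for s in slices)
    if len(kernels) > 1:
        problems.append(f"mixed reconstruction kernels: {dict(kernels)}")

    # After sorting by position, the gaps between slices should be uniform.
    z = sorted(s["z_position"] for s in slices)
    gaps = [b - a for a, b in zip(z, z[1:])]
    if gaps and max(gaps) - min(gaps) > spacing_tol:
        problems.append(f"non-uniform slice gaps: min {min(gaps):.3f}, max {max(gaps):.3f}")

    return problems
```

An empty result means the series passed these particular checks. It does not prove the series is fit for annotation, so a real pipeline would likely add orientation and acquisition-parameter checks as well.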

PHI Removal and Data Privacy Controls

Because medical images contain protected health information, strict anonymization is required. Annotators should only receive data that has been cleansed of identifiers, including embedded burn-ins on CT slices or pathology slide labels in the corner of whole-slide images. Full audit trails and access controls help maintain privacy, and anonymization should be validated by someone other than the person who performed it. In hospital environments, data privacy requirements may be governed by regional regulations, but the clinical objective remains the same: provide annotators with high-quality images containing zero patient-specific identifiers.
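As a rough illustration of the independent validation step, the sketch below scans already-extracted metadata for identifying attributes that still carry a value. The attribute names are standard DICOM keywords, but the short list is only an illustrative subset chosen for this example, and the function name is hypothetical. The check covers metadata only; burned-in text in the pixel data or on slide labels needs a separate review.

```python
# Illustrative subset of identifying DICOM attributes; a real deployment
# would follow a full de-identification profile, not this short list.
IDENTIFYING_KEYS = (
    "PatientName",
    "PatientID",
    "PatientBirthDate",
    "AccessionNumber",
    "InstitutionName",
)

def find_residual_identifiers(metadata):
    """Return the identifying keys that still carry a non-empty value."""
    residual = []
    for key in IDENTIFYING_KEYS:
        value = metadata.get(key)
        # Missing keys and blank strings both count as cleared.
        if value is not None and str(value).strip():
            residual.append(key)
    return residual
```

A non-empty result is a reason to send the file back to the anonymization step rather than on to annotators.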

CT Annotation: Technical Considerations and Workflow Nuances

CT annotation is often the backbone of medical AI systems designed for detection, segmentation or triage. The grayscale intensity values, relatively high resolution and cross-plane consistency make CT suitable for tasks such as organ segmentation, lung nodule identification or trauma assessment. However, CT’s dependence on windowing, contrast phases and consistent slice orientation requires clear annotation protocols. Annotators must understand clinical context so they can interpret density patterns and differentiate noise from pathology. CT datasets should also account for the fact that radiologists interpret images with interactive window adjustments, which should ideally be replicated or standardized before annotation.

Handling Windowing and Contrast Variability

Different CT tasks require different window settings, such as lung, soft tissue or bone windows. A density value that appears pathological in one window may appear benign in another. This means annotation guidelines must specify exactly how annotators should adjust windows during review. For example, lung nodule segmentation should occur using lung windows, while liver lesion annotation may require switching between multiple window presets. Annotators should also evaluate contrast phases consistently, especially for abdominal imaging. Multi-phase CT studies introduce arterial, portal venous and delayed phases, which influence the visibility of lesions and vascular structures.
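To make the effect of windowing concrete, here is a minimal sketch of how a window maps Hounsfield units to display gray levels. The preset values are commonly quoted (center, width) pairs and should be treated as assumptions; the project's own protocol should supply the real ones. The names `WINDOW_PRESETS` and `apply_window` are hypothetical.

```python
# Commonly quoted presets as (center, width) in Hounsfield units; treat the
# exact numbers as assumptions to be replaced by the project's own protocol.
WINDOW_PRESETS = {
    "lung": (-600, 1500),
    "soft_tissue": (40, 400),
    "bone": (400, 1800),
}

def apply_window(hu_values, center, width):
    """Map Hounsfield units to 0-255 display values for one window."""
    low = center - width / 2
    high = center + width / 2
    display = []
    for hu in hu_values:
        # Values outside the window saturate to black or white.
        clipped = min(max(hu, low), high)
        display.append(round(255 * (clipped - low) / (high - low)))
    return display

# The same voxel (60 HU) rendered under two presets.
lung_view = apply_window([60], *WINDOW_PRESETS["lung"])
soft_view = apply_window([60], *WINDOW_PRESETS["soft_tissue"])
```

With these presets the same 60 HU voxel is rendered much brighter in the lung window than in the soft tissue window, which is why guidelines need to fix the window used for each task.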

Managing Slice Thickness and Reconstruction Kernels

CT slice thickness determines how much anatomical detail is preserved in the axial dimension. Models trained on mixtures of 1 mm, 3 mm and 5 mm slices often generalize poorly. Annotation should therefore occur on consistent reconstructions or on the highest resolution version available, even if clinical radiologists typically review multiple thicknesses. Reconstruction kernels such as soft, medium or bone kernels also affect texture and edge definition. These factors must be specified before annotation begins to ensure that the resulting labels reflect a standardized imaging environment.
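One way to apply the "highest resolution version available" rule is to pick, for each study, the reconstruction with the smallest slice thickness. The sketch below assumes series metadata is available as dictionaries with hypothetical keys `study_id`, `series_id` and `slice_thickness_mm`. It ignores reconstruction kernels, which a real selection rule would also need to pin down.

```python
def select_thinnest_series(series_list):
    """For each study, pick the series with the smallest slice thickness."""
    best = {}
    for series in series_list:
        current = best.get(series["study_id"])
        if current is None or series["slice_thickness_mm"] < current["slice_thickness_mm"]:
            best[series["study_id"]] = series
    # Report only the chosen series identifier per study.
    return {study: s["series_id"] for study, s in best.items()}
```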

Real-World Clinical Example: Liver Lesion Segmentation

Liver imaging is a common AI use case because of the complexity of lesion classification and segmentation. Liver lesions vary widely in shape, enhancement pattern and boundary clarity. Annotators must understand how lesions present across contrast phases and ensure that boundaries are drawn consistently even when the lesion edge appears faint. Resources such as the Stanford Radiology teaching files offer useful CT references that help teams calibrate annotation decisions.

MRI Annotation: Challenges of Tissue Contrast, Sequences and Artifacts

MRI annotation introduces complexity that is fundamentally different from CT. The modality’s strengths in soft tissue contrast also create variability that requires deep clinical knowledge. T1, T2, FLAIR, DWI, ADC and contrast-enhanced sequences each present different visual characteristics. Because MRI has no standardized intensity scale, MRI segmentation tasks require clear guidelines on sequence selection. Teams should avoid mixing sequences when the clinical objective depends heavily on tissue contrast. Annotators must also be trained to identify motion artifacts, susceptibility artifacts and noise patterns that can distort region boundaries.

Sequence Selection for Annotation Consistency

Some AI tasks require multi-sequence labeling, while others rely on a single sequence. For example, white matter lesion segmentation typically uses FLAIR, while tumor delineation may require T1 post contrast. Annotation guidelines should identify which sequences represent the ground truth for each structure or pathology. Without clear rules, annotators may misinterpret contrast behavior or overlook subtle features. MRI physics resources such as Radiopaedia’s MRI basics page are valuable for calibrating annotation teams.

Artifact Recognition and Its Impact on Label Quality

Artifacts in MRI can mimic pathology or distort shapes, leading to inconsistent annotation. Motion artifacts may create ghosting that appears as duplicated anatomy, while susceptibility artifacts can create signal dropouts near air or metal. Annotators should be trained to recognize these patterns so they do not treat them as anatomical boundaries. In tumor segmentation tasks, misinterpreting artifact signals can cause significant mask deviations, particularly near skull base regions or postoperative areas.

Real-World Clinical Example: Brain Tumor Segmentation

MRI brain tumor annotation is one of the most studied areas in medical AI. Tumors such as glioblastomas contain multiple subregions including enhancing core, non-enhancing areas and edema visible on FLAIR. These contrasts vary across sequences, and annotators must understand how each sequence contributes to accurate boundary delineation. Research communities such as MICCAI have produced benchmark challenges that illustrate best practices for tumor segmentation.

Pathology Annotation: Gigapixels, Morphology and Cell-Level Precision

Pathology annotation requires the highest spatial precision among all clinical imaging modalities. Whole-slide images may exceed 80,000 by 80,000 pixels, and annotators must interpret cellular morphology, tissue patterns and staining quality. Unlike CT and MRI, pathology images often come from multiple scanners and staining protocols, creating color and texture variation that models must learn to generalize across. Annotators must be trained in histological structures and boundaries because the differences between normal tissue and early pathological change can be subtle. These factors make pathology annotation both resource-intensive and clinically sensitive.
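The scale becomes clearer with a little arithmetic. The sketch below counts how many tiles are needed to cover a slide; the 512-pixel tile size and 64-pixel overlap are assumptions chosen for illustration, and `tile_grid` is a hypothetical helper.

```python
import math

def tile_grid(width_px, height_px, tile_px, overlap_px=0):
    """Return (columns, rows, total) tiles needed to cover a slide."""
    if not 0 <= overlap_px < tile_px:
        raise ValueError("overlap must be non-negative and smaller than the tile size")
    # Each new tile advances by the tile size minus the overlap.
    stride = tile_px - overlap_px
    cols = max(1, math.ceil((width_px - overlap_px) / stride))
    rows = max(1, math.ceil((height_px - overlap_px) / stride))
    return cols, rows, cols * rows

print(tile_grid(80_000, 80_000, 512))      # (157, 157, 24649)
print(tile_grid(80_000, 80_000, 512, 64))  # (179, 179, 32041)
```

Under these assumptions a single 80,000 by 80,000 slide produces roughly 25,000 to 32,000 tiles, which helps explain why pathology annotation is so resource-intensive.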

Stain Variability and Color Normalization

Different labs produce slides with varying stain saturation, hue and contrast. Hematoxylin and eosin stains, for example, may appear more purple or more pink depending on preparation. AI applications must learn across this variability, but annotation teams should still standardize slide appearance through color normalization. Clear guidelines ensure that annotators interpret morphology under consistent conditions. Resources from the University of Chicago Pathology Atlas provide reference examples of staining and tissue structures.

Boundary Identification at Cellular Scale

Pathology annotation often requires marking precise boundaries around nuclei, glands or tumor margins. Errors of even a few pixels can significantly affect model training when operating at this scale. Annotators should understand morphological cues such as nuclear pleomorphism, mitotic figures or glandular differentiation. They also need experience identifying preparation artifacts like tissue folding or uneven staining, which should not be included in pathologic regions.

Real-World Clinical Example: Tumor Margin Delineation

Tumor margin annotation is one of the most critical tasks in pathology AI. Annotators must determine where malignant tissue transitions to normal tissue, which may not always present a sharp boundary. They must review multiple magnification levels and consider structural patterns such as invasive fronts or irregular stromal changes. Pathology Outlines provides helpful morphological explanations that assist in training annotators on tumor behavior.

Building Annotation Protocols That Radiologists and Pathologists Trust

Clinical annotation guidelines must reflect true medical practice, not simplified assumptions made by AI teams. Good protocols explain not only what to label but also why specific structures are considered clinically meaningful. They establish definitions for ambiguous cases, provide examples of acceptable variations, and outline steps for decision making when findings are not clear. These guidelines also need to be validated by clinical experts who understand how the model will eventually be used in real patient care.

Establishing Ground Truth and Handling Ambiguous Cases

Most clinical datasets contain ambiguous or borderline findings. Annotation protocols need to specify how annotators should handle uncertain cases, whether by marking them as ambiguous, requesting expert review or excluding them. Image collections from open-access radiology or pathology sources help teams define these ambiguity categories scientifically. For pathology specifically, reference materials from Harvard’s Countway Library can improve consistency in morphological interpretations.

Ensuring Multi-Expert Consensus

For sensitive tasks such as tumor segmentation or multi-class organ delineation, a single annotator is rarely enough. Clinical consensus workflows often involve multiple radiologists or pathologists reviewing the same case. When disagreements arise, adjudication steps ensure that the final ground truth reflects expert consensus. These adjudication cycles produce more robust datasets and improve model performance in high-risk environments.
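A simple way to prepare for adjudication is to combine the experts' masks by vote and flag every pixel on which they disagree. The sketch below is an illustrative simplification, not a substitute for expert adjudication: it assumes binary masks flattened to equal-length lists of 0 and 1, and the function name and majority threshold are assumptions made for the example.

```python
def consensus_mask(masks, min_agreement=None):
    """Combine binary masks (equal-length lists of 0/1) by voting.

    Returns (consensus, disputed), where disputed marks pixels on which the
    annotators were not unanimous and which may deserve adjudication.
    """
    if not masks:
        raise ValueError("need at least one mask")
    if any(len(m) != len(masks[0]) for m in masks):
        raise ValueError("masks must have the same length")
    n = len(masks)
    if min_agreement is None:
        min_agreement = n // 2 + 1  # strict majority
    consensus, disputed = [], []
    for votes in zip(*masks):
        positive = sum(votes)
        consensus.append(1 if positive >= min_agreement else 0)
        disputed.append(1 if 0 < positive < n else 0)
    return consensus, disputed
```

The disputed map is often the more useful output, since it tells the adjudicating expert where to look instead of asking for a full re-review of every case.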

Quality Control Techniques in Clinical Annotation

Quality control is essential for preventing mislabeled samples from entering training pipelines. QC workflows include multiple rounds of review, blind validation sessions and statistical audits of annotator performance. Clinical QC teams also examine annotator drift over long projects, ensuring that performance does not decline as fatigue or familiarity increases. When QC identifies issues, feedback sessions help calibrate annotators and restore consistency. These steps are particularly important for segmentation tasks, where minor errors accumulate and degrade model reliability.
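Annotator drift can be monitored with a very simple statistic. The sketch below compares a rolling mean of per-case QC scores (for example, agreement with a reviewer) against the annotator's own early baseline. The window size, the allowed drop and the function name are all assumptions, and a production audit would likely use a more careful statistical test.

```python
def detect_drift(scores, window=5, max_drop=0.05):
    """Flag case indices where the rolling mean falls below the baseline.

    The baseline is the mean of the first `window` scores; an index is
    flagged when the mean of the window ending there is more than
    `max_drop` below that baseline.
    """
    if len(scores) < window:
        return []
    baseline = sum(scores[:window]) / window
    flagged = []
    for end in range(window, len(scores) + 1):
        recent = sum(scores[end - window:end]) / window
        if baseline - recent > max_drop:
            flagged.append(end - 1)
    return flagged
```

Flagged indices are a prompt for a feedback session, not proof of an error, because a run of unusually difficult cases lowers scores as well.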

Double-Review and Performance Monitoring

Most medical imaging AI projects require at least two layers of review. The first review corrects obvious errors, while the second evaluates subtle inaccuracies in boundary definition. Metrics such as Dice similarity, Hausdorff distance and slice-level consistency are commonly used to evaluate segmentation quality. Reviewers should also examine error patterns to identify whether an annotator consistently under- or over-segments certain structures.
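For reference, the two most common metrics can be written out directly. This is a minimal sketch that represents each mask as a collection of foreground pixel coordinates. The Hausdorff implementation is a brute-force version suitable only for small masks, and treating two empty masks as perfect agreement is a convention assumed here.

```python
import math

def dice(mask_a, mask_b):
    """Dice similarity between two sets of foreground pixel coordinates."""
    a, b = set(mask_a), set(mask_b)
    if not a and not b:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    return 2 * len(a & b) / (len(a) + len(b))

def hausdorff(mask_a, mask_b):
    """Symmetric Hausdorff distance (in pixels) between two point sets."""
    a, b = set(mask_a), set(mask_b)
    if not a or not b:
        raise ValueError("Hausdorff distance is undefined for an empty mask")

    def directed(src, dst):
        # Largest distance from any source point to its nearest target point.
        return max(min(math.dist(p, q) for q in dst) for p in src)

    return max(directed(a, b), directed(b, a))

annotator = {(0, 0), (0, 1), (1, 0), (1, 1)}
reviewer = {(0, 1), (1, 1), (0, 2), (1, 2)}
print(dice(annotator, reviewer))       # 0.5
print(hausdorff(annotator, reviewer))  # 1.0
```

Dice summarizes overall overlap while Hausdorff distance reports the single worst boundary error, so the two are usually read together. Distances here are in pixels, and converting them to millimeters requires the pixel spacing from the image metadata.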

Continuous Calibration and Expert Checkpoints

Calibration sessions help align teams throughout long-term annotation projects. Annotators review challenging cases together and discuss how guidelines apply in ambiguous situations. Expert checkpoints ensure that annotated data stays aligned with clinical standards as new cases or pathology variations appear. Calibration also builds institutional knowledge, improving annotator performance across multiple medical imaging projects.

Common Pitfalls and How to Avoid Them

Even highly skilled teams encounter challenges when annotating complex clinical datasets. Common pitfalls include inconsistent segmentation boundaries, mixing incompatible sequences, annotating the wrong anatomical plane or misunderstanding pathology morphology. Poorly written guidelines or insufficient examples also lead to variation. By designing workflows that anticipate these problems, AI teams can create reliable datasets suitable for clinical deployment. Preventing errors upfront is far more efficient than repairing mislabeled data later.

Modality-Specific Mistakes and Their Consequences

CT errors may include mislabeling vessels as lesions due to windowing issues or misinterpreting beam-hardening artifacts as pathology. MRI errors frequently occur when annotators rely on the wrong sequence for a given structure. Pathology errors can involve over-segmentation caused by staining noise or under-segmentation caused by misinterpreting tissue architecture. Teams must regularly audit error logs to identify recurring issues and adjust guidelines accordingly.

Bringing It All Together: Real-World Examples of Effective Clinical Annotation

Successful clinical annotation projects often involve multidisciplinary collaboration. Radiologists, pathologists, data scientists and annotation specialists work together to define tasks, build guidelines and ensure high fidelity in the final dataset. These projects may involve segmenting brain tumors, mapping vascular anatomy, identifying lung nodules or delineating tumor margins in whole-slide images. The most successful teams adopt structured workflows, frequent calibration and strong clinical involvement. In every modality, the combination of expert knowledge and clear process design leads to reliable datasets capable of powering diagnostic-grade AI.

If You Are Working on a Medical Imaging Project

If you are working on an AI or medical imaging project, our team at DataVLab would be glad to support you. Whether you need segmentation, classification, pathology labeling or multi-modal dataset preparation, we can help you build clinically rigorous datasets with expert quality control.