Why Spectral Differences Matter in Satellite Imagery 🌐

Satellite imagery is not just about capturing what the human eye can see. RGB images record three bands of visible light (red, green, blue). Multispectral sensors also record wavelengths beyond human vision, such as near-infrared (NIR), shortwave infrared (SWIR), thermal infrared, and, on some instruments, ultraviolet (UV).

Each of these bands carries unique information:

- RGB: Great for visual interpretation, road mapping, and basic object detection.

- NIR: Vital for assessing vegetation health, biomass, and water bodies.

- SWIR: Useful for detecting moisture, soil types, and geological features.

- Thermal IR: Enables heat mapping for fire detection or energy audits.

Choosing the correct annotation strategy depends heavily on which spectral bands are available and what insights are being targeted.

When to Use RGB Annotation 🖼️

RGB annotation is often the first step in building AI models for satellite image analysis. Here’s why:

Ideal for Human-Interpretable Tasks

RGB images are the most intuitive for annotators. They're ideal for tasks like:

- Urban mapping

- Road segmentation

- Building detection

- Traffic monitoring

Simplifies Labeling Complexity

Since RGB images mimic what we naturally see, object boundaries and features are easier to identify. This reduces training time for annotation teams and improves initial label consistency.

Lower Storage and Processing Overhead

RGB imagery generally consumes less bandwidth and storage compared to multispectral data, making it more accessible for training and inference in resource-limited environments.
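A back-of-the-envelope calculation makes the storage gap concrete. The sketch below is illustrative and rests on three assumptions: a hypothetical 10,000 x 10,000 pixel scene, 8-bit RGB compared with 13 bands stored as 16-bit integers, and all bands on one common pixel grid. It ignores compression.

```python
def raster_size_bytes(height: int, width: int, bands: int, bytes_per_sample: int) -> int:
    """Uncompressed raster size: one sample per pixel per band."""
    return height * width * bands * bytes_per_sample

# Hypothetical 10,000 x 10,000 pixel scene.
rgb = raster_size_bytes(10_000, 10_000, bands=3, bytes_per_sample=1)   # 8-bit RGB
ms = raster_size_bytes(10_000, 10_000, bands=13, bytes_per_sample=2)   # 13 bands, 16-bit

print(f"RGB:           {rgb / 1e9:.1f} GB")  # 0.3 GB
print(f"Multispectral: {ms / 1e9:.1f} GB")   # 2.6 GB
print(f"Ratio:         {ms / rgb:.1f}x")     # 8.7x
```

Real products compress well and often store bands at different resolutions, so actual ratios will vary. The calculation does show why band count and bit depth dominate storage and transfer costs.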

When Multispectral Annotation Shines ✨

Multispectral data is more complex to work with, but it carries information that RGB cannot capture. Here's where it excels:

Environmental Monitoring

Multispectral annotation is critical for tasks such as:

- Spectral index calculation (e.g., NDVI for vegetation, NDWI for water)

- Soil classification

- Water quality monitoring

- Pollution mapping

For example, NIR and SWIR bands can reveal vegetation stress before it becomes visible in RGB images.

Disaster Detection and Risk Mapping

Use cases include:

- Wildfire boundaries (thermal + SWIR)

- Flood detection (NIR + visible spectrum)

- Land degradation (long-term spectral trend analysis)

Multispectral annotation helps AI detect subtleties such as waterlogged soil or burnt vegetation, which RGB often misses.
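Flood mapping often starts from a water index that contrasts visible green with NIR, because open water reflects very little NIR. The sketch below makes three assumptions:

- The green and NIR bands are co-registered reflectance arrays.
- The index is McFeeters' NDWI.
- The threshold is 0, a common but illustrative starting point.

It produces a candidate water mask that annotators could refine instead of drawing flood polygons from scratch.

```python
import numpy as np

def ndwi(green: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """McFeeters NDWI = (Green - NIR) / (Green + NIR); open water tends toward positive values."""
    green = green.astype(np.float64)
    nir = nir.astype(np.float64)
    denom = green + nir
    # Return 0 where the denominator is 0 (e.g. no-data pixels) instead of dividing by zero.
    return np.divide(green - nir, denom, out=np.zeros_like(denom), where=denom != 0)

def water_mask(green: np.ndarray, nir: np.ndarray, threshold: float = 0.0) -> np.ndarray:
    """Boolean mask of candidate water pixels; the threshold is a tunable assumption."""
    return ndwi(green, nir) > threshold

# Toy 2 x 2 reflectance values: top-left and bottom-right behave like water.
green = np.array([[0.10, 0.08], [0.06, 0.12]])
nir = np.array([[0.05, 0.30], [0.35, 0.04]])
print(water_mask(green, nir))
```

In practice the threshold usually needs tuning per scene, for example where turbid water or shadows blur the contrast.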

Defense and Intelligence

Military applications use multispectral imaging to:

- Detect camouflaged assets

- Identify underground structures via thermal discrepancies

- Distinguish between natural and artificial materials

The extra bands become strategic assets in these use cases.

The Annotation Strategy Dilemma: RGB vs. Multispectral 🧠

Choosing between RGB and multispectral annotation isn't just about resolution or image size. It's about aligning the data with your project's goals. Every satellite AI application calls for a careful evaluation of which kind of imagery offers the most actionable insight. Sometimes that means working with RGB images for speed and familiarity. Other times it means using multispectral inputs to surface environmental signals invisible to the naked eye.

Let’s explore the core dilemmas and trade-offs in detail:

🎯 Project Objectives vs. Spectral Requirements

A project focused on building footprint extraction in urban environments can be completed efficiently using RGB imagery alone. However, a task like crop disease detection, which depends on early-stage diagnosis, typically calls for multispectral annotation, particularly of the NIR and red-edge bands. Aligning spectral data with your project's end use ensures you're not over-engineering (or underdelivering) your pipeline.

Ask yourself:

- Will the visible spectrum suffice to solve the problem?

- Are there known spectral indicators (like NDVI) associated with your task?

🧩 Ease of Annotation vs. Depth of Insight

RGB is easier to annotate, train on, and validate. You can recruit a wider pool of annotators and get faster turnaround. In contrast, multispectral annotation often requires specialist interpretation, dedicated visualization tools, and more extensive QA workflows. This trade-off becomes more complex as datasets grow in size.

If timeline, budget, or workforce availability is tight, it might make sense to start with RGB, then layer multispectral insights as needed.

🗂️ Data Volume vs. Label Accuracy

Multispectral data increases the richness of input, but also expands:

- File sizes

- Preprocessing pipelines

- Label management complexity

That said, the added complexity can be offset by improved model performance, especially in nuanced tasks like:

- Differentiating healthy vs. stressed vegetation

- Identifying types of water bodies

- Detecting early-stage urban sprawl

With modern storage and compute power more accessible, the barrier to using multispectral data is shrinking—but strategic planning is still essential.

🔄 Model Flexibility vs. Annotation Investment

Models trained on RGB data are often easier to deploy across different domains (e.g., drones, mobile cameras, web apps), because RGB imagery is ubiquitous. Multispectral models, while highly specialized and accurate, may require more effort to port, retrain, or adapt to non-satellite datasets.

For instance:

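- A multispectral model trained on Sentinel-2 imagery cannot be applied directly to RGB drone images. It expects bands the drone camera does not capture, so it must be retrained or adapted. Differences in resolution and radiometry still have to be handled as well.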

- Conversely, a generalist RGB model may lack the precision needed for ecological classification unless paired with auxiliary data.
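One practical step toward RGB compatibility is to train or evaluate on the RGB subset of a multispectral stack, so the model sees only the channels a standard camera provides. In Sentinel-2, bands B04, B03 and B02 are red, green and blue.

The sketch below assumes a hypothetical 13-band stack in (bands, height, width) layout, with bands in the order listed in `S2_BANDS`. Check the band order of your own product before relying on it.

```python
import numpy as np

# Assumed band order of a hypothetical 13-band Sentinel-2 stack with shape (C, H, W).
S2_BANDS = ["B01", "B02", "B03", "B04", "B05", "B06", "B07",
            "B08", "B8A", "B09", "B10", "B11", "B12"]

def select_bands(stack: np.ndarray, band_names: list[str], wanted: list[str]) -> np.ndarray:
    """Return the requested bands, in the requested order, from a (C, H, W) stack."""
    index = {name: i for i, name in enumerate(band_names)}
    missing = [b for b in wanted if b not in index]
    if missing:
        raise KeyError(f"bands not in stack: {missing}")
    return stack[[index[b] for b in wanted]]

stack = np.random.default_rng(0).random((13, 64, 64))
rgb = select_bands(stack, S2_BANDS, ["B04", "B03", "B02"])  # red, green, blue
print(rgb.shape)  # (3, 64, 64)
```

Band selection alone does not close the domain gap. Drone cameras differ in spectral response, resolution and calibration, so some fine-tuning on target imagery is usually still needed.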

Bottom line:

There’s no one-size-fits-all. The smartest annotation strategies blend speed, scale, and spectral specificity depending on the mission-critical needs of your AI system.

Labeling Challenges Unique to Multispectral Data 🌈

Unlike RGB, multispectral annotation introduces a layer of abstraction. Here are the common hurdles:

Interpretation Gap

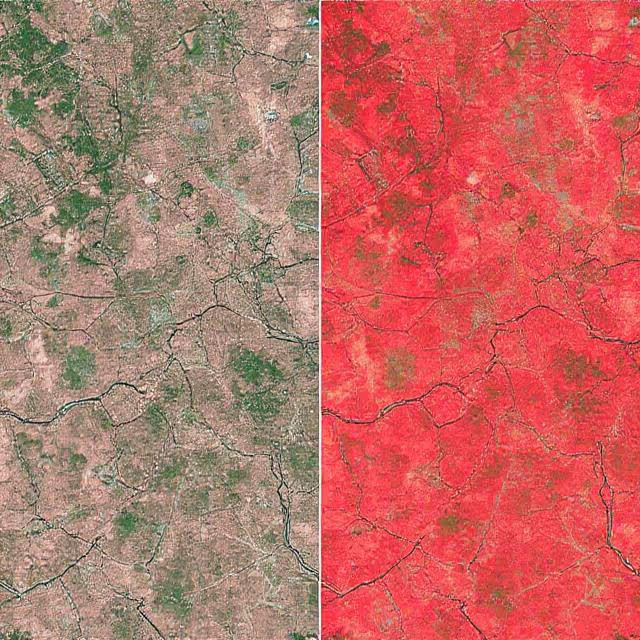

Many multispectral bands fall outside the visible range, so they cannot be viewed directly. NIR and SWIR data must be rendered as false-color composites, often using combinations like:

- Color Infrared (CIR): NIR as red, red as green, green as blue

- Urban False Color: SWIR-2 as red, SWIR-1 as green, red as blue

This adds a cognitive burden for annotators unfamiliar with spectral mapping.
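A Color Infrared composite can be built by assigning bands to display channels and stretching each one for contrast. Healthy vegetation reflects strongly in NIR, so it appears bright red.

The sketch below makes three assumptions:

- The three bands are co-registered 2-D arrays.
- The stretch is a 2nd-98th percentile linear stretch, a common but arbitrary choice.
- Each band is stretched independently, which improves contrast but changes the relative brightness between bands.

```python
import numpy as np

def stretch(band: np.ndarray, low_pct: float = 2, high_pct: float = 98) -> np.ndarray:
    """Linearly map the [low_pct, high_pct] percentile range to [0, 1], clipping outliers."""
    lo, hi = np.percentile(band, [low_pct, high_pct])
    if hi <= lo:  # constant band: nothing to stretch
        return np.zeros_like(band, dtype=np.float64)
    return np.clip((band - lo) / (hi - lo), 0.0, 1.0)

def cir_composite(nir: np.ndarray, red: np.ndarray, green: np.ndarray) -> np.ndarray:
    """Color Infrared: NIR -> red channel, red -> green channel, green -> blue channel."""
    return np.dstack([stretch(nir), stretch(red), stretch(green)])  # (H, W, 3) for display

rng = np.random.default_rng(0)
nir, red, green = (rng.random((32, 32)) for _ in range(3))
img = cir_composite(nir, red, green)
print(img.shape)  # (32, 32, 3)
```

Logging the stretch parameters alongside the band assignment helps annotators see the same image on every session.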

Band-Specific Preprocessing

Before annotation, multispectral images often require:

- Atmospheric correction

- Cloud masking

- Radiometric normalization

Failing to standardize preprocessing across images may lead to inconsistent annotations and reduced model generalizability.
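One way to standardize is to compute normalization statistics once over the whole dataset and apply them to every image. Stretching each image independently is the alternative to avoid, because it can make the same surface look different from scene to scene.

The sketch below assumes that a per-pixel cloud mask already exists, whether supplied with the product or produced by a separate detector. It does not cover atmospheric correction or cloud detection. It only shows consistent per-band normalization with cloudy pixels excluded.

```python
import numpy as np

def band_stats(images, masks):
    """Per-band mean and standard deviation over all valid pixels in the dataset.

    images: list of (C, H, W) arrays; masks: list of (H, W) booleans, True = cloud/invalid.
    """
    valid = [img[:, ~m] for img, m in zip(images, masks)]  # each (C, n_valid)
    pixels = np.concatenate(valid, axis=1)
    mean = pixels.mean(axis=1)
    std = pixels.std(axis=1)
    return mean, np.where(std == 0, 1.0, std)               # guard constant bands

def normalize(image, mask, mean, std):
    """Normalize one image with the shared statistics; masked pixels become NaN."""
    out = (image - mean[:, None, None]) / std[:, None, None]
    out[:, mask] = np.nan  # never label or train on cloudy pixels
    return out

rng = np.random.default_rng(0)
images = [rng.random((4, 8, 8)) for _ in range(3)]         # 3 scenes, 4 bands each
masks = [np.zeros((8, 8), dtype=bool) for _ in range(3)]
masks[0][:2, :] = True                                     # pretend the top rows are cloudy
mean, std = band_stats(images, masks)
norm = [normalize(img, m, mean, std) for img, m in zip(images, masks)]
```

Storing `mean` and `std` with the dataset lets new imagery be normalized exactly like the training data.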

Alignment and Registration Issues

Different bands may be slightly offset due to sensor misalignment. Without co-registration, labeling objects across bands becomes imprecise—especially problematic for pixel-level tasks like segmentation.
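Phase correlation is a standard way to estimate a translation between two bands. The sketch below is a minimal version under three assumptions:

- The bands share the same pixel grid and size.
- The offset is a whole number of pixels.
- The offset can be treated as circular (wrap-around).

Real bands differ in brightness, so the correlation peak will be weaker than in this synthetic example. Libraries such as scikit-image provide a sub-pixel implementation in `skimage.registration.phase_cross_correlation`. Bands with different native resolutions must be resampled first.

```python
import numpy as np

def estimate_shift(reference: np.ndarray, moving: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (dy, dx) roll that aligns `moving` with `reference`
    using phase correlation."""
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moving)
    cross_power = f_ref * np.conj(f_mov)
    cross_power /= np.abs(cross_power) + 1e-12     # keep phase information only
    corr = np.fft.ifft2(cross_power).real
    peak = np.array(np.unravel_index(np.argmax(corr), corr.shape))
    shape = np.array(corr.shape)
    # Peaks past the midpoint correspond to negative shifts (FFT wrap-around).
    wrap = peak > shape // 2
    peak[wrap] -= shape[wrap]
    return int(peak[0]), int(peak[1])

rng = np.random.default_rng(42)
red = rng.random((64, 64))
nir = np.roll(red, shift=(3, -5), axis=(0, 1))     # simulated misregistered band
dy, dx = estimate_shift(red, nir)
aligned = np.roll(nir, shift=(dy, dx), axis=(0, 1))
print(dy, dx)  # -3 5
```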

Higher Annotation Cost

Multispectral annotation typically requires:

- Specialist annotators or domain experts (e.g., agronomists)

- Pre-annotated indices to aid decisions

- Greater annotation time per image

As a result, the cost-per-label is higher compared to RGB annotation projects.

Cross-Referencing RGB and Multispectral Labels 📊

One promising strategy is to annotate in RGB and cross-reference multispectral data for added validation. This approach works particularly well in workflows such as:

- Dual-stage pipelines: where initial annotation is done in RGB, and multispectral is used to verify or refine results.

- Feature-level fusion: where AI models learn features from both RGB and multispectral annotations.

- Temporal analysis: where the same object is tracked across time using both spectral types.

However, this requires robust dataset synchronization. The RGB and multispectral imagery must share the same coordinate reference system, pixel grid, and extent, or be resampled onto a common grid before labels are transferred between them.
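Before reusing labels across RGB and multispectral rasters, it helps to check automatically that both sit on the same grid. `RasterGrid` below is a hypothetical, simplified structure that assumes square, north-up pixels. In practice its values would come from each file's georeferencing metadata, such as a GeoTIFF's CRS and geotransform.

```python
import math
from dataclasses import dataclass

@dataclass
class RasterGrid:
    """Minimal georeferencing metadata (hypothetical structure, square north-up pixels)."""
    crs: str            # e.g. "EPSG:32633"
    origin_x: float     # map x of the top-left corner
    origin_y: float     # map y of the top-left corner
    pixel_size: float   # ground sampling distance in CRS units
    width: int
    height: int

def grid_mismatches(a: RasterGrid, b: RasterGrid, tol: float = 1e-6) -> list[str]:
    """List the reasons two rasters cannot share pixel-level labels; empty means aligned."""
    problems = []
    if a.crs != b.crs:
        problems.append(f"CRS differs: {a.crs} vs {b.crs}")
    if not math.isclose(a.pixel_size, b.pixel_size, abs_tol=tol):
        problems.append(f"pixel size differs: {a.pixel_size} vs {b.pixel_size}")
    if not (math.isclose(a.origin_x, b.origin_x, abs_tol=tol)
            and math.isclose(a.origin_y, b.origin_y, abs_tol=tol)):
        problems.append("origins differ")
    if (a.width, a.height) != (b.width, b.height):
        problems.append(f"shape differs: {(a.width, a.height)} vs {(b.width, b.height)}")
    return problems

rgb_grid = RasterGrid("EPSG:32633", 500000.0, 4600000.0, 10.0, 1024, 1024)
ms_grid = RasterGrid("EPSG:32633", 500000.0, 4600000.0, 20.0, 512, 512)
print(grid_mismatches(rgb_grid, ms_grid))  # pixel size and shape differ
```

A mismatch is not necessarily fatal. It signals that one raster must be resampled before masks can be shared pixel for pixel.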

Effective Labeling Techniques for Multispectral Imagery 🛠️

To annotate multispectral data efficiently, consider the following strategies:

Use Composite Image Visualizations

False-color composites can reveal hidden features. Annotators should be trained on:

- How vegetation appears in NIR

- How urban surfaces reflect SWIR

- How shadows distort thermal bands

Providing side-by-side views of RGB and multispectral bands helps bridge interpretation gaps.

Integrate Domain Expertise

For multispectral projects, collaboration with experts is crucial:

- Ecologists for land cover classification

- Agronomists for crop health annotation

- Hydrologists for water body labeling

This hybrid approach ensures that labels are not only visually consistent but scientifically valid.

Employ Multi-modal Annotation Interfaces

Annotation platforms such as Encord and CVAT offer, to varying degrees, features for:

- Viewing multiple bands simultaneously

- Switching between false-color combinations

- Synchronizing annotation masks across channels

This avoids annotation silos and enhances consistency.

Optimizing AI Models with Spectrally Annotated Datasets 🤖

Annotated multispectral imagery is a goldmine for model training—if used correctly.

Spectral Feature Engineering

Preprocessing multispectral bands into indices like:

- NDVI (Normalized Difference Vegetation Index)

- NDWI (Normalized Difference Water Index)

- NDBI (Normalized Difference Built-up Index)

...can improve AI model performance, especially for classification and segmentation tasks.
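All three indices are normalized differences of two bands:

- NDVI = (NIR − Red) / (NIR + Red)
- NDWI (McFeeters) = (Green − NIR) / (Green + NIR)
- NDBI = (SWIR − NIR) / (SWIR + NIR)

The sketch below stacks them as extra channels next to the raw bands. It assumes co-registered reflectance arrays passed in a dict with hypothetical keys `"blue"`, `"green"`, `"red"`, `"nir"` and `"swir"`, where `"swir"` is a shortwave band such as Sentinel-2 B11.

```python
import numpy as np

def normalized_difference(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """(a - b) / (a + b), with 0 where the denominator is 0."""
    a = a.astype(np.float64)
    b = b.astype(np.float64)
    denom = a + b
    return np.divide(a - b, denom, out=np.zeros_like(denom), where=denom != 0)

def add_index_channels(bands: dict[str, np.ndarray]) -> np.ndarray:
    """Stack raw bands plus NDVI, NDWI and NDBI into one (C, H, W) feature array."""
    ndvi = normalized_difference(bands["nir"], bands["red"])    # vegetation vigor
    ndwi = normalized_difference(bands["green"], bands["nir"])  # open water
    ndbi = normalized_difference(bands["swir"], bands["nir"])   # built-up surfaces
    raw = [bands[k] for k in ("blue", "green", "red", "nir", "swir")]
    return np.stack(raw + [ndvi, ndwi, ndbi])

rng = np.random.default_rng(1)
bands = {k: rng.random((16, 16)) * 0.5 + 0.01 for k in ("blue", "green", "red", "nir", "swir")}
features = add_index_channels(bands)
print(features.shape)  # (8, 16, 16)
```

Because the indices are bounded in [-1, 1] for non-negative reflectance, they sit on a comparable scale regardless of sensor gain. This is one reason they often help models.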

Training Hybrid Models

Combining RGB and multispectral channels as input into CNNs or transformers can enable:

- Higher accuracy

- Greater generalization

- Cross-domain learning

Multispectral bands, when embedded into models as auxiliary data, can improve robustness to weather changes, occlusion, or seasonal variation.
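A common practical hurdle is that pretrained CNNs expect exactly three input channels. One widely used heuristic is to widen the first convolution layer and initialize the new input channels with the mean of the pretrained RGB filters.

The sketch below shows this on plain NumPy arrays, assuming a PyTorch-style weight layout of (out_channels, in_channels, kH, kW). The random "pretrained" weights are a stand-in. In a real framework, the result would be copied into the model's first layer.

```python
import numpy as np

def expand_input_channels(weights: np.ndarray, n_extra: int) -> np.ndarray:
    """Widen first-layer conv weights from 3 (RGB) to 3 + n_extra input channels.

    New channels start as the mean of the RGB filters, so the pretrained RGB
    filters are kept unchanged and the extra bands start from a plausible response.
    """
    if weights.shape[1] != 3:
        raise ValueError("expected RGB weights with 3 input channels")
    mean_filter = weights.mean(axis=1, keepdims=True)   # (out, 1, kH, kW)
    extra = np.repeat(mean_filter, n_extra, axis=1)     # (out, n_extra, kH, kW)
    return np.concatenate([weights, extra], axis=1)

rgb_weights = np.random.default_rng(0).normal(size=(64, 3, 7, 7))  # stand-in for pretrained weights
w = expand_input_channels(rgb_weights, n_extra=5)                  # e.g. five extra spectral bands
print(w.shape)  # (64, 8, 7, 7)
```

Alternatives include zero-initializing the new channels or rescaling all first-layer weights to keep activation magnitudes similar. Which works best is an empirical question for each dataset.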

Augmentation for Spectral Data

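Geometric augmentations (flips, rotations) are safe for multispectral imagery only if they are applied identically to every band and to the label mask. Photometric augmentations designed for RGB, such as per-channel color or hue jitter, can distort the spectral relationships that indices and models rely on. Use spectrally aware augmentation pipelines that preserve band alignment and band ratios.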
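The key property is that one random draw drives the transform for all bands and the mask together. The sketch below is a minimal illustration, not a full pipeline. It assumes images in (bands, height, width) layout with a matching (height, width) label mask, and it uses only 90-degree rotations and horizontal flips.

```python
import numpy as np

def augment_pair(image: np.ndarray, mask: np.ndarray, rng: np.random.Generator):
    """Apply the same random 90-degree rotation / flip to every band and to the mask.

    image: (C, H, W) multispectral array; mask: (H, W) label array.
    Only pixel positions change; each pixel's spectral vector is left untouched.
    """
    k = int(rng.integers(0, 4))              # number of 90-degree rotations
    image = np.rot90(image, k, axes=(1, 2))  # rotate spatial axes only
    mask = np.rot90(mask, k, axes=(0, 1))
    if rng.random() < 0.5:
        image = image[:, :, ::-1]            # horizontal flip, all bands at once
        mask = mask[:, ::-1]
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)

rng = np.random.default_rng(0)
image = rng.random((4, 8, 8))                # 4 bands
mask = (image[0] > 0.5).astype(np.uint8)
aug_image, aug_mask = augment_pair(image, mask, rng)
```

If brightness augmentation is wanted, multiplying all bands by the same factor leaves normalized-difference indices such as NDVI unchanged. Additive offsets or per-band factors do not.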

Real-World Use Cases That Showcase the Power of Multispectral Annotation 🚀

Multispectral vs. RGB annotation isn’t just theory—it’s playing out in real AI pipelines around the globe. Here’s how industry leaders, governments, and research teams are leveraging each strategy to solve urgent real-world problems:

🌾 Precision Agriculture: Diagnosing Crop Health Before It’s Visible

Companies like Sentera and SlantRange specialize in crop scouting platforms that combine drone and satellite imagery with advanced multispectral annotation. By labeling NIR and red-edge responses, they train AI models to:

- Detect nitrogen deficiencies

- Identify pest outbreaks

- Predict yield outcomes

This empowers farmers to intervene early, optimizing resource use and reducing crop loss. Without annotated multispectral data, many of these issues would only become visible when it’s too late.

Annotation tip: Crop boundaries are often annotated in RGB first, while stress zones are segmented using NIR-enhanced composites.
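This two-step workflow can be partly automated. Once a field boundary exists from RGB annotation, low-NDVI outliers inside it can be proposed as candidate stress zones for an annotator to confirm or reject.

The sketch below is illustrative and makes three assumptions:

- NIR and red are co-registered reflectance arrays.
- The field boundary is a boolean mask.
- The outlier rule "more than k median absolute deviations below the field median" uses a hypothetical default of k = 3.

```python
import numpy as np

def candidate_stress_zones(nir, red, field_mask, k=3.0):
    """Flag field pixels whose NDVI is unusually low relative to the rest of the field.

    field_mask: boolean (H, W) crop boundary drawn in RGB.
    Returns a boolean mask of candidates for human review, not final labels.
    """
    nir = nir.astype(np.float64)
    red = red.astype(np.float64)
    denom = nir + red
    ndvi = np.divide(nir - red, denom, out=np.zeros_like(denom), where=denom != 0)
    inside = ndvi[field_mask]
    median = np.median(inside)
    mad = np.median(np.abs(inside - median))   # robust spread estimate
    cutoff = median - k * mad
    return field_mask & (ndvi < cutoff)

nir = np.full((10, 10), 0.5)
red = np.full((10, 10), 0.05)
nir[2:4, 2:4], red[2:4, 2:4] = 0.3, 0.2        # simulated stressed patch
field = np.zeros((10, 10), dtype=bool)
field[1:9, 1:9] = True                         # RGB-drawn field boundary
zones = candidate_stress_zones(nir, red, field)
print(zones.sum())  # 4
```

Using field-relative statistics rather than a fixed NDVI cutoff keeps the rule usable across crops and growth stages. Agronomists should still validate what counts as stress.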

🌍 Climate Monitoring: Mapping Deforestation and Land Degradation

Organizations like Global Forest Watch rely on satellite data to detect illegal deforestation and monitor conservation areas. RGB imagery helps identify logging roads and canopy loss. Meanwhile, multispectral annotations of vegetation indices (e.g., NDVI drop-offs) allow AI systems to:

- Quantify forest health

- Detect early signs of desertification

- Classify land use transitions (e.g., forest to agriculture)

In the Sahel region of Africa, multispectral annotation supports early, proactive action against desert encroachment.

Annotation tip: Annotate at seasonal intervals to capture land change dynamics over time.
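Seasonal annotation rounds can be prioritized by flagging where NDVI dropped sharply between two acquisitions. The sketch below makes four assumptions:

- The two dates are co-registered.
- Each date is supplied as a dict with `"nir"` and `"red"` arrays.
- The drop threshold of 0.2 is hypothetical.
- Comparing the same season across years is preferable, so that normal crop or leaf phenology is not mistaken for loss.

```python
import numpy as np

def ndvi(nir: np.ndarray, red: np.ndarray) -> np.ndarray:
    """NDVI = (NIR - Red) / (NIR + Red), with 0 where the denominator is 0."""
    nir = nir.astype(np.float64)
    red = red.astype(np.float64)
    denom = nir + red
    return np.divide(nir - red, denom, out=np.zeros_like(denom), where=denom != 0)

def ndvi_drop_mask(before: dict, after: dict, min_drop: float = 0.2) -> np.ndarray:
    """Pixels whose NDVI fell by at least `min_drop` between two acquisitions."""
    change = ndvi(after["nir"], after["red"]) - ndvi(before["nir"], before["red"])
    return change <= -min_drop

before = {"nir": np.full((3, 3), 0.5), "red": np.full((3, 3), 0.05)}
after = {"nir": before["nir"].copy(), "red": before["red"].copy()}
after["nir"][0, 0], after["red"][0, 0] = 0.2, 0.15   # simulated canopy loss
mask = ndvi_drop_mask(before, after)
print(mask.sum())  # 1
```

Flagged pixels are candidates for annotators to label as clearing, degradation, or false alarms such as cloud residue.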

🚨 Disaster Response: Detecting Flooded Areas and Burn Scars

During natural disasters, time is critical. Programs such as the NASA Disasters Program use multispectral imagery to:

- Detect heat anomalies from wildfires (using thermal IR)

- Map flooding and water expansion (using NIR/SWIR bands)

- Estimate affected zones and infrastructure risk

These datasets are annotated in near real-time and fed into AI systems that help emergency teams prioritize evacuation routes, aid drops, and containment measures.

RGB alone often underestimates damage, especially when smoke obscures the ground or standing water is hard to distinguish from wet soil and shadow.

🛣️ Urban Development: Tracking Sprawl, Zoning, and Heat Islands

Urban planners in cities like Dubai and Singapore are leveraging annotated satellite datasets to monitor construction progress, analyze traffic patterns, and measure urban heat island effects. Here’s how they combine both annotation strategies:

- RGB for identifying new roads, housing units, and infrastructure.

- Multispectral for measuring surface temperatures, vegetation loss, and impervious surfaces.

Multispectral labels have helped identify neighborhoods most vulnerable to heat waves, guiding green space planning and public health interventions.

🛰️ Intelligence and Security: Detecting Camouflage and Hidden Assets

Defense organizations and security contractors rely on annotated multispectral data to enhance aerial reconnaissance. SWIR bands can distinguish synthetic materials (like tarps or tents) from natural foliage, while thermal IR helps detect body heat or engine activity—critical for locating camouflaged units or underground bunkers.

Labeling these features is sensitive and highly technical, often requiring:

- Multi-band comparison annotations

- Confidence scoring for low-visibility detections

- Mask overlays for obscured targets

AI models trained on such data are used for both real-time surveillance and historical pattern detection.

🚜 Insurance and Land Claims: Verifying Crop and Property Damage

Insurance tech platforms like CROPINSURE annotate both RGB and multispectral images to validate claims after droughts, hail, or pest infestations. NIR annotations help confirm vegetation loss, while RGB annotations show visible structural damage.

By integrating both, insurers can automate:

- Claim triage

- Fraud detection

- Payout estimations

This reduces settlement time and improves customer trust.

🌊 Coastal and Water Management: Tracking Erosion and Algal Blooms

For coastal cities and environmental NGOs, multispectral annotations of chlorophyll, turbidity, and sediment allow AI models to:

- Track shoreline erosion

- Monitor algal blooms

- Map marine protected zones

RGB images offer visual clarity, but it’s the multispectral bands that hold the keys to water composition and pollution signals.

These use cases demonstrate a central truth: the choice of annotation strategy directly impacts AI outcomes. RGB may be fast and easy, but multispectral annotation adds layers of meaning—especially in domains where nuance and foresight are non-negotiable.

Best Practices to Scale Multispectral Annotation Projects 🚧

- Start with RGB for base annotations, then refine using multispectral overlays.

- Choose platforms that support band toggling, composite views, and co-registered layers.

- Document label definitions clearly, especially when annotating by spectral features (e.g., NDVI thresholds for healthy vegetation).

- Validate labels with domain experts before using them for training.

- Don’t skip preprocessing—alignment, calibration, and normalization are non-negotiable.

- Monitor inter-annotator agreement, especially for bands not visually intuitive.

- Log false-color combinations used during annotation for reproducibility.
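Inter-annotator agreement is often tracked with Cohen's kappa. Kappa corrects raw pixel agreement for the agreement two annotators would reach by chance. The sketch below assumes both annotators labeled the same image into integer class maps of identical shape.

```python
import numpy as np

def cohens_kappa(labels_a: np.ndarray, labels_b: np.ndarray) -> float:
    """Cohen's kappa between two annotators' label maps of the same image."""
    a = np.asarray(labels_a).ravel()
    b = np.asarray(labels_b).ravel()
    if a.shape != b.shape:
        raise ValueError("label maps must have the same shape")
    observed = np.mean(a == b)
    classes = np.union1d(a, b)
    # Chance agreement: probability that both annotators independently pick the same class.
    expected = sum(np.mean(a == c) * np.mean(b == c) for c in classes)
    if expected == 1.0:  # both used a single identical class everywhere
        return 1.0 if observed == 1.0 else 0.0
    return float((observed - expected) / (1.0 - expected))

a = np.array([[0, 0], [1, 1]])
b = np.array([[0, 1], [1, 1]])
print(cohens_kappa(a, b))  # 0.5
```

Computing kappa separately for images annotated on different false-color composites can show which views cause the most disagreement.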

Let’s Bring Your Satellite AI Projects to Life 🌍

Multispectral and RGB annotation each bring unique value to satellite imagery analysis. While RGB offers simplicity and speed, multispectral annotation unlocks scientific precision and hidden insights. The most effective strategies often blend both—leveraging human intuition alongside spectral depth.

If you're building AI solutions for agriculture, environmental protection, or geospatial intelligence, having the right annotation strategy isn't optional—it's foundational. Your models are only as good as the data they learn from.

👉 Ready to elevate your satellite image annotations?

Let’s talk about how DataVLab can power your AI projects with expert labeling across RGB and multispectral datasets. Whether you're training for land cover classification or crop forecasting, we're here to help you annotate smarter, faster, and more accurately.

Explore our services or reach out to us directly.