Understanding the Role of Segmentation in Computer Vision

Image segmentation is the process of partitioning an image into meaningful regions so that a machine learning model can understand what each part represents. Semantic segmentation, a common variant, does this by assigning a class label to every pixel. In practice, segmentation is the set of operations that converts unstructured visual data into consistent, analyzable segments. These segments can represent organs, tissues, machines, vehicles, defects, agricultural crops, or any other object of interest, depending on the application domain. Segmentation enables downstream tasks such as measurement, classification, anomaly detection, tracking, and clinical interpretation. Without reliable segmentation, computer vision models struggle to interpret complex scenes or anatomical structures consistently.

Why Segmentation Matters in AI

High-quality segmentation is essential because it defines the boundaries and shapes that AI models rely on to learn patterns. In medical imaging, accurately segmenting organs or lesions can influence diagnostic outcomes and treatment planning. In robotics or manufacturing, segmentation helps systems localize components, detect defects, or understand object geometry. In research environments, segmentation supports scientific quantification and the development of new algorithms. When segmentation quality is poor, model performance degrades, increasing the risk of errors, bias, or unreliable predictions. This makes segmentation a foundation of trustworthy AI, particularly in regulated or safety-critical environments where consistency and transparency matter.

How Image Segmentation Works Inside an AI Pipeline

Preparing the Image for Segmentation

Before segmentation models can operate, images must be prepared carefully to ensure consistency and reduce noise. This stage typically includes normalization, resizing, channel adjustment, and occasionally metadata extraction. Imaging systems generate data in a wide variety of formats and resolutions, so preprocessing is essential to create a standardized input domain for the model. In medical settings, radiologists, imaging physicists, and AI engineers collaborate closely because factors like contrast phases, slice thickness, and acquisition protocols can significantly affect how segmentation behaves. The same concern applies in industrial and scientific contexts: changes in lighting, lens distortion, or sensor noise can influence model outputs.
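As a rough illustration of two of these steps, the sketch below resizes a toy grayscale image to a fixed shape and rescales its intensities to the range 0 to 1. It uses plain Python lists so it runs without dependencies; a real pipeline would normally rely on an array library. The function names and the target size are assumptions made for this example, not part of any particular toolkit.

```python
def min_max_normalize(image):
    """Rescale pixel values to [0, 1]; a constant image maps to all zeros."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in image]
    span = hi - lo
    return [[(v - lo) / span for v in row] for row in image]


def resize_nearest(image, out_h, out_w):
    """Resize a 2D image with nearest-neighbor sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def preprocess(image, size=(4, 4)):
    """Resize to a fixed shape, then normalize intensities."""
    return min_max_normalize(resize_nearest(image, *size))


raw = [[0, 50], [100, 200]]          # toy 2x2 grayscale image
prepared = preprocess(raw, size=(4, 4))
# prepared is a 4x4 grid with values between 0.0 and 1.0
```

Nearest-neighbor sampling is chosen here only because it is short to write; interpolation choice is itself a preprocessing decision that can affect results.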

How Features Are Represented in Images

Segmentation models work by analyzing pixel-level or voxel-level patterns. Each pixel or voxel carries a value representing color or intensity. In MRI, CT, ultrasound, or microscopy, these intensities correspond to underlying biological or physical properties. The model must learn how combinations of intensities, textures, shapes, and spatial relationships correspond to meaningful regions. Engineers often use data augmentation to help the model generalize to variations in lighting, orientation, or noise. Feature representation is central to how image segmentation works, because the model learns to separate regions based on statistical patterns rather than explicit rules.
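One practical detail of augmentation for segmentation is that geometric changes must be applied to the image and its mask together, while intensity changes apply to the image only. The sketch below shows that idea with random flips and Gaussian noise on small list-based grids. The helper names and the 50% flip probability are illustrative assumptions.

```python
import random


def hflip(grid):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in grid]


def vflip(grid):
    """Mirror a 2D grid top to bottom."""
    return grid[::-1]


def augment_pair(image, mask, rng):
    """Apply the same random flips to image and mask so labels stay aligned."""
    if rng.random() < 0.5:
        image, mask = hflip(image), hflip(mask)
    if rng.random() < 0.5:
        image, mask = vflip(image), vflip(mask)
    return image, mask


def add_noise(image, rng, sigma=0.05):
    """Intensity-only augmentation: jitter the image, leave the mask untouched."""
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in image]


rng = random.Random(0)
image = [[1, 2], [3, 4]]
mask = [[1, 0], [0, 0]]               # the pixel with value 1 is foreground
aug_image, aug_mask = augment_pair(image, mask, rng)
noisy_image = add_noise(aug_image, rng)
```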

Classical Segmentation Approaches

Thresholding Methods

Thresholding is one of the earliest methods used to segment regions based on pixel intensity. It works by selecting a cutoff value that separates different parts of an image. While simple, thresholding provides insight into the basic logical structure behind segmentation: identify differences, define boundaries, and assign labels. However, thresholding often fails on complex clinical images, natural scenes, or multi-object environments because intensities vary widely and often overlap. Engineers may still use thresholding for pre-segmentation steps, such as separating foreground from background or isolating bright regions in microscopy.
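To make the idea of "selecting a cutoff value" concrete, here is a minimal sketch that picks the cutoff automatically with Otsu's method, one well-known way of choosing a threshold, and then labels each pixel. It assumes integer intensities between 0 and 255 and uses plain lists; it is an illustration rather than a production implementation.

```python
def otsu_threshold(image, levels=256):
    """Pick the cutoff that maximizes between-class variance (Otsu's method).

    Assumes integer intensities in the range [0, levels).
    """
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * n for i, n in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg = 0        # number of pixels with value <= t
    sum_bg = 0      # sum of their intensities
    for t in range(levels):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        w_fg = total - w_bg
        if w_bg == 0 or w_fg == 0:
            continue  # one class is empty, so there is nothing to separate
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def apply_threshold(image, t):
    """Label pixels above the cutoff as foreground (1), the rest as background (0)."""
    return [[1 if v > t else 0 for v in row] for row in image]


image = [
    [10, 12, 11, 200],
    [9, 13, 210, 205],
    [11, 10, 12, 198],
]
t = otsu_threshold(image)
mask = apply_threshold(image, t)
# mask == [[0, 0, 0, 1], [0, 0, 1, 1], [0, 0, 0, 1]]
```

The toy image has two well-separated intensity clusters, which is exactly the situation where a single global cutoff works. When the clusters overlap, as described above, no single value of `t` separates them cleanly.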

Region-Based Methods

Region-growing, watershed techniques, and connectivity-based approaches attempt to divide images into cohesive areas based on similarity criteria. These methods were foundational in early computer vision research and are still used for specific tasks. Region-based methods help explain how image segmentation works in terms of spatial coherence: nearby pixels that look similar are likely part of the same structure. Although deep learning has largely replaced these approaches for complex tasks, their logic continues to influence modern algorithm design, particularly in hybrid workflows.
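The spatial-coherence idea can be sketched with a basic region-growing routine: start from a seed pixel and keep adding 4-connected neighbors whose intensity is close to the seed's. The similarity rule (absolute difference from the seed value) and the `tolerance` parameter are assumptions for this example; practical variants use other criteria.

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Grow a region from `seed`, adding 4-connected neighbors whose
    intensity is within `tolerance` of the seed intensity."""
    h, w = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    mask = [[0] * w for _ in range(h)]
    mask[seed[0]][seed[1]] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not mask[nr][nc]:
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    mask[nr][nc] = 1
                    queue.append((nr, nc))
    return mask


image = [
    [10, 11, 50, 12],
    [12, 10, 52, 11],
    [11, 13, 51, 10],
]
mask = region_grow(image, seed=(0, 0), tolerance=5)
# mask == [[1, 1, 0, 0], [1, 1, 0, 0], [1, 1, 0, 0]]
```

Note that the right-hand column has intensities similar to the seed but is not included, because the bright column in between breaks connectivity. That is the difference between a region-based method and a plain intensity threshold.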

Edge-Based Segmentation

Before the rise of convolutional neural networks, many segmentation tasks relied on detecting edges that indicate boundaries between regions. Operators such as Sobel measure local intensity gradients, and detectors such as Canny build on those gradients to produce thin, connected edges that can be assembled into contours. These contours can help outline objects or anatomical structures. Although edge detection is often insufficient for high-quality segmentation on its own, it remains relevant in preprocessing steps and clarifies how boundaries emerge in images.
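As a small illustration of gradient-based edge detection, the sketch below applies the standard 3x3 Sobel kernels to a toy image and marks pixels whose gradient magnitude exceeds a cutoff. Leaving border pixels at zero and the specific cutoff value are simplifying assumptions for this example.

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(image):
    """Gradient magnitude at interior pixels; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    v = image[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * v
                    gy += SOBEL_Y[i][j] * v
            out[r][c] = math.hypot(gx, gy)
    return out


def edge_map(image, cutoff):
    """Mark pixels whose gradient magnitude exceeds `cutoff`."""
    return [[1 if g > cutoff else 0 for g in row] for row in sobel_magnitude(image)]


# Toy image with a vertical step edge between columns 1 and 2.
image = [[0, 0, 100, 100] for _ in range(4)]
edges = edge_map(image, cutoff=100)
# edges == [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
```

The response is two pixels wide because both columns next to the step see a strong gradient. Thinning such responses into single-pixel edges is one of the things a fuller detector adds on top of the raw gradient.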

Deep Learning Segmentation: How Modern AI Models Work

Convolutional Neural Networks for Segmentation

Deep learning transformed segmentation through convolutional neural networks (CNNs), which automatically learn hierarchical representations of images. CNNs capture edges, textures, shapes, and semantic meaning without hand-crafted rules. Understanding how image segmentation works today requires understanding how CNNs extract low-level features in early layers and progressively build higher-level concepts in deeper layers. CNNs made it possible to differentiate visually similar regions with far greater accuracy than classical methods, especially in high-resolution medical imaging or complex scenes.

Fully Convolutional Networks (FCNs)

FCNs were a major breakthrough because they generate dense pixel predictions. Instead of outputting a single class label for an entire image, FCNs produce a mask that assigns a label to each pixel. Because the architecture uses only convolutional layers, with no fixed-size fully connected layers, it can process images of variable sizes and makes segmentation feasible at scale. FCNs rely on an encoder stage that compresses information and a decoder stage that reconstructs detailed segmentation maps. This encoder-decoder pattern remains the foundation for many modern architectures.
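The final step of dense prediction can be shown without a neural network: given one score map per class, the label for each pixel is the class with the highest score at that location. The sketch below assumes scores laid out as `[classes][height][width]` and uses hand-written numbers in place of real network output.

```python
def scores_to_mask(scores):
    """Turn per-pixel class scores of shape [classes][height][width]
    into a label mask of shape [height][width] via per-pixel argmax."""
    n_classes = len(scores)
    h, w = len(scores[0]), len(scores[0][0])
    return [
        [max(range(n_classes), key=lambda k: scores[k][r][c]) for c in range(w)]
        for r in range(h)
    ]


# Hand-written scores standing in for the output of a network's last layer.
scores = [
    [[2.0, 0.1], [0.3, 0.2]],   # class 0
    [[0.5, 1.5], [0.1, 0.4]],   # class 1
    [[0.1, 0.2], [0.9, 0.3]],   # class 2
]
mask = scores_to_mask(scores)
# mask == [[0, 1], [2, 1]]
```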

U-Net and Its Variants

U-Net is one of the most influential segmentation architectures, originally developed for biomedical image segmentation. Its symmetric encoder-decoder design uses skip connections that pass high-resolution feature maps from early layers directly to the decoder, improving localization accuracy. Many state-of-the-art models still use U-Net principles because they address common challenges in segmentation: small datasets, limited annotations, and precise boundary delineation. Variations like ResUNet, Attention U-Net, and nnU-Net extend these concepts with residual blocks, attention gates, or automated configuration of the architecture and training pipeline.
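The data flow of a skip connection can be sketched in a few lines: coarse decoder feature maps are upsampled to the encoder's resolution and stacked with the encoder's feature maps along the channel axis. This example only shows that wiring on list-based feature maps. It assumes nearest-neighbor upsampling for brevity, whereas real networks typically use learned upsampling and follow the concatenation with further convolutions.

```python
def upsample2x(feature):
    """Nearest-neighbor 2x upsampling of one [height][width] feature map."""
    out = []
    for row in feature:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def skip_concat(encoder_maps, decoder_maps):
    """Upsample coarse decoder maps and stack them with the encoder maps
    along the channel axis, as a U-Net style skip connection does."""
    upsampled = [upsample2x(m) for m in decoder_maps]
    h, w = len(encoder_maps[0]), len(encoder_maps[0][0])
    for m in upsampled:
        if len(m) != h or len(m[0]) != w:
            raise ValueError("decoder maps must be half the encoder resolution")
    return list(encoder_maps) + upsampled


encoder_maps = [[[1] * 4 for _ in range(4)]]          # 1 channel, 4x4 (fine detail)
decoder_maps = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]   # 2 channels, 2x2 (coarse context)
fused = skip_concat(encoder_maps, decoder_maps)
# fused has 3 channels, each 4x4
```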

How Segmentation Models Learn

Training With Labeled Masks

Training a segmentation model requires datasets with pixel-level masks that define the correct regions. Annotators and clinical reviewers play a crucial role in producing these masks, which form the ground truth for learning. During training, the model compares its predicted masks with the true masks and adjusts its weights to reduce error. The process is iterative and is driven by a loss function. Cross-entropy is a common default, while Dice loss and focal loss are often chosen to cope with class imbalance or small anatomical structures. High-quality labels are essential because segmentation models are sensitive to label noise.
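To see why the choice of loss matters under class imbalance, the sketch below computes a soft Dice loss and a mean binary cross-entropy for a mask with a single foreground pixel. The formulas are the standard binary forms; the smoothing constants and the toy prediction are assumptions for illustration, and a real training loop would compute these on tensors with automatic differentiation.

```python
import math


def soft_dice_loss(pred, target, eps=1e-6):
    """1 minus the Dice overlap between predicted probabilities and a binary mask."""
    p = [v for row in pred for v in row]
    t = [v for row in target for v in row]
    intersection = sum(pi * ti for pi, ti in zip(p, t))
    return 1.0 - (2.0 * intersection + eps) / (sum(p) + sum(t) + eps)


def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean per-pixel cross-entropy; probabilities are clipped away from 0 and 1."""
    p = [min(max(v, eps), 1.0 - eps) for row in pred for v in row]
    t = [v for row in target for v in row]
    total = sum(ti * math.log(pi) + (1 - ti) * math.log(1 - pi)
                for pi, ti in zip(p, t))
    return -total / len(p)


# One foreground pixel out of 16: a heavily imbalanced target.
target = [[0, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
# A prediction that calls everything background with high confidence.
all_background = [[0.01] * 4 for _ in range(4)]

bce = binary_cross_entropy(all_background, target)
dice = soft_dice_loss(all_background, target)
# bce stays small because 15 of 16 pixels are "right";
# dice is close to 1 because the foreground is missed entirely.
```

This is the intuition behind using overlap-based losses for small structures: the per-pixel average can look acceptable even when the structure of interest is missed.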

Improving Model Generalization

To prevent overfitting, engineers use techniques such as augmentation, regularization, and careful sampling. In clinical imaging, cross-center variability is a major concern because scanners, protocols, and patient populations vary across institutions. This means that a model trained in one hospital may not generalize to another. Researchers use domain adaptation techniques, harmonization, and multi-site datasets to improve robustness. In industrial settings, lighting variations and production differences create similar challenges.

Evaluating Segmentation Performance

Evaluation involves quantitative metrics such as the Dice Similarity Coefficient, the Jaccard Index, and the Hausdorff Distance. Dice and Jaccard measure region overlap between the predicted and reference masks, while the Hausdorff Distance measures the worst-case boundary deviation. Qualitative evaluation by domain experts is equally important because certain segmentation errors have clinical or operational consequences. Understanding how image segmentation works also requires knowing how results are validated and how feedback loops guide improvement during model development.
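The three metrics named above can be computed directly on small binary masks. The sketch below is a brute-force version for illustration: it measures the Hausdorff distance over all foreground pixels in pixel units, whereas evaluation toolkits often work on extracted surfaces and physical spacing. The function names are chosen for this example.

```python
import math


def _foreground(mask):
    """Set of (row, col) coordinates where the mask is non-zero."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}


def dice(a, b):
    """Dice Similarity Coefficient: 2|A∩B| / (|A| + |B|)."""
    fa, fb = _foreground(a), _foreground(b)
    if not fa and not fb:
        return 1.0
    return 2 * len(fa & fb) / (len(fa) + len(fb))


def jaccard(a, b):
    """Jaccard Index (intersection over union): |A∩B| / |A∪B|."""
    fa, fb = _foreground(a), _foreground(b)
    union = fa | fb
    return len(fa & fb) / len(union) if union else 1.0


def hausdorff(a, b):
    """Symmetric Hausdorff distance between foreground pixel sets, in pixels."""
    fa, fb = _foreground(a), _foreground(b)
    if not fa or not fb:
        raise ValueError("both masks need at least one foreground pixel")

    def directed(xs, ys):
        return max(min(math.dist(x, y) for y in ys) for x in xs)

    return max(directed(fa, fb), directed(fb, fa))


truth = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0]]
pred = [[0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]   # same shape, shifted right by one
# dice(truth, pred) == 0.5, jaccard(truth, pred) == 1/3, hausdorff(truth, pred) == 1.0
```

A one-pixel shift halves the Dice score here while the Hausdorff distance stays at one pixel, which shows why overlap and boundary metrics are usually reported together.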

How Image Segmentation Works in Practice

From Raw Input to Segmentation Mask

The entire segmentation pipeline typically follows these stages:

- Acquire the raw image.

- Preprocess and normalize it.

- Feed it into a trained model.

- Generate a segmentation mask.

- Refine the mask if needed.

- Use the segmentation output in downstream applications.

Each stage can involve significant technical nuance, and the effectiveness of each step influences the final mask quality. Engineers must fine-tune preprocessing, adjust model architecture, define appropriate hyperparameters, and validate outputs across diverse test conditions.
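The stages listed above can be wired together in a short end-to-end sketch. Everything here is a stand-in: `acquire` returns a hard-coded toy image, `toy_model` simply reuses normalized intensity as a foreground probability in place of a trained network, and `refine` removes isolated pixels as one simple example of post-processing. The point is the shape of the pipeline, not the quality of any step.

```python
def acquire():
    """Stand-in for reading from a scanner or camera: a toy grayscale image."""
    return [
        [10, 12, 11, 10],
        [11, 200, 210, 12],
        [10, 205, 12, 11],
        [12, 11, 10, 220],
    ]


def preprocess(image):
    """Min-max normalize intensities to [0, 1]."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1
    return [[(v - lo) / span for v in row] for row in image]


def toy_model(image):
    """Placeholder for a trained network: treats normalized intensity
    as the per-pixel foreground probability."""
    return [row[:] for row in image]


def to_mask(probabilities, cutoff=0.5):
    """Binarize probabilities into a segmentation mask."""
    return [[1 if p >= cutoff else 0 for p in row] for row in probabilities]


def refine(mask):
    """Drop foreground pixels that have no 4-connected foreground neighbor."""
    h, w = len(mask), len(mask[0])

    def has_neighbor(r, c):
        return any(
            0 <= r + dr < h and 0 <= c + dc < w and mask[r + dr][c + dc]
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
        )

    return [[1 if mask[r][c] and has_neighbor(r, c) else 0 for c in range(w)]
            for r in range(h)]


def measure_area(mask):
    """Downstream use: count foreground pixels."""
    return sum(sum(row) for row in mask)


def run_pipeline():
    image = acquire()
    prepared = preprocess(image)
    probabilities = toy_model(prepared)
    mask = refine(to_mask(probabilities))
    return mask, measure_area(mask)


mask, area = run_pipeline()
# mask == [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 0, 0], [0, 0, 0, 0]], area == 3
```

The bright pixel in the bottom-right corner passes the cutoff but is removed by the refinement step because it is isolated, which illustrates how each stage shapes the final mask.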

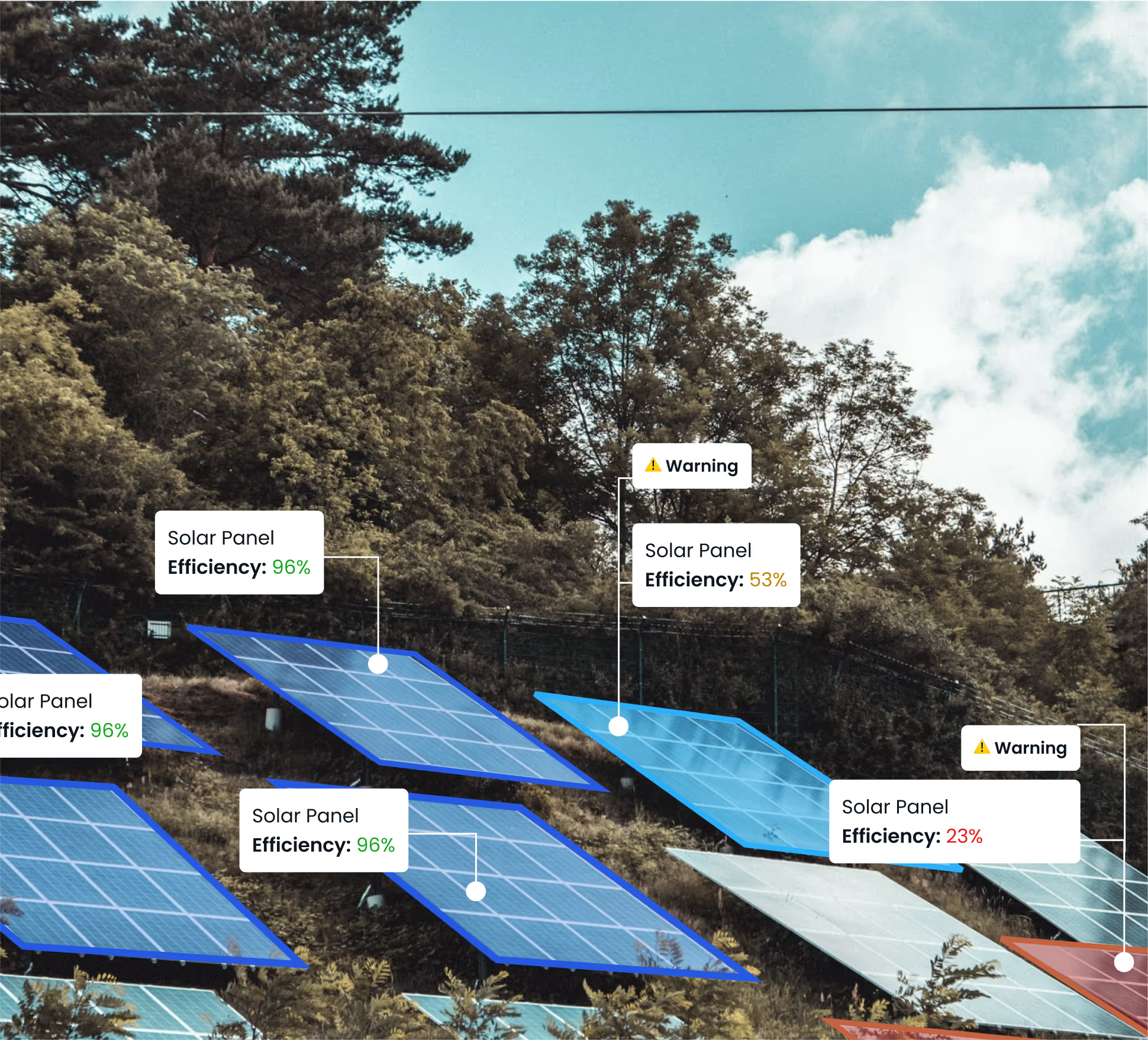

Real-World Applications

Segmentation is used widely across medical imaging, automated inspection, robotics, autonomous driving, and scientific research. In medical imaging, segmentation helps measure tumor volumes, isolate organs, generate 3D models, and assist radiologists in analyzing complex scans. In industrial contexts, segmentation identifies defects, tracks components, and monitors equipment. Researchers at institutions like Caltech and ETH Zurich’s Computer Vision and Learning Group continue to explore new segmentation techniques, especially for edge cases where classical methods fail or labeled data is limited.

Segmentation in Biomedical Research

Biomedical imaging relies heavily on segmentation across MRI, CT, PET, microscopy, and histopathology. Groups like the Center for Biomedical Image Computing and Analytics (CBICA) at UPenn develop segmentation methods that support brain mapping, cancer detection, and large-scale quantitative imaging. Institutions such as Karolinska Institutet use segmentation for clinical research on neurological diseases, cardiovascular imaging, and developmental biology. These applications highlight how segmentation bridges clinical care and scientific discovery.

Frontier Research and Emerging Methods

New segmentation methods use transformers, diffusion models, or hybrid architectures that combine CNNs with attention mechanisms. These models can capture global context more effectively and adapt to multi-modal input such as text-guided segmentation or cross-modal interpretation. Research from the University of Cambridge Machine Intelligence Lab and the Max Planck Institute for Intelligent Systems is advancing these frontiers. Their work explores better spatial reasoning, self-supervised learning, and shape-aware segmentation. These advances will help AI systems operate more reliably in clinical, industrial, and scientific contexts.
