Understanding the Relationship Between Image Segmentation and Object Detection

Image segmentation and object detection sit at the heart of modern computer vision. They are often discussed together because both are used to localize and identify objects within visual data. However, they do not provide the same level of detail and are not interchangeable. Object detection identifies and locates objects with bounding boxes. Image segmentation provides pixel-level understanding of shapes, boundaries, textures, and fine-grained structures. Successful AI product teams know exactly when each technique is appropriate and how they complement each other inside a larger perception stack.

The confusion often stems from the fact that both techniques appear in the same domains: autonomous driving, medical imaging, retail analytics, agriculture, robotics, and geospatial AI. Yet the annotation effort, dataset construction, QA workflow, and model performance expectations differ significantly. Choosing the wrong technique early in model development can lead to inaccurate predictions, higher annotation costs, and wasted engineering cycles. This makes it essential to understand their fundamental differences before launching an AI dataset initiative.

What Object Detection Really Does in a Computer Vision Workflow

Object detection is designed to answer two questions:

- What objects are present in this image?

- Where are these objects located?

The output is a list of bounding boxes, each associated with a class label and a confidence score. The bounding box itself is a simplification — it encloses an object in a rectangular region of interest, regardless of its shape. This makes object detection extremely fast, efficient, and scalable across large datasets.
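As a rough illustration of that output, the sketch below models one detection as a box plus a label and a score, then keeps only the confident ones. The `Detection` class, the `filter_by_confidence` helper, the 0.5 threshold, and the sample values are all assumptions made for this example; they are not tied to any particular detector.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detector output: an axis-aligned box, a class label, and a score."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str
    score: float  # confidence in [0, 1]


def filter_by_confidence(detections, threshold=0.5):
    """Keep detections whose score meets the threshold, highest score first."""
    kept = [d for d in detections if d.score >= threshold]
    return sorted(kept, key=lambda d: d.score, reverse=True)


# Hypothetical detector output for a single image.
detections = [
    Detection(150, 40, 400, 220, "car", 0.78),
    Detection(12, 30, 110, 200, "pedestrian", 0.91),
    Detection(5, 5, 20, 18, "car", 0.22),
]
confident = filter_by_confidence(detections, threshold=0.5)
```

The threshold is a product decision rather than a property of the model: raising it trades missed objects for fewer false alarms.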

In practice, object detection is ideal when approximate localization is acceptable. Retail inventory systems only need to know where products are. Traffic detection systems only need coarse positions of vehicles or pedestrians. Drone footage analysis often benefits from speed over precision. Bounding boxes are also fast to annotate, which is critical when handling millions of frames.

Most detection models today originate from well-established research lines. Model families like YOLO, SSD, and Faster R-CNN became industry standards because they balance speed and accuracy. Datasets like Open Images support large-scale training and benchmarking with real-world variability. These foundations allow engineers to build detection systems quickly and deploy them with modest computational overhead.

The Role of Image Segmentation in High-Precision Computer Vision

Image segmentation goes beyond identifying objects. It assigns a class to every pixel in an image. Instead of drawing boxes around objects, segmentation models map the exact shape and boundary of structures, surfaces, and materials.
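To make "a class for every pixel" concrete, here is a toy semantic mask written as a plain grid of class ids. The class names, ids, and the 4x6 scene are invented for illustration; real masks have the same height and width as the image they describe.

```python
from collections import Counter

# Hypothetical class ids for a toy scene.
CLASS_NAMES = {0: "background", 1: "road", 2: "car"}

# A 4x6 semantic mask: one class id per pixel.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 2, 2, 2, 0, 0],
    [1, 2, 2, 2, 1, 1],
    [1, 1, 1, 1, 1, 1],
]


def pixel_counts(mask):
    """Count how many pixels were assigned to each class name."""
    counts = Counter(class_id for row in mask for class_id in row)
    return {CLASS_NAMES[class_id]: n for class_id, n in counts.items()}


counts = pixel_counts(mask)
```

Because every pixel carries a label, quantities such as area per class fall out of a simple count, which a list of boxes cannot provide.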

This level of detail transforms simple perception tasks into full scene understanding. It also enables AI systems to reason about geometry, occlusions, fine edges, and tiny anomalies that bounding boxes cannot capture. Pixel-accurate localization is essential in medical imaging, robotics, manufacturing, and autonomous navigation. The difference between predicting the outline of a tumor, the edge of a pedestrian’s leg, or the contour of a weld line can be the difference between a safe model and a dangerous one.

The most common segmentation models today are based on architectures like U-Net, DeepLab, Mask R-CNN, and modern transformer-based variants. These models require richer, more detailed annotation data. Leading datasets such as Cityscapes provide fully pixel-labeled scenes that allow segmentation models to learn complex structures, shadows, depth cues, and object boundaries.

While segmentation is more resource-intensive than object detection, the payoff is significant when the application demands precision.

Key Differences Between Image Segmentation and Object Detection

To make the correct choice between segmentation and detection, teams must understand their fundamental differences and how these differences affect annotation strategy, model behavior, and deployment.

Precision Level

Object detection provides coarse localization using bounding boxes. This is often sufficient for counting objects, tracking them, or triggering alerts. Image segmentation offers pixel-level understanding and captures exact shapes, textures, and boundaries. Segmentation is indispensable for any application that requires detailed measurement or structure analysis.
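One way to see how much a box can overstate an object is to compare the object's pixel area with the area of its tightest enclosing box. The sketch below does this for a thin diagonal shape, such as a crack or a cable. The function names and the toy mask are assumptions for this example, and coordinates are inclusive pixel indices.

```python
def tight_box(mask):
    """Tightest inclusive (x_min, y_min, x_max, y_max) around nonzero pixels."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


def fill_ratio(mask):
    """Fraction of the enclosing box that the object actually covers."""
    box = tight_box(mask)
    if box is None:
        return 0.0
    x_min, y_min, x_max, y_max = box
    box_area = (x_max - x_min + 1) * (y_max - y_min + 1)
    object_area = sum(1 for row in mask for v in row if v)
    return object_area / box_area


# A thin diagonal object on a 4x4 grid.
diagonal = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
ratio = fill_ratio(diagonal)
```

Here the object fills only a quarter of its box, so any measurement taken from the box alone would be off by a factor of four.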

Annotation Complexity

Bounding boxes are easy and fast to annotate. Segmenting objects requires masks that follow complex outlines. The annotation time per object is significantly higher. Teams must consider whether they need speed or accuracy at scale.

Computational Requirements

Detection models are generally lighter and faster than segmentation models. Segmentation requires more compute during training and inference. This can affect how and where the model is deployed, particularly on edge devices.

Model Generalization

Box-based detectors generalize well across varied real-world settings because they learn coarse visual patterns. Segmentation models must learn more detailed relationships, making them more sensitive to variations in lighting, textures, or camera conditions. This requires more robust data curation and QA.

Downstream Use Cases

Detection is ideal for recognition, tracking, and automation triggers. Segmentation supports measurement, augmentation, and precise visual reasoning. Misalignment between the chosen technique and the actual downstream requirement is one of the most common sources of model failure in computer vision pipelines.

When Object Detection Is the Right Choice

Object detection excels when speed, scalability, and approximate localization are the priority. It is perfectly suited for high-volume datasets where objects vary widely in shape, environment, and appearance.

Real-Time Retail and Inventory Analytics

In retail environments, detection supports product recognition, shelf analytics, and checkout-free systems. Boxes are enough to identify product presence, track movement, and trigger replenishment workflows.

Traffic Monitoring and Smart City Applications

Detection powers vehicle counting, pedestrian analysis, congestion monitoring, and speed estimation. These tasks rarely require pixel-level detail. Bounding boxes remain useful across varying weather, camera angles, and image quality.

Industrial Safety Monitoring

Manufacturing environments use detection to identify helmets, vests, forklifts, and entry into restricted zones. The objective is to detect the presence of safety equipment or hazards quickly, not to measure their outline.

Robotics and Autonomous Drones

Drones can use object detection for obstacle avoidance, wildlife detection, crop monitoring, and infrastructure inspection. The benefit is stability and low computational cost during flight.

Large-Scale Real-World Datasets

Systems trained on datasets like the Open Images Dataset benefit from enormous variability and broad object classes. This creates models that generalize well under unpredictable conditions.

Large-scale benchmarks such as the KITTI Vision Benchmark Suite have shaped how detection systems are trained and evaluated in autonomous driving environments.

When Image Segmentation Is the Right Choice

Segmentation is the correct choice when understanding the exact shape, boundary, and spatial relationship of objects is essential. Many industries cannot operate safely or effectively without this level of precision.

Medical Imaging and Diagnostics

Segmentation is the foundation of tumor mapping, organ delineation, cell counting, and surgical planning. Every pixel matters. Coarse boxes would deliver unusable results. MRI, CT, ultrasound, and digital pathology all rely on fine-grained masks with strict QA requirements.

Autonomous Driving and Robotics

Segmentation powers lane detection, drivable area estimation, curb detection, sidewalk boundaries, and fine-grained understanding of road structure. Datasets like Cityscapes set the standard for pixel-accurate training data in these environments.

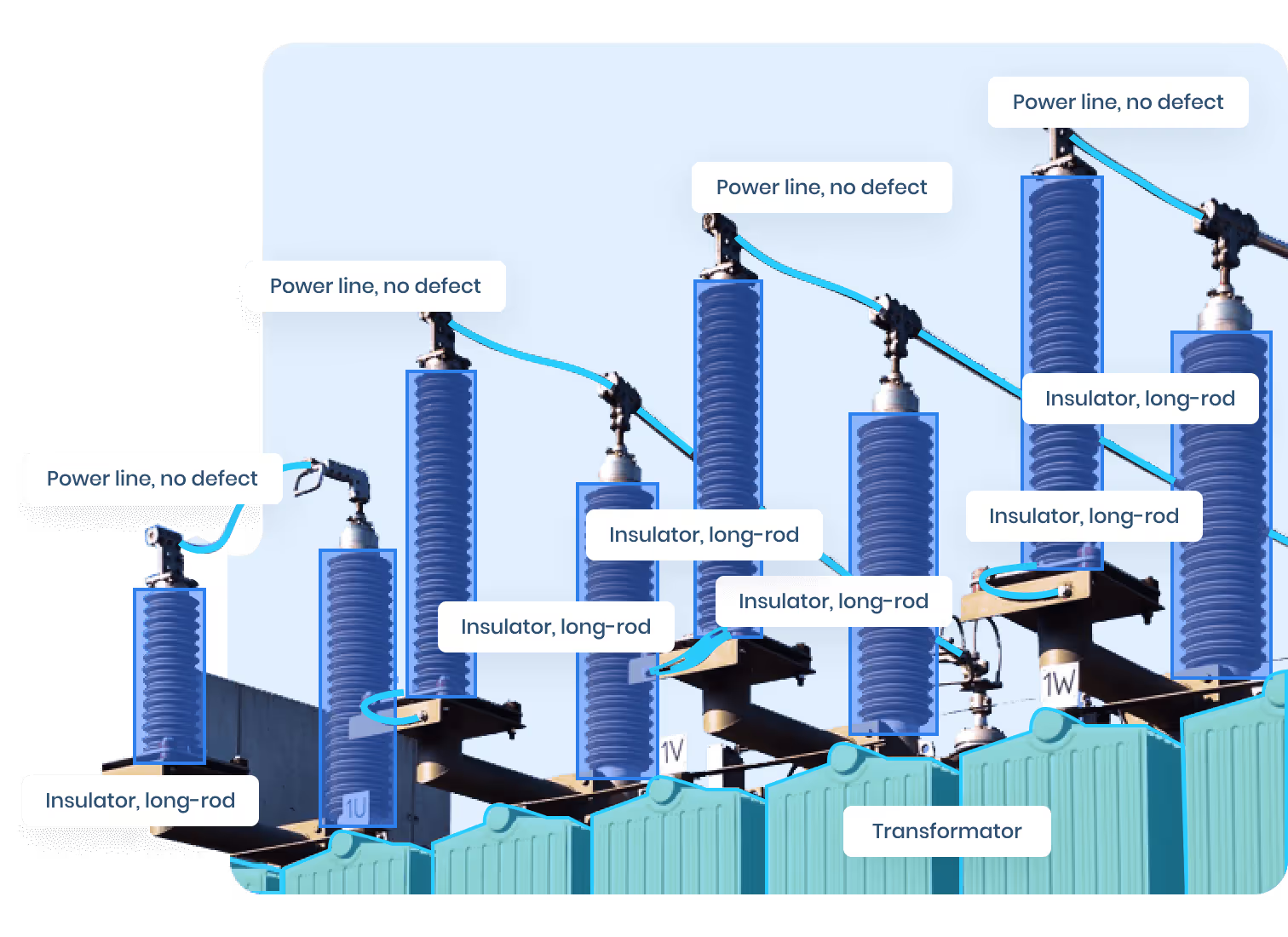

Manufacturing and Quality Control

Defect detection, surface analysis, and anomaly localization depend on segmentation. Bounding boxes cannot capture small scratches, weld inconsistencies, or micro-defects.

Agriculture and Environmental Monitoring

Segmentation enables precise leaf boundary extraction, canopy analysis, plant disease identification, and yield estimation. This level of detail is essential for high-precision agronomy systems.

Geospatial and Aerial Mapping

Satellite and drone imagery rely on segmentation for building footprint extraction, land classification, flood detection, and vegetation mapping. These tasks require pixel-level spatial boundaries.

How Modern Deep Learning Models Approach Detection vs Segmentation

The field of computer vision has evolved dramatically over the last decade. Today’s models differ fundamentally from early handcrafted-feature approaches. Modern architectures share similar backbones but diverge in their output structures, which is why understanding the difference between detection and segmentation matters more than ever.

Early techniques like SIFT, developed by David Lowe at the University of British Columbia, laid the foundation for feature-based perception long before deep learning models became dominant.

Backbone Networks

Whether performing detection or segmentation, models generally rely on convolutional or transformer-based backbones to extract visual features. These backbones analyze edges, corners, textures, colors, and patterns. They form the foundation for both detection and segmentation frameworks.

Detection Heads

Detection models add lightweight classification and regression heads. These predict:

- the object class

- the bounding box coordinates

- the confidence level

This keeps inference fast and efficient.
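A simplified view of how those three outputs come together for a single candidate object is sketched below. It assumes the classification head emits raw scores that are turned into probabilities with a softmax, which is one common design; some detectors use per-class sigmoid scores or a separate objectness score instead. The label set and the numbers are hypothetical.

```python
import math

CLASS_NAMES = ["car", "pedestrian", "bicycle"]  # hypothetical label set


def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_prediction(class_logits, box):
    """Combine the classification and regression outputs for one candidate."""
    probs = softmax(class_logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return {"label": CLASS_NAMES[best], "confidence": probs[best], "box": tuple(box)}


prediction = decode_prediction(class_logits=[2.0, 0.0, 0.0], box=[10, 20, 50, 80])
```

The per-object work is a handful of arithmetic operations, which is part of why detection heads add little cost on top of the backbone.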

Segmentation Heads

Segmentation models add decoders that upsample feature maps back to the original image resolution. This produces pixel-wise classifications. To preserve spatial detail, U-Net relies on skip connections between encoder and decoder, while DeepLab relies on atrous (dilated) convolutions.
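The two steps described here, upsampling and per-pixel classification, can be sketched without any learned weights. The example below uses nearest-neighbor upsampling as a stand-in for a real decoder, which would normally use learned or bilinear upsampling, and the low-resolution score maps are made up for illustration.

```python
def upsample_nearest(grid, factor):
    """Repeat every cell `factor` times along both axes."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def argmax_mask(score_maps):
    """For each pixel, pick the class whose score map is highest."""
    height, width = len(score_maps[0]), len(score_maps[0][0])
    return [
        [max(range(len(score_maps)), key=lambda c: score_maps[c][y][x])
         for x in range(width)]
        for y in range(height)
    ]


# Hypothetical 2x2 low-resolution score maps, one per class.
low_res_scores = [
    [[0.9, 0.2], [0.8, 0.1]],  # class 0
    [[0.1, 0.8], [0.2, 0.9]],  # class 1
]
full_res_scores = [upsample_nearest(m, factor=2) for m in low_res_scores]
mask = argmax_mask(full_res_scores)
```

Every output pixel needs its own decision, so the cost of this stage grows with image resolution rather than with the number of objects.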

Multitask Models

Some modern architectures perform both detection and segmentation. Mask R-CNN is a classic example: it predicts a class label, a bounding box, and a segmentation mask for each object. More recent approaches, such as those developed for NVIDIA’s computer vision ecosystem, fuse these tasks into unified transformer-based pipelines.

Multitask learning provides flexibility but still requires segmentation-quality annotations for the mask component. This means teams must correctly budget annotation time and ensure data consistency across tasks.

Annotation Workflows for Object Detection vs Segmentation

Annotation quality directly determines how well models will perform in production. The workflows for detection and segmentation differ significantly, and teams must plan their workforce, QA process, and toolstack accordingly.

Bounding Box Annotation Workflow

Box annotation is straightforward. Annotators draw a rectangle around each object instance and assign it a class. The process is fast and easy to scale with a medium-sized workforce. The biggest challenge is maintaining consistent box tightness and ensuring that overlapping objects are correctly separated.

Because object boundaries are not required, QA is relatively simple. Common mistakes include partial boxes, oversized boxes, and mislabelled classes. These can be quickly detected by automated scripts or human reviewers.
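A minimal sketch of such an automated check is shown below. It flags unknown class names, boxes that spill outside the image, and boxes too small to be meaningful. The annotation layout, the label set, the issue names, and the 2-pixel minimum are assumptions for this example. Judging whether a box is too loose or cuts off part of an object still needs a reference annotation or a human reviewer.

```python
ALLOWED_LABELS = {"helmet", "vest", "forklift"}  # hypothetical taxonomy


def check_box(annotation, image_width, image_height,
              allowed_labels=ALLOWED_LABELS, min_size=2):
    """Return a list of QA issues for one box (an empty list means it passed)."""
    issues = []
    x_min, y_min, x_max, y_max = annotation["bbox"]
    if annotation["label"] not in allowed_labels:
        issues.append("unknown_label")
    if x_min < 0 or y_min < 0 or x_max > image_width or y_max > image_height:
        issues.append("out_of_bounds")
    if x_max - x_min < min_size or y_max - y_min < min_size:
        issues.append("degenerate_box")
    return issues


annotations = [
    {"label": "helmet", "bbox": (40, 10, 90, 60)},
    {"label": "helmt", "bbox": (40, 10, 90, 60)},         # typo in the class name
    {"label": "forklift", "bbox": (600, 300, 700, 500)},  # spills past a 640x480 frame
    {"label": "vest", "bbox": (100, 100, 101, 180)},      # only 1 pixel wide
]
report = [check_box(a, image_width=640, image_height=480) for a in annotations]
```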

Segmentation Annotation Workflow

Segmentation annotation is far more demanding. Annotators must trace object boundaries precisely, often at pixel-level resolution. This requires training, domain experience, and specialized tooling. In medical imaging, for example, annotators must understand anatomy, imaging modalities, and clinical rules.

Segmentation QA is also significantly more complex. Teams must:

- compare masks against reference standards

- measure overlap (IoU, Dice score)

- detect inconsistencies in contour shape

- validate fine structural details

This is why segmentation is usually assigned to trained annotators, medical specialists, or highly supervised annotation teams. The investment is high, but it is essential for pixel-level reliability.
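The overlap metrics named in the list above are simple to compute for binary masks. IoU divides the intersection by the union, and the Dice score divides twice the intersection by the sum of the two mask areas. The sketch below assumes both masks have the same shape and treats two empty masks as perfect agreement, which is a convention chosen for this example.

```python
def mask_overlap(pred, ref):
    """Return (IoU, Dice) for two binary masks of identical shape."""
    intersection = pred_area = ref_area = 0
    for pred_row, ref_row in zip(pred, ref):
        for p, r in zip(pred_row, ref_row):
            intersection += 1 if (p and r) else 0
            pred_area += 1 if p else 0
            ref_area += 1 if r else 0
    union = pred_area + ref_area - intersection
    if union == 0:
        return 1.0, 1.0  # both masks empty: treated here as agreement
    iou = intersection / union
    dice = 2 * intersection / (pred_area + ref_area)
    return iou, dice


# Annotator mask shifted one column to the right of the reference.
reference = [
    [1, 1, 0],
    [1, 1, 0],
]
annotator = [
    [0, 1, 1],
    [0, 1, 1],
]
iou, dice = mask_overlap(annotator, reference)
```

For the same pair of masks, Dice is never lower than IoU, so acceptance thresholds set for one metric should not be reused for the other.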

Dataset Size, Diversity, and Augmentation Requirements

Because object detection and segmentation operate at different levels of precision, they also have different dataset requirements. Understanding these differences helps teams avoid underfitting or overfitting their models.

Dataset Size for Detection

Detection models scale well with large datasets. The more objects and environments the model sees, the better it generalizes. Datasets like COCO provide hundreds of thousands of labeled object instances across 80 object categories. This sets a benchmark for real-world detection performance.

Augmentation for detection tends to be straightforward. Techniques like random cropping, flipping, and color jittering are generally sufficient.
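Even simple augmentations have one requirement for detection data: when the image geometry changes, the box coordinates must change with it. The sketch below shows a horizontal flip. It assumes boxes are stored as (x_min, y_min, x_max, y_max) in pixel-edge coordinates, where x runs from 0 to the image width; other storage formats need a different formula.

```python
def hflip_image(image):
    """Mirror an image, given as a list of pixel rows, left to right."""
    return [row[::-1] for row in image]


def hflip_box(box, image_width):
    """Mirror a box so it still covers the same object after the flip."""
    x_min, y_min, x_max, y_max = box
    return (image_width - x_max, y_min, image_width - x_min, y_max)


image_width = 100
box = (10, 20, 50, 80)  # hypothetical annotation
flipped_box = hflip_box(box, image_width)
```

Color jittering, by contrast, leaves geometry untouched, so the boxes can be reused as they are.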

Dataset Size for Segmentation

Segmentation datasets are often smaller in image count but carry significantly more detailed annotations. In semantic segmentation, every pixel in every image is labeled. This means dataset creation is slower and more expensive. However, segmentation benefits from more advanced augmentation techniques such as:

- elastic deformations

- Cutout and CutMix

- contrast and intensity variations

- synthetic masks

Because segmentation models rely heavily on boundary information, augmentations must be applied carefully. Over-processing images can distort shapes and produce unnatural masks.
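One practical reading of "applied carefully" is that geometric changes must hit the image and its mask identically, while intensity changes must touch only the image. The sketch below illustrates that split with a flip and a brightness shift. The function name, parameters, and toy data are assumptions for this example. The same reasoning is why masks are usually resized with nearest-neighbor interpolation, so that class ids are never blended into invalid values.

```python
import random


def augment_pair(image, mask, rng, flip_prob=0.5, max_brightness_shift=20):
    """Randomly augment an image and its mask while keeping them aligned."""
    # Geometric change: applied identically to image and mask.
    if rng.random() < flip_prob:
        image = [row[::-1] for row in image]
        mask = [row[::-1] for row in mask]
    # Photometric change: image only, because class ids are labels, not intensities.
    shift = rng.randint(-max_brightness_shift, max_brightness_shift)
    image = [[min(255, max(0, px + shift)) for px in row] for row in image]
    return image, mask


rng = random.Random(0)  # seeded so the run is repeatable
image = [[10, 20, 30], [40, 50, 60]]
mask = [[0, 1, 1], [0, 0, 1]]
aug_image, aug_mask = augment_pair(
    image, mask, rng, flip_prob=1.0, max_brightness_shift=0
)
```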

Choosing Between Object Detection and Segmentation for Your AI Use Case

The best way to choose between detection and segmentation is to evaluate the exact business or engineering objective. Teams should ask three questions:

Do we need exact object boundaries or is approximate location enough?

If boundaries matter, segmentation is required.

Do we need the model to understand shapes, structures, or fine details?

If yes, segmentation is essential.

Will the system operate under strict safety or regulatory constraints?

Healthcare, automotive, robotics, and manufacturing usually require segmentation.

If the objective is counting, tracking, coarse localization, or alerts, detection is almost always sufficient. If the objective is measurement, medical analysis, safety-critical perception, or fine-grained analysis, segmentation is the only viable choice.
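The three questions can be written down as a small checklist function, which some teams find useful for recording the decision alongside the dataset specification. This is only a sketch of the rule of thumb described above: the function name, inputs, and wording of the reasons are invented for illustration, and a real decision would also weigh budget and latency.

```python
def recommend_task(needs_exact_boundaries, needs_fine_detail, safety_critical):
    """Map the three screening questions to a starting recommendation."""
    if needs_exact_boundaries:
        return ("segmentation", "exact object boundaries are required")
    if needs_fine_detail:
        return ("segmentation", "shapes or fine structures must be understood")
    if safety_critical:
        return ("segmentation", "safety or regulatory constraints usually demand it")
    return ("detection", "approximate localization is sufficient")


# Hypothetical projects.
shelf_counting = recommend_task(False, False, False)
tumor_mapping = recommend_task(True, True, True)
```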

How to Combine Segmentation and Detection in One System

Some production systems require both approaches. For example:

- Autonomous vehicles use detection for vehicles but segmentation for lanes and drivable space.

- Medical imaging systems detect organs with bounding boxes before running segmentation.

- Retail systems use detection to find products and segmentation to analyze shelf space or packaging.

Combining these tasks requires a well-planned annotation strategy. Data must be synchronized across both modalities. Workforce roles must be defined distinctly. QA workflows must support both bounding box rules and segmentation accuracy metrics. Many modern toolstacks and models support hybrid outputs, but the underlying dataset design must be clean, consistent, and versioned.
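When the same object carries both a box and a mask, one inexpensive consistency check is to derive a box from the mask and compare it with the annotated box. The sketch below assumes inclusive pixel coordinates and a 1-pixel tolerance; both are choices made for this example, and the function names are hypothetical.

```python
def box_from_mask(mask):
    """Tightest inclusive (x_min, y_min, x_max, y_max) around nonzero pixels."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


def box_agrees_with_mask(box, mask, tolerance=1):
    """True if every box edge is within `tolerance` pixels of the mask extent."""
    derived = box_from_mask(mask)
    if derived is None:
        return False  # a box with an empty mask is inconsistent
    return all(abs(a - b) <= tolerance for a, b in zip(box, derived))


object_mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
consistent = box_agrees_with_mask((1, 1, 3, 2), object_mask)
stale = box_agrees_with_mask((3, 0, 4, 3), object_mask)
```

A mismatch often means one of the two labels was edited without the other, which is the kind of drift that dataset versioning is meant to catch.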

Practical Examples Beyond Typical Use Cases

To bring the differences to life, it is worth highlighting less obvious examples that demonstrate where segmentation or detection is especially effective.

In insurance automation, segmentation supports damage assessment by isolating dents, cracks, and impacted regions. In marine robotics, segmentation helps underwater drones identify sediment types or coral structures in murky conditions. In rail transportation, segmentation supports precise rail defect detection where even millimeter-scale deviations matter. In construction, segmentation helps classify building materials and map progress across phases. Each application highlights the precision advantage of segmentation beyond typical AI marketing narratives.

Conversely, detection is invaluable in domains where speed and generalization matter most. Security analytics rely on bounding boxes for quick alerting. Wildlife monitoring uses detection for species identification in dynamic environments. Logistics tracking depends on detection for package flow and inventory counting. These systems benefit from the simplicity, speed, and robustness of bounding boxes.

Real-World Examples of Detection and Segmentation Datasets

To understand how industries benchmark and develop models, it helps to look at gold-standard datasets that shape research and production.

COCO Dataset

Widely used for object detection, segmentation, and keypoint estimation, COCO sets the benchmark for localization tasks. Its diversity and annotation detail allow researchers to evaluate models under realistic conditions.

Cityscapes Dataset

Designed for urban scene understanding, Cityscapes provides pixel-accurate masks for multiple classes, making it a foundational dataset for autonomous driving research.

Open Images Dataset

This large-scale dataset includes millions of images with detection, segmentation, and relationship annotations. It is particularly valuable for training models that must operate in highly variable real-world settings.

PyImageSearch Tutorials

Although not a dataset, PyImageSearch offers trusted practical guides for learning how segmentation and detection models work, making it a valuable resource for teams building prototypes or training internal staff.

NVIDIA Computer Vision Resources

NVIDIA maintains frameworks, sample models, and documentation that support both detection and segmentation, particularly for edge devices and GPU-optimized inference.

Many of the architectural advances that power modern detection and segmentation models originate from research published at CVPR, the leading global conference in computer vision.

The Waymo Open Dataset provides a large-scale combination of 2D detection, 3D bounding boxes, and pixel-level segmentation, making it a reference point for autonomous vehicle research.

How to Future-Proof Your Annotation Strategy

When choosing between segmentation and detection today, teams should also consider how their system will evolve tomorrow. Many AI teams begin with detection and gradually shift to segmentation as their product matures. This is common in industries like automotive, retail automation, and manufacturing. Teams should therefore plan a data roadmap that preserves dataset versioning, labeling guidelines, and QA infrastructure.

Future-proofing involves:

- clearly defining annotation guidelines early

- ensuring consistent class taxonomies

- maintaining dataset quality checks

- documenting every change in labeling logic

- planning incremental updates rather than full relabeling efforts

A well-designed dataset pipeline avoids costly re-annotation and ensures the model can evolve with new features.
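Part of that pipeline can be automated. As one example, the sketch below compares the class taxonomy of two dataset versions and reports ids that were added, removed, or renamed, so that changes in labeling logic are documented rather than discovered later. The `{class_id: class_name}` layout and the sample versions are assumptions for this example.

```python
def diff_taxonomy(old, new):
    """Compare two {class_id: class_name} mappings between dataset versions."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    renamed = sorted(cid for cid in set(old) & set(new) if old[cid] != new[cid])
    return {"added": added, "removed": removed, "renamed": renamed}


# Hypothetical taxonomies for two dataset versions.
taxonomy_v1 = {0: "background", 1: "car", 2: "person"}
taxonomy_v2 = {0: "background", 1: "vehicle", 2: "person", 3: "bicycle"}
changes = diff_taxonomy(taxonomy_v1, taxonomy_v2)
```

A renamed id is the case that most deserves a human look, because it may signal that existing labels now mean something broader or narrower than when they were drawn.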

Conclusion: Making the Right Choice for Your Vision System

Image segmentation and object detection are both essential to modern computer vision, but they serve different roles. Detection provides speed, scalability, and efficient localization. Segmentation provides precision, structure, and deep scene understanding. The right choice depends entirely on the problem your system must solve and the level of accuracy your model requires.

For teams building AI systems in robotics, healthcare, autonomous navigation, or high-precision industrial environments, segmentation is often the backbone of reliable perception. For teams building analytics systems, tracking pipelines, or real-time alerting mechanisms, detection offers unmatched speed and efficiency.

If you are preparing a dataset, planning an annotation strategy, or evaluating the feasibility of a segmentation or detection project, DataVLab can help you design a clean, efficient, and production-ready dataset workflow.