The Startup’s Vision: Smarter Micro-Mobility Through AI 🛴

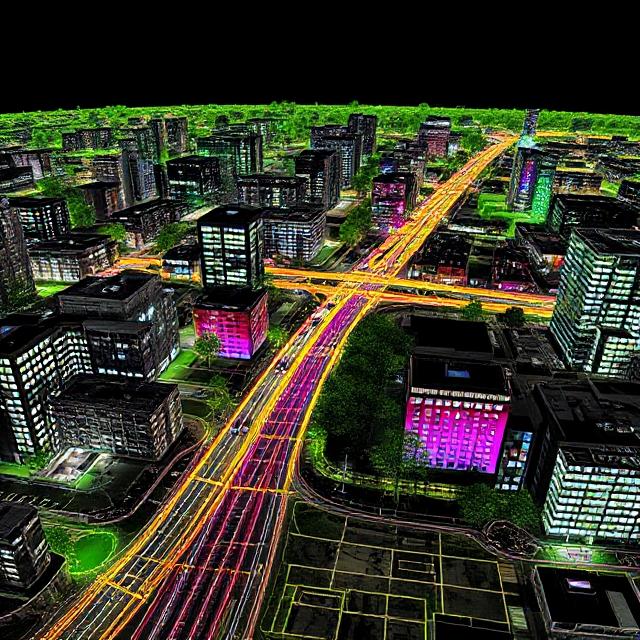

The client — a venture-backed startup based in Northern Europe — had a bold mission: redesign last-mile transportation with AI-powered micromobility solutions. Think e-scooters, autonomous sidewalk robots, and compact delivery vehicles that can understand their environment and make real-time decisions.

To make this vision a reality, they needed more than just a vehicle; they needed perception. And that required robust data:

- Annotated LiDAR point clouds

- Synchronized camera footage

- Accurate GPS timestamps

- Rich semantic labels

Off-the-shelf datasets like KITTI or nuScenes were too generalized. They didn't reflect the density, obstacles, or GPS jitter typical in historic urban centers with cobblestones, narrow alleys, and pedestrian clutter. The startup needed something custom.

Project Scope and Constraints: Balancing Ambition with Reality 🧭

The initial request was ambitious:

- Fuse 3D LiDAR scans with front/rear stereo camera data and IMU/GPS logs

- Annotate moving objects (cars, bikes, pedestrians) and static infrastructure (curbs, traffic signs, poles)

- Provide panoptic segmentation for critical zones like sidewalks and bike lanes

- Deliver 200 scenes from 5 cities within 3 months

🔍 But here's the reality:

- Data size per scene: ~2–4 GB uncompressed

- Fusion complexity: Frame alignment needed precise temporal sync

- LiDAR drift in narrow streets was a recurring issue

- Multiple sensors created misalignments that required constant calibration
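To put the storage side of these constraints in perspective, here is a back-of-the-envelope estimate built only from the figures above (200 scenes at roughly 2–4 GB each). The function name is illustrative, and the estimate ignores compression, derived artifacts, and backups.

```python
from __future__ import annotations


def estimate_raw_volume_gb(
    num_scenes: int, gb_per_scene_min: float, gb_per_scene_max: float
) -> tuple[float, float]:
    """Return the (low, high) raw storage estimate in GB."""
    return num_scenes * gb_per_scene_min, num_scenes * gb_per_scene_max


low, high = estimate_raw_volume_gb(200, 2.0, 4.0)
print(f"Raw capture volume: {low:.0f}-{high:.0f} GB")  # Raw capture volume: 400-800 GB
```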

To stay within budget and time, the scope evolved mid-project — a decision that ultimately saved the client from burnout and resource waste. We'll explain how.

From Raw Capture to Fusion-Ready Data: Setting Up the Pipeline 🔧

At first glance, collecting sensor data may seem like a straightforward task — drive the vehicle, gather recordings, and send the files to your labeling team. But in reality, transforming raw multi-sensor input into a fusion-ready, annotation-friendly dataset is a technically demanding process that touches hardware, software, and data engineering.

The urban mobility startup quickly realized that the fusion pipeline itself would be the linchpin of the entire project. Without a well-structured pipeline, even the best annotators would be slowed down by inconsistencies, missing frames, and synchronization errors.

Here’s how the team tackled the fusion challenge, step-by-step.

Multi-Sensor Hardware Setup: The Capture Vehicle

To accurately perceive the urban environment, the vehicle was equipped with a custom sensor rig that collected data in real-time. Key components included:

- LiDAR Sensor (Velodyne VLP-32C): Captured high-resolution 360° point clouds at 10 Hz, ideal for detecting 3D geometry in urban scenes.

- Stereo RGB Cameras: Dual 1080p front-facing cameras provided visual context and aided in semantic segmentation, especially useful in occluded or ambiguous zones.

- GPS with RTK Correction: Delivered centimeter-level location accuracy, crucial in dense urban areas with GPS shadow zones.

- Inertial Measurement Unit (IMU): Recorded pitch, yaw, and acceleration to aid in sensor fusion and correct GPS drift.

- Data Logger + Edge Compute Module: A compact onboard compute system timestamped, synchronized, and stored all incoming data with high bandwidth.

This setup allowed the scooter to capture high-fidelity spatial and visual information every second — generating approximately 2–4 GB of raw data per scene.

Sensor Synchronization and Calibration

Synchronization was not optional: even minor timestamp mismatches between LiDAR and camera frames could distort the projected overlays, leading to annotation errors and, downstream, models trained on misaligned labels.

To solve this, the team implemented:

- High-frequency timestamp matching via ROS (Robot Operating System)

- Extrinsic calibration using chessboard targets for LiDAR-camera alignment

- Time synchronization protocols based on PTP (Precision Time Protocol)

- Dynamic recalibration procedures triggered every 2–3 days of capture to account for sensor drift due to vibration and temperature shifts

Every data stream (LiDAR, image, GPS, IMU) was individually timestamped and later merged using custom Python scripts that aligned frames on a per-millisecond basis.
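The team's actual scripts are not shown, but the core idea of timestamp-based merging can be sketched with a nearest-neighbor match. This is a minimal illustration, assuming all streams share one synchronized clock and are sorted by time; the function name and the 5 ms tolerance are hypothetical choices, not values from the project.

```python
from __future__ import annotations

from bisect import bisect_left


def match_nearest(
    reference_ts: list[float], candidate_ts: list[float], max_gap_s: float = 0.005
) -> list[tuple[int, int | None]]:
    """For each reference timestamp, find the index of the closest candidate.

    Both lists must be sorted ascending. A match is rejected (None) when the
    time gap exceeds max_gap_s, so the frame can be flagged instead of fused.
    """
    pairs = []
    for i, t in enumerate(reference_ts):
        pos = bisect_left(candidate_ts, t)
        # The nearest candidate sits either just before or just at/after pos.
        neighbours = [j for j in (pos - 1, pos) if 0 <= j < len(candidate_ts)]
        best = min(neighbours, key=lambda j: abs(candidate_ts[j] - t), default=None)
        if best is not None and abs(candidate_ts[best] - t) > max_gap_s:
            best = None
        pairs.append((i, best))
    return pairs


lidar_ts = [0.000, 0.100, 0.200]  # 10 Hz sweeps
camera_ts = [0.001, 0.034, 0.067, 0.101, 0.134, 0.167, 0.250]
print(match_nearest(lidar_ts, camera_ts))  # [(0, 0), (1, 3), (2, None)]
```

The third LiDAR sweep has no camera frame within tolerance, which is the kind of gap that would otherwise show up later as a misaligned overlay.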

Data Formatting and Storage

Once calibrated and time-aligned, the data was formatted for downstream use:

- LiDAR point clouds were stored in `.pcd` and `.bin` formats, compatible with visualization tools like Open3D and PCL.

- Images were saved in lossless `.png` to preserve edge detail for annotation.

- Combined metadata (including pose, heading, and frame index) was packaged into `.json` and `.yaml` files per scene.

- Scene segmentation tools split long captures into 30-second slices for annotation efficiency.

The output? A fusion-ready dataset, prepped for annotators and AI engineers alike — clean, synchronized, and semantically rich.
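The 30-second slicing step mentioned above can be sketched as simple bucketing by timestamp. This is an illustrative version, not the team's tool; it assumes sorted timestamps in seconds and measures slices from the first frame.

```python
from __future__ import annotations


def slice_capture(frame_ts: list[float], slice_len_s: float = 30.0) -> list[list[int]]:
    """Group frame indices into consecutive fixed-length time slices."""
    if not frame_ts:
        return []
    start = frame_ts[0]
    slices: dict[int, list[int]] = {}
    for idx, t in enumerate(frame_ts):
        slices.setdefault(int((t - start) // slice_len_s), []).append(idx)
    return [slices[k] for k in sorted(slices)]


# 65 s of 10 Hz LiDAR sweeps: two full slices plus a 5 s remainder.
timestamps = [i / 10 for i in range(650)]
print([len(s) for s in slice_capture(timestamps)])  # [300, 300, 50]
```

At the 10 Hz sweep rate quoted for the LiDAR, each full slice holds 300 point clouds, which gives a sense of the per-slice annotation workload.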

Annotating LiDAR Fusion: A High-Stakes Challenge 🎯

If setting up the pipeline was complex, annotating the data proved even more demanding. Labeling LiDAR fusion data is a different beast compared to simple 2D bounding boxes — you’re working in 3D space, with sparse points, moving targets, and environmental occlusions.

Annotation had to go beyond simple classifications. It needed to capture depth, movement, and geometry, while maintaining consistency across frames and sensor modalities.

Why LiDAR Annotation Is So Challenging

LiDAR provides depth information but lacks texture. A tree and a pole might return nearly identical point signatures. Glass surfaces, shiny cars, and tight alleyways often distort or erase points entirely. And unlike image annotation, where objects are visible in full color, LiDAR often captures only partial silhouettes, especially for dynamic objects like cyclists weaving between parked cars.

Major challenges included:

- Sparsity in the periphery: lower LiDAR beam resolution meant small objects like dogs or traffic cones were underrepresented.

- Occlusions: parked vehicles or pedestrians behind street furniture often had few or no LiDAR returns, so annotators had to rely on camera input.

- Sensor inconsistency: even with calibration, some frame pairs were misaligned, demanding manual realignment or annotation at the projected layer only.

Hybrid Annotation Pipeline: Merging Precision with Speed

To tackle these hurdles, the team adopted a two-stage annotation workflow:

1. LiDAR-First Segmentation

- Annotators used 3D visualization tools to segment raw point clouds.

- Objects were grouped based on geometric clustering and known spatial priors (e.g., the average height of a pedestrian).

- This process was slower but established a base 3D ground truth.

2. Image-Guided Refinement

- RGB projections of point clouds were reviewed to verify object boundaries, resolve ambiguities, and correct for missed occlusions.

- Annotators could zoom in on projected images to catch small details like stroller wheels or bicycle handlebars.

The combination of spatial precision and visual cues allowed the team to label even complex scenes with confidence — intersections with overlapping pedestrian flows, alleys full of parked scooters, or narrow one-way roads filled with delivery vans.
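The geometric clustering and spatial priors used in the LiDAR-first stage can be illustrated with a small region-growing sketch. The source does not say which algorithm or thresholds the annotation tools used, so everything here (the radius, the minimum cluster size, the pedestrian height and footprint bounds) is an assumption for illustration. In practice a point cloud library routine would replace the pure-Python loop.

```python
from collections import deque


def euclidean_clusters(points, radius=0.5, min_points=5):
    """Group points that are chained together by gaps of at most `radius`."""
    grid = {}
    for i, (x, y, z) in enumerate(points):
        key = (int(x // radius), int(y // radius), int(z // radius))
        grid.setdefault(key, []).append(i)

    def neighbours(i):
        x, y, z = points[i]
        cx, cy, cz = int(x // radius), int(y // radius), int(z // radius)
        # Only the 27 surrounding grid cells can contain points within radius.
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for dz in (-1, 0, 1):
                    for j in grid.get((cx + dx, cy + dy, cz + dz), []):
                        px, py, pz = points[j]
                        if (px - x) ** 2 + (py - y) ** 2 + (pz - z) ** 2 <= radius ** 2:
                            yield j

    seen, clusters = set(), []
    for seed in range(len(points)):
        if seed in seen:
            continue
        seen.add(seed)
        queue, members = deque([seed]), []
        while queue:
            i = queue.popleft()
            members.append(i)
            for j in neighbours(i):
                if j not in seen:
                    seen.add(j)
                    queue.append(j)
        if len(members) >= min_points:
            clusters.append(members)
    return clusters


def looks_like_pedestrian(points, members, min_h=1.0, max_h=2.1, max_footprint=1.0):
    """Apply a height/footprint prior; thresholds are illustrative assumptions."""
    xs, ys, zs = zip(*(points[i] for i in members))
    height = max(zs) - min(zs)
    footprint = max(max(xs) - min(xs), max(ys) - min(ys))
    return min_h <= height <= max_h and footprint <= max_footprint


# Synthetic scene: a 1.7 m vertical column of points and a long, flat strip.
person = [(0.0, 0.0, 0.1 * k) for k in range(18)]
strip = [(5.0 + 0.25 * k, 3.0, 0.5) for k in range(20)]
cloud = person + strip
clusters = euclidean_clusters(cloud)
print([looks_like_pedestrian(cloud, c) for c in clusters])  # [True, False]
```

A prior like this only proposes candidates. As described above, the image-guided pass is what resolves cases where geometry alone is ambiguous.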

Annotation Format and Output

For each frame, annotations included:

- 3D Bounding Boxes: For moving objects like cars, bikes, and people.

- Segmentation Masks: Applied on projected images for semantic classes like road, curb, and building.

- Object Metadata: Speed, orientation, and motion type (static vs. dynamic).

- Scene Context Tags: Whether the scene occurred during rush hour, rainy weather, or night time.

Each annotated frame was saved in a multi-layer format:

- `.json` for metadata and object-level tags

- `.bin` for raw point cloud segmentation

- `.png` overlays for QA visualizations

All assets were linked using a unified frame ID system and checked through automated consistency validators before QA review.
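An automated consistency validator of this kind could be as simple as checking that every frame ID has all three layers on disk. The sketch below assumes a hypothetical flat layout (`<scene_dir>/<frame_id>.json`, `.bin`, `.png`); the actual directory structure and validator logic are not described in the project.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

REQUIRED_SUFFIXES = (".json", ".bin", ".png")  # the three layers per frame


def find_incomplete_frames(scene_dir: Path) -> dict[str, list[str]]:
    """Map each incomplete frame ID to the asset suffixes it is missing."""
    present: dict[str, set[str]] = {}
    for path in scene_dir.iterdir():
        if path.is_file() and path.suffix in REQUIRED_SUFFIXES:
            present.setdefault(path.stem, set()).add(path.suffix)
    return {
        frame_id: [s for s in REQUIRED_SUFFIXES if s not in found]
        for frame_id, found in sorted(present.items())
        if len(found) < len(REQUIRED_SUFFIXES)
    }


# Demo on a throwaway directory: frame 000002 lacks its point cloud layer.
with tempfile.TemporaryDirectory() as tmp:
    scene = Path(tmp)
    for name in ("000001.json", "000001.bin", "000001.png", "000002.json", "000002.png"):
        (scene / name).touch()
    report = find_incomplete_frames(scene)
print(report)  # {'000002': ['.bin']}
```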

Building a Human-in-the-Loop QA Engine

Manual annotations were only the beginning. A human-in-the-loop quality assurance process was embedded to catch inconsistencies across time and modality.

Key QA layers included:

- Frame-to-frame continuity checks: To ensure an object wasn’t mislabeled halfway through its trajectory

- 3D-to-2D overlay review: Each LiDAR label was validated by projecting it into image space and confirming it matched visual boundaries

- Edge case escalation: Complex scenarios (e.g., reflections, occlusions) were flagged for expert annotator review
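The 3D-to-2D overlay review rests on projecting LiDAR coordinates into the image. A minimal pinhole-model sketch follows; it ignores lens distortion, and the rotation, translation, and intrinsics shown are made-up example values, not the project's calibration. The axis convention (LiDAR x forward, y left, z up; camera x right, y down, z forward) is also an assumption.

```python
def project_to_image(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project one LiDAR point into pixel coordinates with a pinhole model.

    rotation (3x3 nested lists) and translation (length 3) map LiDAR
    coordinates into the camera frame. Returns None for points behind
    the camera.
    """
    x, y, z = (
        sum(r * p for r, p in zip(row, point_lidar)) + t
        for row, t in zip(rotation, translation)
    )
    if z <= 0:
        return None
    return fx * x / z + cx, fy * y / z + cy


# Example extrinsics: pure axis swap, sensors at the same position.
R = [[0.0, -1.0, 0.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]]
T = [0.0, 0.0, 0.0]
K = dict(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)  # hypothetical 1080p intrinsics

print(project_to_image((10.0, 0.0, 0.0), R, T, **K))  # (960.0, 540.0)
print(project_to_image((10.0, 1.0, 0.0), R, T, **K))  # (860.0, 540.0)
print(project_to_image((-5.0, 0.0, 0.0), R, T, **K))  # None
```

A reviewer overlay would project the corners of each 3D box this way and compare the resulting outline with the object visible in the image.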

The QA team used custom dashboards that surfaced error-prone classes and scenes with missing metadata. This approach reduced rework by 37%, freeing up more time for new scene annotation.
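A frame-to-frame continuity check can be sketched as a scan for tracks whose class label changes between consecutive observations. The annotation schema below (`track_id`, `frame`, `label`) is hypothetical and stands in for whatever the project's `.json` layer actually contained.

```python
from __future__ import annotations

from collections import defaultdict


def find_label_switches(annotations: list[dict]) -> dict[str, list[tuple[int, str, str]]]:
    """Report tracks whose class label changes partway through a trajectory.

    Returns {track_id: [(frame, previous_label, new_label), ...]}.
    """
    by_track = defaultdict(list)
    for ann in annotations:
        by_track[ann["track_id"]].append((ann["frame"], ann["label"]))

    switches = {}
    for track_id, observations in by_track.items():
        observations.sort()  # order by frame index
        changes = [
            (frame, prev_label, label)
            for (_, prev_label), (frame, label) in zip(observations, observations[1:])
            if label != prev_label
        ]
        if changes:
            switches[track_id] = changes
    return switches


sample = [
    {"track_id": "t1", "frame": 0, "label": "cyclist"},
    {"track_id": "t1", "frame": 1, "label": "cyclist"},
    {"track_id": "t1", "frame": 2, "label": "pedestrian"},
    {"track_id": "t2", "frame": 0, "label": "car"},
    {"track_id": "t2", "frame": 1, "label": "car"},
]
print(find_label_switches(sample))  # {'t1': [(2, 'cyclist', 'pedestrian')]}
```

Flagged tracks are candidates for review rather than automatic corrections, since a cyclist who dismounts is a legitimate label change.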

Labeling Strategy: Segment Smart, Not Hard 🧠

Full-scene manual segmentation would’ve required thousands of hours — unscalable. So the team adopted a mixed strategy:

Semantic + Instance Hybrid

- Semantic segmentation was used for drivable areas, sidewalks, and bike paths

- Instance segmentation was used for dynamic objects like people and cars

Region-of-Interest (ROI) Prioritization

Instead of annotating the full 360° sweep, the team focused on the front 120° cone, which matched the scooter’s navigation priority.

This significantly reduced labor hours without affecting model performance.
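Restricting a point cloud to a forward cone is a simple azimuth test. The sketch below assumes a vehicle frame with x pointing forward and y to the left; the function names are illustrative.

```python
import math


def in_front_cone(x: float, y: float, fov_deg: float = 120.0) -> bool:
    """True if a point lies within the forward cone centred on the +x axis."""
    azimuth = math.degrees(math.atan2(y, x))  # 0 degrees = straight ahead
    return abs(azimuth) <= fov_deg / 2


def filter_roi(points, fov_deg=120.0):
    """Keep only the (x, y, z) points inside the forward cone."""
    return [p for p in points if in_front_cone(p[0], p[1], fov_deg)]


cloud = [(10.0, 0.0, 0.0), (1.0, 2.0, 0.0), (-5.0, 0.0, 0.0), (5.0, -5.0, 0.3)]
print(filter_roi(cloud))  # [(10.0, 0.0, 0.0), (5.0, -5.0, 0.3)]
```

A 120° cone spans one third of the full 360° azimuth range, which is where the reduction in annotation area comes from.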

Smart Use of Pre-Labels and Model-Assisted QA ✅

To maintain accuracy without inflating costs, the team leveraged pre-trained AI models to generate rough masks and bounding boxes.

Here's how it worked:

- Mask R-CNN was used on camera frames

- Semantic scene completion models guided missing LiDAR patches

- Annotators received suggestions — not answers — for each frame

A separate QA layer validated label consistency across temporal frames. This reduced annotation revisions by 40%, speeding up delivery by nearly 3 weeks.

Label Governance: Revisions, Edge Cases, and Versioning 🔁

Urban environments throw curveballs: kids running, parked scooters, reflective glass, moving shadows. So, a versioning strategy was implemented.

Label Versioning System

- v1.0: MVP delivery with known limitations

- v1.1: Included extra segmentation for edge cases flagged in QA

- v2.0: Post-deployment feedback integrated into AI model retraining

Changes were tracked in a Git-based system with scene IDs and reviewer notes. Clients could trace any label back to the original annotator and QA reviewer.
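One way to make that traceability concrete is a small provenance record stored alongside the labels. The schema below is a hypothetical sketch, not the project's actual format; the field names and example values are invented for illustration.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LabelRevision:
    scene_id: str
    label_version: str
    annotator: str
    qa_reviewer: str
    note: str


def to_changelog_line(rev: LabelRevision) -> str:
    """Serialize one revision as a single JSON line for a Git-tracked log."""
    return json.dumps(asdict(rev), sort_keys=True)


# Placeholder values only.
revision = LabelRevision(
    scene_id="scene-0042",
    label_version="v1.1",
    annotator="annotator-07",
    qa_reviewer="reviewer-02",
    note="Added segmentation for reflective storefront flagged in QA.",
)
print(to_changelog_line(revision))
```

One JSON object per line keeps diffs small and readable in Git, so each label change shows up as a single added line.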

Lessons Learned: What Future Teams Should Know 🧩

Every AI project teaches more than it solves. Here's what came out of this one:

✅ What Worked

- Hybrid annotation strategies cut hours without cutting corners

- Model-assisted labeling reduced fatigue and increased throughput

- Early feedback loops with AI engineers prevented dataset misalignment

⚠️ What Didn’t Work (at First)

- Full-scene labeling ambition broke under real-world constraints

- Sensor drift required more frequent recalibration than expected

- Narrow alleys created GPS shadow zones — mitigated only with IMU corrections

🔁 What Changed

The startup originally imagined a one-size-fits-all dataset. But they learned to prioritize critical perception zones, and plan for multiple dataset versions that evolve with the AI stack.

Impact: Beyond the Dataset 📈

This dataset wasn’t just a deliverable. It became the foundation of the startup’s AI pipeline.

- Enabled the training of object tracking and obstacle avoidance models

- Served as demo material for investor pitches and grant applications

- Was reused for internal testing in 3 new cities the startup expanded into

Most importantly, it gave the startup a proprietary edge. Unlike open-source sets, this one reflected their environment, their vehicles, and their use case.

Wrapping Up: Building Smarter, Not Just Bigger 🚀

Creating a LiDAR fusion dataset in an urban context is no small feat. But with smart scope management, pre-labeling workflows, and precise calibration strategies, even a small team can deliver a dataset that punches above its weight.

If your AI system needs to "see" the world the way your product does — don’t rely on generic datasets. Build your own, strategically.

Looking to build a multi-modal dataset tailored to your mobility or robotics project? Let’s talk about how we can help — from capture to QA.

👉 Reach out to DataVLab and let’s co-create the future of machine perception.