Introduction: Why Multi-Class Tumor Segmentation Is Critical to Healthcare

In recent years, artificial intelligence (AI) has emerged as a transformative force in oncology, enabling earlier cancer detection, faster diagnosis, and more personalized treatment. At the core of these advancements lies one essential task: tumor segmentation.

But real-world medical data is complex, and tumors are rarely homogeneous. A single scan may contain several tumor types, grades, shapes, and tissue structures, and modeling them accurately requires multi-class segmentation.

Unlike binary segmentation, which classifies each pixel as tumor or non-tumor, multi-class tumor segmentation distinguishes between tumor subregions, such as:

- Tumor core

- Necrotic area

- Edema

- Infiltrating margin

- Cystic components

- Specific tumor subtypes (e.g., glioblastoma vs. oligodendroglioma)

This article is a practical guide to the challenges, annotation workflows, tools, and AI strategies involved in multi-class tumor segmentation. Whether you're building a clinical model or annotating whole-slide images (WSIs) in pathology, it will help you design scalable, accurate workflows that meet real-world needs.

1. What Is Multi-Class Tumor Segmentation?

💡 Definition

Multi-class tumor segmentation is the process of labeling multiple tumor-related regions in a medical image, each with a distinct class label. Instead of producing a single binary mask, the AI model assigns every pixel (or, in 3D, every voxel) one of several class labels, so each region is identified by its own class.

For example, a segmented brain MRI might include:

- Class 0: Background

- Class 1: Enhancing tumor core

- Class 2: Edema

- Class 3: Necrotic tissue
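Concretely, a multi-class mask is just an array holding one integer class ID per pixel. The minimal sketch below assumes plain Python lists and a made-up 4×5 slice purely for illustration (real pipelines typically load NumPy arrays from NIfTI or DICOM files) and counts how many pixels fall into each class:

```python
from collections import Counter

NUM_CLASSES = 4  # illustrative IDs: 0 = background, 1-3 = tumor subregions

def class_counts(label_map, num_classes=NUM_CLASSES):
    """Count the pixels assigned to each class ID in a 2D label map."""
    counts = Counter(label for row in label_map for label in row)
    unknown = set(counts) - set(range(num_classes))
    if unknown:
        raise ValueError(f"unexpected class IDs: {sorted(unknown)}")
    # Report every class, including ones absent from this slice
    return {c: counts.get(c, 0) for c in range(num_classes)}

# Hypothetical 4x5 slice: exactly one integer class ID per pixel
slice_labels = [
    [0, 0, 2, 2, 0],
    [0, 2, 1, 1, 2],
    [0, 2, 1, 3, 2],
    [0, 0, 2, 2, 0],
]
print(class_counts(slice_labels))  # → {0: 8, 1: 3, 2: 8, 3: 1}
```

Per-class counts like these are also a quick way to spot the class imbalance discussed in Section 3.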

🧠 Why It’s Important

- Provides a granular understanding of tumor structure

- Enables volume-based treatment planning (e.g., radiation targeting)

- Supports tumor grading and progression monitoring

- Useful in multi-modal imaging (CT, MRI, PET), where different tumor components appear differently in each modality

📚 Learn how multi-class segmentation enabled brain tumor monitoring in the BraTS Challenge

2. Imaging Modalities Used in Multi-Class Segmentation

Multi-class tumor segmentation is used across several imaging modalities:

🧠 MRI (Magnetic Resonance Imaging)

Common for soft-tissue tumors (e.g., gliomas, prostate cancer). Different sequences (T1, contrast-enhanced T1, T2, FLAIR) highlight different tumor subregions.

🩻 CT (Computed Tomography)

Used for lung, liver, and colorectal tumors. Offers lower soft-tissue contrast than MRI but is widely accessible.

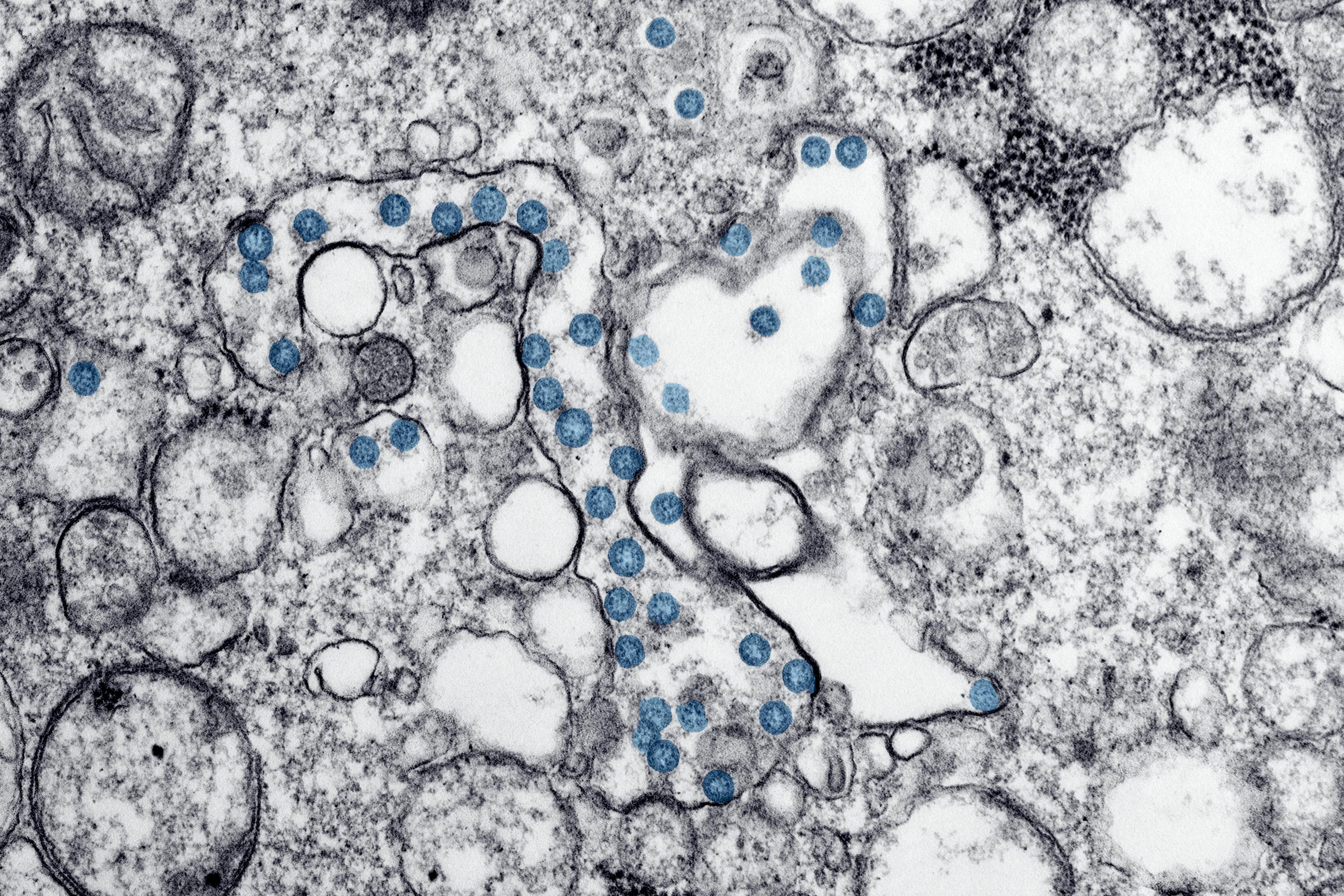

🧫 Histopathology (WSIs)

Used in breast, skin, and prostate cancers. Enables high-resolution segmentation of cell-level tumor components.

🔬 PET-CT / PET-MRI

Adds metabolic information, crucial for defining active tumor regions (e.g., FDG uptake in lymphoma).

🧾 For an open-source MRI dataset with multi-class tumor segmentation, explore BraTS 2021

3. Common Challenges in Multi-Class Tumor Segmentation

Despite its power, multi-class segmentation presents several technical, medical, and operational challenges:

⚠️ 3.1. Class Imbalance

Some tumor classes, such as necrosis, may occupy less than 5% of the image volume, while edema and background dominate. This imbalance can bias the model toward the majority classes and cause it to miss small subregions.

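Solution: Use loss functions such as Dice loss, which scores overlap separately for each class so small classes count as much as large ones, or Focal loss, which down-weights easy, well-classified pixels so training concentrates on hard ones.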
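To see why Dice loss helps with imbalance, here is a minimal, framework-free sketch of a multi-class soft Dice loss. It assumes per-pixel class probabilities (for example, softmax outputs) and integer ground-truth labels, computes for each class c the score 2·Σ(p·g) / (Σp + Σg), and returns one minus the mean over classes. Production code would use a tensor implementation instead, such as the `DiceLoss` class in MONAI.

```python
def soft_dice_loss(probs, target, num_classes, eps=1e-6):
    """Multi-class soft Dice loss: 1 - mean over classes of the per-class Dice.

    probs:  one probability vector (length num_classes) per pixel
    target: one integer class label per pixel
    """
    dice_scores = []
    for c in range(num_classes):
        p = [pixel[c] for pixel in probs]              # predicted P(class c)
        g = [1.0 if t == c else 0.0 for t in target]   # one-hot ground truth
        intersection = sum(pi * gi for pi, gi in zip(p, g))
        # eps keeps the ratio defined (and equal to 1) when a class is absent everywhere
        dice_scores.append((2 * intersection + eps) / (sum(p) + sum(g) + eps))
    # Each class contributes equally, no matter how many pixels it covers
    return 1.0 - sum(dice_scores) / num_classes

target = [0, 0, 0, 1]                    # class 1 is a single rare "necrosis" pixel
always_background = [[1.0, 0.0]] * 4     # 75% of pixels correct...
print(round(soft_dice_loss(always_background, target, 2), 3))  # ...yet loss → 0.571
```

A model that ignores the rare class scores 75% pixel accuracy here but still incurs a large loss, which is exactly the pressure needed to learn small subregions.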

🧩 3.2. Visual Similarity Between Classes

Subregions like edema and non-enhancing tumor can appear nearly identical, especially in low-resolution scans.

Solution: Annotate using multiple imaging modalities or sequences (e.g., T2 or FLAIR for edema, T1-Gd for enhancement).

🩸 3.3. Annotation Ambiguity

Even experienced radiologists and pathologists may disagree on where one class ends and another begins.

Solution: Build a QA loop with adjudication, or compute inter-annotator agreement (e.g., Cohen’s Kappa).
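As an illustration, Cohen's Kappa compares how often two annotators agree with how often they would agree by chance alone. The minimal sketch below assumes each annotator's mask has been flattened into a list of per-pixel class IDs; the two short label lists are made up. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa between two annotators' per-pixel class labels (flattened masks)."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("label sequences must be non-empty and the same length")
    n = len(labels_a)
    # Observed agreement: fraction of pixels given the same class by both annotators
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's class frequencies, summed over classes
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both annotators used the same single class everywhere
    return (p_o - p_e) / (1 - p_e)

# Hypothetical flattened masks that disagree on one pixel
annotator_a = [0, 0, 1, 1, 2, 2, 0, 1]
annotator_b = [0, 0, 1, 2, 2, 2, 0, 1]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # → 0.814
```

Because background usually dominates a scan, pixel-wise kappa can look reassuringly high even when annotators disagree on a small subregion, so it is worth also reporting agreement per class.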

🧠 3.4. Multi-Modality Alignment

Images from different modalities (MRI, CT, PET) are often not spatially aligned, so a label drawn on one image may not match the same anatomy on another.

Solution: Use registration tools like ANTs or ITK to align modalities before annotation.

🖼️ 3.5. Data Volume and Processing

Gigapixel pathology slides and large volumetric scans require tiling, patch extraction, and hardware acceleration.

Solution: Use tile-based annotation platforms and hybrid CPU/GPU pipelines.
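Tiling is usually the first step in such pipelines. The minimal sketch below computes overlapping tile boxes for a large image; the 512-pixel tile size and 64-pixel overlap are arbitrary example values, not recommendations. Each box could then be read with a whole-slide reader (for example, OpenSlide's `read_region`) and annotated or processed independently.

```python
def tile_starts(length, tile, stride):
    """Start offsets along one axis so that tiles of size `tile` cover [0, length)."""
    starts = list(range(0, max(length - tile, 0) + 1, stride))
    # Add a final tile flush with the far edge if the regular grid stops short
    if starts[-1] + tile < length:
        starts.append(length - tile)
    return starts

def tile_coordinates(width, height, tile=512, overlap=64):
    """Yield (x, y, w, h) boxes of overlapping tiles covering a width x height image."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    stride = tile - overlap
    for y in tile_starts(height, tile, stride):
        for x in tile_starts(width, tile, stride):
            # Boxes are clipped only when the image is smaller than one tile
            yield x, y, min(tile, width - x), min(tile, height - y)

boxes = list(tile_coordinates(1200, 960))
print(len(boxes), boxes[:3])
```

The overlap gives each tile some context at its borders, so predictions near tile edges can be cropped or blended when the tiles are stitched back together.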

📚 This MIT paper discusses class imbalance in tumor segmentation and how synthetic augmentation helps.

4. Annotation Strategies for Multi-Class Tumor Segmentation

Precision begins with labeling. Here's how experts approach complex, multi-class tumor annotation:

🖊️ 4.1. Polygonal Annotation

Used when outlining irregular tumor shapes—especially helpful for histology or dermatopathology images.

- Ideal for non-rectangular tumor boundaries

- Labor-intensive but highly accurate

- Supported by tools like QuPath, Aiforia, and Labelbox
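Before training, polygon annotations are usually converted ("burned") into pixel masks. The sketch below shows one simple way to do this, using the even-odd (ray-casting) rule at each pixel center; the two square polygons and their class IDs are hypothetical. It assumes later annotations overwrite earlier ones, so the inner core is drawn after the surrounding edema. In practice, annotation tools can export masks directly, and scikit-image's `skimage.draw.polygon` performs the same rasterization efficiently.

```python
def point_in_polygon(px, py, polygon):
    """Even-odd (ray-casting) test: is point (px, py) inside the polygon?"""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray cast to the right of the point cross this edge?
        if (y1 > py) != (y2 > py):
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside

def rasterize(width, height, annotations):
    """Burn (class_id, polygon) annotations into a label mask; later ones win on overlap."""
    mask = [[0] * width for _ in range(height)]
    for class_id, polygon in annotations:
        for y in range(height):
            for x in range(width):
                if point_in_polygon(x + 0.5, y + 0.5, polygon):  # sample pixel centers
                    mask[y][x] = class_id
    return mask

# Hypothetical annotations: outer edema (class 2), inner core (class 1) drawn last
edema = [(1, 1), (7, 1), (7, 7), (1, 7)]
core = [(3, 3), (5, 3), (5, 5), (3, 5)]
mask = rasterize(8, 8, [(2, edema), (1, core)])
for row in mask:
    print(row)
```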

🟪 4.2. Semantic Segmentation (Pixel-wise Masks)

Each pixel is labeled with a class, making this method ideal for training CNNs on volumetric data.

- Common in MRI and CT slices

- Usually done using semi-automated workflows

- Mask arrays stored in formats like .npy, .png, or DICOM-SEG
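Stored masks like these usually hold one integer class ID per pixel, while many training losses (including Dice loss) expect one binary channel per class. A minimal plain-Python sketch of that conversion follows, using a made-up 2×2 mask; deep learning frameworks provide tensor versions (for example, PyTorch's `torch.nn.functional.one_hot`, which places the class axis last rather than first as here).

```python
def one_hot(label_mask, num_classes):
    """Turn a 2D integer label mask (H x W) into num_classes binary channels (C x H x W)."""
    height, width = len(label_mask), len(label_mask[0])
    channels = [[[0] * width for _ in range(height)] for _ in range(num_classes)]
    for y, row in enumerate(label_mask):
        for x, label in enumerate(row):
            if not 0 <= label < num_classes:
                raise ValueError(f"label {label} at ({y}, {x}) is outside 0..{num_classes - 1}")
            channels[label][y][x] = 1  # exactly one channel is set per pixel
    return channels

# Hypothetical 2x2 mask with classes 0, 1 and 2
mask = [[0, 1],
        [2, 1]]
for c, channel in enumerate(one_hot(mask, 3)):
    print(c, channel)
```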

🧩 4.3. 3D Volumetric Segmentation

Especially relevant for brain, liver, and lung cancers. Annotators work across slices to maintain class consistency in 3D space.

- Enables accurate tumor volume estimation

- Used in platforms like ITK-SNAP or 3D Slicer

- Exported in NIfTI or DICOM format
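Volume estimation from a 3D mask is simple arithmetic: the volume of class c is its voxel count multiplied by the physical volume of one voxel (s_x · s_y · s_z, in mm³). The voxel spacing comes from the image header; for NIfTI files, for example, nibabel exposes it via `img.header.get_zooms()`. The sketch below uses a synthetic volume and an assumed spacing of 1 × 1 × 2 mm.

```python
from collections import Counter

def class_volumes_ml(label_volume, spacing_mm):
    """Volume in millilitres of each non-background class in a [z][y][x] label volume.

    spacing_mm: (sx, sy, sz) physical voxel size in millimetres.
    """
    sx, sy, sz = spacing_mm
    voxel_mm3 = sx * sy * sz
    counts = Counter(v for plane in label_volume for row in plane for v in row)
    # volume = voxel count x voxel volume; 1 mL = 1000 mm^3
    return {label: n * voxel_mm3 / 1000.0 for label, n in sorted(counts.items()) if label != 0}

# Hypothetical 10-slice 20x20 volume: a 10x10 edema block (2) around a 4x4 core (1)
vol = [[[2 if 5 <= x < 15 and 5 <= y < 15 else 0 for x in range(20)]
        for y in range(20)] for z in range(10)]
for plane in vol:
    for y in range(8, 12):
        for x in range(8, 12):
            plane[y][x] = 1
print(class_volumes_ml(vol, spacing_mm=(1.0, 1.0, 2.0)))  # → {1: 0.32, 2: 1.68}
```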

📍 4.4. Instance Segmentation

Each tumor region is segmented and labeled as a separate instance, even when several regions belong to the same class. This is crucial for distinguishing individual lesions in multifocal tumors.
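One common way to derive instances from a semantic mask is connected-component labeling: touching pixels of the same class are treated as one lesion. The minimal breadth-first sketch below assumes 4-connectivity (8-connectivity, or 6/26-connectivity in 3D, are also used) and a made-up mask; `scipy.ndimage.label` provides an optimized equivalent. Lesions that touch will merge under this approach, which is one reason instance annotations are often refined by hand.

```python
from collections import deque

def label_instances(mask, target_class):
    """Split one semantic class into instances (4-connected components).

    Returns (instance_map, count): 0 marks other pixels, 1..count mark instances.
    """
    h, w = len(mask), len(mask[0])
    instances = [[0] * w for _ in range(h)]
    count = 0
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] != target_class or instances[sy][sx]:
                continue
            count += 1  # unvisited pixel of the class: start a new instance
            instances[sy][sx] = count
            queue = deque([(sy, sx)])
            while queue:  # flood-fill everything connected to the seed pixel
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny][nx] == target_class and not instances[ny][nx]):
                        instances[ny][nx] = count
                        queue.append((ny, nx))
    return instances, count

# Hypothetical mask with two separate lesions of the same class (1)
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 1, 1],
]
instances, n = label_instances(mask, target_class=1)
print(n)  # → 2
for row in instances:
    print(row)
```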

🔁 4.5. Model-in-the-Loop Annotation

AI provides initial segmentations, which human annotators then correct. Ideal for speeding up annotation of large multi-class datasets.

- Feedback loop enhances future predictions

- Used in tools like Encord Active or Supervisely Auto-Labeling

- Reduces time by up to 60% in complex datasets

📘 Read this DeepMind case study on model-in-loop annotation in breast cancer AI.

5. Tools and Platforms Supporting Multi-Class Tumor Labeling

Here are battle-tested tools that support multi-class segmentation workflows across medical imaging types:

💻 Tool Examples:

- 3D Slicer – Open-source; ideal for 3D MRI/CT tumor annotation

- ITK-SNAP – Intuitive GUI for 3D medical image segmentation

- QuPath – WSI-focused, used in histopathology

- Labelbox / Encord – Enterprise-grade with AI-assisted labeling

- MONAI Label – Real-time AI model-in-the-loop annotation

🔗 For DICOM-native tools, see the OHIF Viewer used in research hospitals worldwide.

6. AI Model Architectures for Multi-Class Tumor Segmentation

To perform multi-class segmentation, you'll need models that output a score for every class at each pixel or voxel. Here are some popular architectures:
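Whatever the architecture, the final step is usually the same: a softmax turns each pixel's class scores into probabilities that sum to 1, and the highest-probability class becomes that pixel's label. A minimal sketch with a hypothetical 2×2 output for 4 classes (IDs 0-3) follows.

```python
import math

def softmax(scores):
    """Numerically stable softmax over one pixel's class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_mask(logit_map):
    """logit_map[y][x] holds C raw class scores; return one class ID per pixel."""
    mask = []
    for row in logit_map:
        labels = []
        for scores in row:
            probs = softmax(scores)  # mutually exclusive classes: probabilities sum to 1
            labels.append(max(range(len(probs)), key=probs.__getitem__))  # argmax
        mask.append(labels)
    return mask

# Hypothetical raw model output: 2x2 pixels, 4 class scores each
logits = [
    [[2.0, 0.1, -1.0, 0.0], [0.0, 3.0, 0.5, 0.2]],
    [[0.1, 0.2, 2.5, 0.3], [-1.0, 0.0, 0.1, 1.7]],
]
print(predict_mask(logits))  # → [[0, 1], [2, 3]]
```

The argmax of the softmax equals the argmax of the raw scores; the softmax matters for training (cross-entropy) and for confidence estimates. When subregions are defined as overlapping regions rather than exclusive classes (BraTS, for instance, evaluates the nested whole tumor, tumor core, and enhancing tumor regions), a per-class sigmoid, which is true multi-label output, is the usual alternative.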

🔬 6.1. U-Net and Variants

- U-Net, Attention U-Net, U-Net++

- Great for biomedical segmentation (2D and 3D)

🧠 6.2. DeepLabV3+

- Uses atrous convolutions for multi-scale segmentation

- Performs well on multi-class tissue prediction

🧬 6.3. nnU-Net

- Self-configuring framework; was a top performer in BraTS and KiTS competitions

- Excellent for brain and kidney tumor segmentation

📦 6.4. Transformers for Segmentation

- Models like SegFormer or TransUNet use self-attention to capture long-range dependencies

- Useful in histopathology where fine-grained texture matters

🧪 Check out MONAI for PyTorch-based tumor segmentation pipelines.

7. Clinical and Research Use Cases

🧠 7.1. Brain Tumor (Glioma, GBM)

- Segmentation of core, edema, necrosis

- Tracks tumor progression over time

- Benchmarked in the BraTS and FeTS challenges

🩺 7.2. Prostate Cancer

- Multi-class Gleason grading

- Core vs. periphery segmentation

- Used in AI-powered pathology tools

🫁 7.3. Lung Cancer

- Differentiates solid, part-solid, and ground-glass opacities

- Tracks response to targeted therapies

🧫 7.4. Breast Cancer

- IDC vs. DCIS classification

- Tumor vs. stroma vs. immune cell segmentation

- Crucial for HER2 and ER testing workflows

🔬 7.5. Liver and Pancreatic Tumors

- Multi-phase CT segmentation

- Portal venous vs. arterial phase analysis

📖 TCGA and TCIA are valuable sources of tumor imaging data, some of it with multi-class annotations.

8. Quality Assurance and Compliance Considerations

- Use consensus annotation from multiple specialists

- Track inter-annotator agreement (Cohen’s Kappa, Dice score)

- Ensure de-identification per HIPAA guidelines

- Follow GDPR for any EU-based patient imaging

✅ Build automated QA checks into your pipeline to reduce manual review errors.
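As an example of such automated checks, the sketch below validates two annotators' masks for the same image: it first checks structure (mask shape, allowed labels), then flags classes whose inter-annotator Dice score falls below a threshold. The masks are made up, and the 0.7 threshold is a hypothetical value; an acceptable level depends on the class and the task.

```python
def dice_for_label(mask_a, mask_b, label):
    """Dice overlap of one class between two masks (1.0 if neither contains it)."""
    a = {(y, x) for y, row in enumerate(mask_a) for x, v in enumerate(row) if v == label}
    b = {(y, x) for y, row in enumerate(mask_b) for x, v in enumerate(row) if v == label}
    if not a and not b:
        return 1.0
    return 2 * len(a & b) / (len(a) + len(b))

def qa_report(mask_a, mask_b, image_shape, allowed_labels, min_dice=0.7):
    """Return a list of problems found in two annotators' masks (empty list = passed)."""
    problems = []
    height, width = image_shape
    for name, mask in (("annotator A", mask_a), ("annotator B", mask_b)):
        # Structural checks: mask must match the image and use only known labels
        if len(mask) != height or any(len(row) != width for row in mask):
            problems.append(f"{name}: mask shape does not match image {image_shape}")
            continue
        unexpected = {v for row in mask for v in row} - set(allowed_labels)
        if unexpected:
            problems.append(f"{name}: unexpected labels {sorted(unexpected)}")
    if problems:
        return problems  # agreement is meaningless until the structure is fixed
    # Agreement check: flag tumor classes where the annotators diverge too much
    for label in allowed_labels:
        if label == 0:
            continue  # skip background
        score = dice_for_label(mask_a, mask_b, label)
        if score < min_dice:
            problems.append(f"label {label}: inter-annotator Dice {score:.2f} < {min_dice}")
    return problems

# Hypothetical masks: annotator B drew a smaller class-2 region
a = [[0, 1, 1], [0, 2, 2], [0, 0, 0]]
b = [[0, 1, 1], [0, 2, 0], [0, 0, 0]]
print(qa_report(a, b, (3, 3), allowed_labels=[0, 1, 2]))
# → ['label 2: inter-annotator Dice 0.67 < 0.7']
```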

9. Trends & The Future of Multi-Class Tumor Segmentation

🌐 Federated Learning

Hospitals train AI models on their local multi-class tumor data and share only model updates, never the raw patient images, which protects patient privacy.
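At the core of many federated setups is federated averaging (FedAvg): each site trains on its own data, and a coordinating server averages the resulting model parameters, weighted by how many cases each site holds. The sketch below shows only that aggregation step, with parameters as plain lists and made-up values for two hypothetical hospitals; real frameworks add secure communication, repeated training rounds, and often privacy protections on the updates themselves.

```python
def federated_average(site_weights, site_sizes):
    """FedAvg-style aggregation of model parameters from several hospitals.

    site_weights: one dict per site mapping parameter name -> flat list of floats
    site_sizes:   number of local training cases at each site (the averaging weights)
    """
    if not site_weights or len(site_weights) != len(site_sizes):
        raise ValueError("need at least one site and one size per site")
    total = sum(site_sizes)
    global_weights = {}
    for name, values in site_weights[0].items():
        global_weights[name] = [
            # Sites with more cases pull the shared model further toward their update
            sum(w[name][i] * n for w, n in zip(site_weights, site_sizes)) / total
            for i in range(len(values))
        ]
    return global_weights

# Only these parameter updates leave each hospital; images and masks stay local
hospital_a = {"conv1": [1.0, 2.0]}
hospital_b = {"conv1": [3.0, 4.0]}
print(federated_average([hospital_a, hospital_b], site_sizes=[100, 300]))
# → {'conv1': [2.5, 3.5]}
```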

🧪 Synthetic Data

GANs and diffusion models are used to create synthetic tumor samples for underrepresented classes.

🧠 Weak Supervision

Uses rough annotations or slide-level tags to generate pixel-level segmentations, reducing annotation cost.

🧩 Stain Transfer & Normalization

Ensures AI generalizes across histopathology slides with different color profiles.
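A widely used classical approach is Reinhard-style color normalization, which shifts and rescales each color channel so its mean and standard deviation match those of a reference slide. The sketch below is a simplified variant that works directly in RGB (Reinhard's method operates in the lαβ color space), with pixels as plain tuples and made-up reference statistics. Methods such as Macenko normalization go further and estimate the stain vectors themselves.

```python
import statistics

def normalize_stain(pixels, target_means, target_stds):
    """Match each color channel's mean and std to reference values from a target slide.

    pixels: list of (r, g, b) tuples, values 0-255.
    Simplified: works in RGB, whereas Reinhard's method works in the l-alpha-beta space.
    """
    channels = []
    for c in range(3):
        values = [p[c] for p in pixels]
        mean = statistics.fmean(values)
        std = statistics.pstdev(values) or 1.0  # flat tile: avoid dividing by zero
        channels.append([
            # Standardize, rescale to the target statistics, then clip to the valid range
            min(255.0, max(0.0, (v - mean) / std * target_stds[c] + target_means[c]))
            for v in values
        ])
    return list(zip(*channels))

# Hypothetical tile and reference statistics
tile = [(200, 100, 150), (220, 120, 170), (180, 80, 130)]
normalized = normalize_stain(tile, target_means=(190, 110, 160), target_stds=(10, 10, 10))
print([tuple(round(v, 1) for v in px) for px in normalized])
```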

🔬 Explore the Federated Tumor Segmentation (FeTS) Challenge to see federated learning in action.

📌 Work with Experts in Multi-Class Tumor Segmentation

Need help building or annotating multi-class tumor segmentation datasets?

At DataVLab, we specialize in:

- Tumor annotation in radiology and pathology

- Model-in-loop segmentation workflows

- 3D volumetric labeling and QA

- Compliant, expert-led data pipelines

📩 Contact us to accelerate your medical AI roadmap with expert-annotated, high-performance tumor data.