The Landscape of Autonomous Driving Is Not One-Size-Fits-All

Building a safe and reliable autonomous vehicle (AV) means preparing it to operate in all kinds of environments—from traffic-dense downtowns to remote farm roads. But training AI perception models for such versatility starts with one key step: scene annotation.

Annotation involves labeling objects and contextual elements in camera images or sensor data. These labels teach the AI what to look for and how to interpret its surroundings. However, the complexity and semantics of what needs to be labeled shift drastically between urban and rural scenes.

That’s why annotation strategies must evolve with the landscape.

Why This Matters: Context Is Everything 🧠

Urban and rural environments differ not just in what appears on the road, but in how things behave, how often they change, and how interpretable the scenes are to an AI system. Without precise annotation strategies tailored to each setting, datasets risk becoming skewed or incomplete, leading to poor generalization in production models.

Let’s break down how and why.

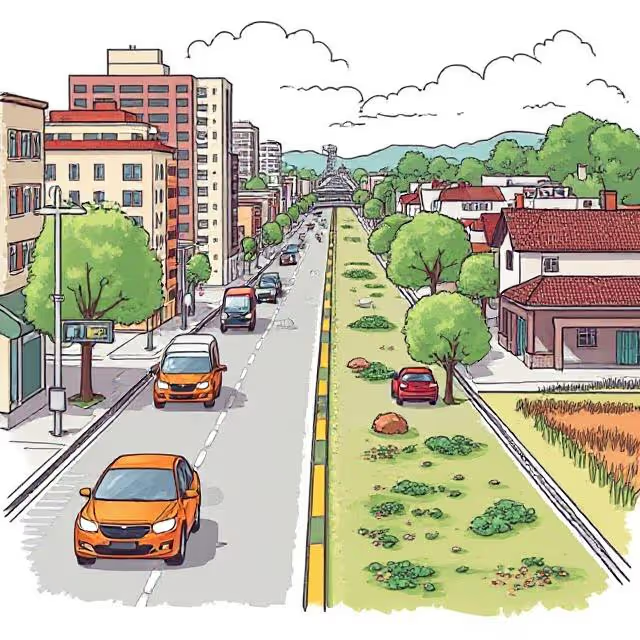

Scene Complexity in Urban Environments 🏙️

Urban environments present some of the most challenging visual and contextual scenarios for autonomous vehicles and data annotators alike. Far from being straightforward, these settings contain an overwhelming density of objects, unpredictable movement patterns, and ever-changing infrastructure.

High Object Density and Overlap

A single frame in a downtown environment might contain:

- Dozens of vehicles with varying motion states (stopped, turning, parking)

- Pedestrians crossing at and outside of designated zones

- Delivery workers on bikes and scooters zigzagging between lanes

- Dogs on leashes, shopping carts, baby strollers — often close to or within the street

These objects often occlude one another. For instance, a stroller might be partially hidden behind a parked SUV, or a cyclist could vanish momentarily behind a bus. Annotators must make precise judgments about object boundaries and visibility. Depth ordering is hard to judge, especially in 2D image datasets, where occlusion makes it ambiguous whether a bounding box or mask should cover only the visible pixels or the object's estimated full extent.
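One way to make occlusion judgments explicit is to store both the estimated full-extent box and the visible box, then derive a visible fraction from the two. The sketch below assumes that convention. The `Box` type, the bucket names, and the 0.8 / 0.4 thresholds are illustrative and not taken from any particular dataset specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def visible_fraction(full_extent: Box, visible: Box) -> float:
    """Share of the estimated full-extent box that is actually visible."""
    full_area = full_extent.area()
    if full_area == 0:
        return 0.0
    return min(1.0, visible.area() / full_area)


def occlusion_level(fraction: float) -> str:
    """Map a visible fraction to a coarse bucket (thresholds are illustrative)."""
    if fraction >= 0.8:
        return "fully_visible"
    if fraction >= 0.4:
        return "partly_occluded"
    return "largely_occluded"


# A stroller whose left half is hidden behind a parked SUV.
stroller_full = Box(100, 200, 160, 280)     # annotator's estimate of the full extent
stroller_visible = Box(130, 200, 160, 280)  # pixels actually visible
fraction = visible_fraction(stroller_full, stroller_visible)
print(fraction, occlusion_level(fraction))  # 0.5 partly_occluded
```

Storing both boxes keeps the annotator's judgment auditable: a reviewer can see what was assumed to be hidden, not only the final bucket.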

Architectural and Lighting Complexity

Urban canyons formed by tall buildings cause:

- Sharp shadow contrasts, confusing object detection algorithms

- Reflective surfaces (e.g., glass facades) that can mirror objects, leading to ghost detections

- Variable lighting from neon signs, headlights, and traffic signals that change by the second

Annotation must include context clues, such as whether a pedestrian is within a shadowed area or whether reflections are present in a scene, because both affect how AI models interpret visibility and motion.

Chaotic Micro-interactions

Cities rarely follow strict road etiquette. Annotators may encounter:

- Taxi doors swinging open unexpectedly into bike lanes

- Skateboarders riding in traffic

- Food trucks double parked next to fire hydrants

- Police or emergency vehicles running sirens and swerving unpredictably

Capturing these real-world anomalies requires frame-by-frame attention and sometimes annotating behavioral cues (e.g., sudden deceleration, hazard light activation).

Infrastructure Overload

Urban spaces feature overlapping road systems: bike lanes, bus-only lanes, tram tracks, parking lanes, and pedestrian zones often intersect. Each of these needs its own label, boundary, and sometimes class hierarchy (e.g., active vs inactive lanes). There’s also the need to capture regulatory elements:

- Road signs (some partially obstructed)

- Temporary construction signage or cones

- Digital traffic signs or LED indicators

If these elements are missed, the model may misinterpret priority rules or traffic constraints — a costly mistake in real-world driving.
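The lane class hierarchy mentioned above can be sketched as a simple parent map. All label names here are hypothetical; a real taxonomy would come from the project's labeling guidelines.

```python
from typing import Dict, List, Optional

# Hypothetical taxonomy: child label -> parent label (None marks the root).
LANE_TAXONOMY: Dict[str, Optional[str]] = {
    "lane": None,
    "bike_lane": "lane",
    "bus_lane": "lane",
    "tram_track": "lane",
    "parking_lane": "lane",
    "bus_lane_active": "bus_lane",
    "bus_lane_inactive": "bus_lane",
}


def ancestors(label: str) -> List[str]:
    """Return the chain of parents from `label` up to the root."""
    chain: List[str] = []
    parent = LANE_TAXONOMY[label]
    while parent is not None:
        chain.append(parent)
        parent = LANE_TAXONOMY[parent]
    return chain


def is_a(label: str, candidate_parent: str) -> bool:
    """True if `label` equals `candidate_parent` or descends from it."""
    return label == candidate_parent or candidate_parent in ancestors(label)


print(ancestors("bus_lane_inactive"))  # ['bus_lane', 'lane']
print(is_a("bike_lane", "lane"))       # True
```

A hierarchy like this lets a model be trained or evaluated at a coarse level (`lane`) while annotators still record the finer distinction (`bus_lane_inactive`).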

The Quiet Complexity of Rural Scenes 🌾

While rural scenes might appear “cleaner” because there is less visible congestion, they introduce a completely different set of difficulties that make them as challenging to annotate and model for AV systems, if not more so.

Lack of Delimiters and Structure

In rural areas, clear road markings are often absent:

- No painted lane dividers or edge lines

- Road shoulders may blend into grassy fields or ditches

- Drivable space isn’t always obvious to the human eye, let alone an AI

Annotators are forced to make subjective decisions about what constitutes the road boundary. These decisions need consistency across thousands of frames, which is hard to maintain without precise labeling guidelines.
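A rough way to check whether two annotators agree on a road boundary is to compare their drivable-area masks with intersection over union (IoU). This is a minimal sketch on tiny binary grids. The 0.8 review threshold is an assumption, not an established standard.

```python
from typing import List

Mask = List[List[int]]  # 1 = drivable, 0 = not drivable


def mask_iou(a: Mask, b: Mask) -> float:
    """Intersection over union of two binary masks of the same shape."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(a, b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            if pixel_a and pixel_b:
                intersection += 1
            if pixel_a or pixel_b:
                union += 1
    # Two empty masks agree perfectly.
    return intersection / union if union else 1.0


annotator_1 = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [1, 1, 1, 1],
]
annotator_2 = [
    [0, 1, 1, 1],
    [0, 1, 1, 0],
    [0, 1, 1, 1],
]

REVIEW_THRESHOLD = 0.8  # hypothetical cut-off for sending a frame to review
iou = mask_iou(annotator_1, annotator_2)
needs_review = iou < REVIEW_THRESHOLD
print(round(iou, 3), needs_review)
```

Frames that fall below the threshold are good candidates for new edge-case examples in the labeling guidelines.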

Unusual Obstacles and Road Users

Rural areas introduce atypical but high-risk objects:

- Tractors, combine harvesters, and horse-drawn carts

- Wildlife like deer, boars, or dogs crossing unpredictably

- Stationary hay bales, fallen tree branches, or irrigation pipes

These objects are rare in most training datasets, yet they pose significant risk. Annotators must label them even when they’re visually faint, partially obstructed, or far from the vehicle, since AVs must react to them well in advance.

Environmental Extremes and Terrain Diversity

Rural settings often experience:

- Steep gradients, potholes, and winding paths

- Unpaved roads, gravel, mud, sand, or snow-covered surfaces

- Seasonal changes that make the same scene look dramatically different month to month

A road that is lined with thick vegetation in summer may be covered with ice and reflective snow glare in winter. Annotators may need to reclassify scene elements based on time-of-year context, which is rarely necessary in urban data.

Informal Infrastructure and Behavior

Many rural areas feature:

- Makeshift signage (e.g., handwritten signs or symbols painted on barns)

- Informal intersections without stop signs

- Road-sharing between vehicles, pedestrians, and livestock

This introduces a cultural and regional dependency to annotation. For instance, a local path may function as a road but won’t be marked on any map or have formal signage. Annotators need both local understanding and a way to encode these “informal semantics” in structured label formats.
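One possible way to carry such informal semantics in a structured format is a free-form attribute map next to the geometric label. The record below is a sketch only. The `RoadAnnotation` type and every attribute name (`formal_road`, `on_map`, `signage`, `shared_with`) are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Tuple


@dataclass
class RoadAnnotation:
    label: str
    polygon: List[Tuple[float, float]]  # image-space vertices
    attributes: Dict[str, object] = field(default_factory=dict)


# An unmapped farm track that locals use as a road.
farm_track = RoadAnnotation(
    label="drivable_area",
    polygon=[(0, 400), (640, 400), (640, 480), (0, 480)],
    attributes={
        "formal_road": False,        # no official designation
        "on_map": False,             # absent from map data
        "signage": "handwritten",    # makeshift sign at the entrance
        "shared_with": ["pedestrians", "livestock"],
    },
)

record = json.dumps(asdict(farm_track), sort_keys=True)
print(record)
```

Keeping the geometry label standard (`drivable_area`) and pushing the regional detail into attributes means downstream tooling does not need a new class for every local convention.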

Annotation Priorities by Environment

Different geographies change what matters most in your labels.

Urban Priorities:

- Crosswalks, pedestrian zones

- Traffic light states

- Vehicle interactions in congestion

- Street signs and lane designations

- Sidewalk vs. road delineation

Rural Priorities:

- Drivable area segmentation (in absence of clear lanes)

- Wildlife detection (e.g., bounding boxes for deer)

- Terrain labeling (pavement, gravel, mud)

- Road edge or drop-off awareness

- Farm vehicles and atypical obstacles

Without adjusting label classes accordingly, rural data risks being oversimplified and under-informative.
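The priority lists above can be turned into a simple batch check that reports which priority classes never appear in a labeled batch. The class names are hypothetical renderings of the bullets above, not a standard label set.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical class names derived from the urban and rural priority lists.
PRIORITY_CLASSES: Dict[str, Set[str]] = {
    "urban": {
        "crosswalk",
        "pedestrian_zone",
        "traffic_light_state",
        "street_sign",
        "lane_designation",
        "sidewalk",
    },
    "rural": {
        "drivable_area",
        "wildlife",
        "terrain_type",
        "road_edge",
        "farm_vehicle",
    },
}


def missing_priority_classes(environment: str, labels_in_batch: Iterable[str]) -> List[str]:
    """Priority classes for `environment` that the batch never uses."""
    return sorted(PRIORITY_CLASSES[environment] - set(labels_in_batch))


rural_batch_labels = ["drivable_area", "wildlife", "vehicle"]
print(missing_priority_classes("rural", rural_batch_labels))
# ['farm_vehicle', 'road_edge', 'terrain_type']
```

A missing class is not automatically an error, since a batch may simply contain no farm vehicles, but a class that is absent across many rural batches suggests the taxonomy or the guidelines need attention.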

Bias in Dataset Composition

Many leading datasets (e.g., Cityscapes, KITTI, nuScenes) were collected mostly in and around cities, so rural scenes are sparse and under-annotated. This creates hidden risks:

- Overfitting to structured environments

- Failing edge-case detection in real-world deployments

- Perception confidence thresholds tuned on busy intersections that are poorly calibrated for empty roads

To build reliable AVs, teams must balance datasets not just by number of images but by:

- Environmental diversity

- Label complexity

- Time of day, weather, and seasonal variation

Synthetic data can help (e.g., scenes generated with the CARLA simulator), but only if it is tuned and checked to match real-world domain characteristics.
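As a rough illustration of balancing by more than image count, the sketch below groups frames into strata by environment and time of day, then assigns per-frame sampling weights so that each stratum contributes equally. The metadata keys and the equal-weight target are assumptions. A real project might prefer a different target distribution.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def stratum_weights(
    frames: List[Dict[str, str]],
    keys: Sequence[str] = ("environment", "time_of_day"),
) -> Dict[Tuple[str, ...], float]:
    """Per-frame sampling weight for each stratum so all strata weigh the same."""
    counts = Counter(tuple(frame[k] for k in keys) for frame in frames)
    total = len(frames)
    n_strata = len(counts)
    # Each stratum gets total / n_strata weight, split evenly over its frames.
    return {stratum: total / (n_strata * count) for stratum, count in counts.items()}


frames = (
    [{"environment": "urban", "time_of_day": "day"}] * 6
    + [{"environment": "urban", "time_of_day": "night"}] * 2
    + [{"environment": "rural", "time_of_day": "day"}]
    + [{"environment": "rural", "time_of_day": "night"}]
)

weights = stratum_weights(frames)
for stratum, weight in sorted(weights.items()):
    print(stratum, round(weight, 3))
```

Here the single rural night frame is sampled six times as often as any one urban daytime frame. Reweighting only compensates for imbalance; it does not replace collecting more rural data.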

Cultural and Regional Specificity Matters

A “rural road” in Sweden is not the same as one in India. Similarly:

- European city streets often lack center lines and have complex turn priorities

- In some regions, roads are shared with animals or have informal rules

Annotation strategies must be localized:

- Label taxonomies should account for regional signs and driving behaviors

- Annotators need training materials with culturally accurate examples

- Feedback loops with regional experts can prevent systemic mislabeling

🗺️ Localization is not just about translation—it's about interpreting context.

The Real Struggle: Label Consistency in a Messy World

Let’s say you train your AI with:

- Urban samples where sidewalks are clearly marked

- Rural samples with no sidewalk at all

What happens when the system sees a shoulder of the road? Is it:

- A drivable area?

- A walking path?

- Undefined terrain?

These ambiguities degrade model performance unless label ontologies and definitions are exhaustively clear and consistently applied.

Solutions:

- Regular cross-annotator audits (e.g., inter-annotator agreement checks)

- Clear labeling manuals with edge-case examples

- AI-assisted pre-labeling to reduce human drift
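One way to put a number on label consistency is an inter-annotator agreement score such as Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below applies it to the road-shoulder example. The label strings and the sample data are made up for illustration.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    # Chance agreement: probability both pick the same class independently.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical class throughout
    return (observed - expected) / (1.0 - expected)


# Six road-shoulder regions labeled by two annotators (made-up data).
annotator_a = ["drivable", "drivable", "walking_path", "undefined", "drivable", "undefined"]
annotator_b = ["drivable", "walking_path", "walking_path", "undefined", "drivable", "drivable"]

kappa = cohens_kappa(annotator_a, annotator_b)
print(round(kappa, 3))  # 0.478
```

Raw agreement here is 4 out of 6, but kappa is only about 0.48 once chance agreement is removed, which is the kind of gap that a clearer shoulder definition in the labeling manual is meant to close.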

People Matter: Why Annotator Expertise Counts

Your annotators aren’t just “clickers”—they’re your model’s first teachers.

When dealing with complex environments:

- Provide role-based training (e.g., urban vs. rural specialists)

- Show real driving footage for context comprehension

- Involve them in feedback loops with your model performance team

Crowdsourced labeling with no domain filtering can result in:

- Misclassification of terrain or signage

- Missed edge-case events

- Unreliable model behavior downstream

Blended Training for Real-World Adaptability

Rather than train separate models for each environment, aim for adaptive perception systems. This involves:

- Curriculum learning: Training the model to progress from easy (urban daytime) to hard (rural night fog)

- Domain adaptation: Using techniques such as image-to-image translation to narrow the visual gap between urban and rural imagery during training

- Scene-aware augmentation: Adding fog, snow, dust, or lens flares to simulate environment stressors

This improves generalization and lets models handle real-world variations with more confidence.
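Two of the ideas above, curriculum ordering and scene-aware augmentation, can be sketched in a few lines. The difficulty scores and metadata keys are hypothetical, and the fog function is a deliberately simple uniform blend on grayscale values, not a physically based fog model.

```python
from typing import Dict, List

# Hypothetical difficulty scores; a real project would derive these from data.
DIFFICULTY: Dict[str, Dict[str, int]] = {
    "environment": {"urban": 0, "rural": 1},
    "time_of_day": {"day": 0, "night": 1},
    "weather": {"clear": 0, "fog": 1},
}


def difficulty(sample: Dict[str, str]) -> int:
    """Sum the per-attribute difficulty scores of one sample."""
    return sum(DIFFICULTY[key][sample[key]] for key in DIFFICULTY)


def curriculum_stages(samples: List[Dict[str, str]]) -> List[List[Dict[str, str]]]:
    """Group samples into stages of increasing difficulty (easiest first)."""
    stages: Dict[int, List[Dict[str, str]]] = {}
    for sample in samples:
        stages.setdefault(difficulty(sample), []).append(sample)
    return [stages[level] for level in sorted(stages)]


def add_fog(pixels: List[List[float]], density: float, fog_value: float = 0.8) -> List[List[float]]:
    """Blend grayscale pixels in [0, 1] toward a uniform fog value."""
    if not 0.0 <= density <= 1.0:
        raise ValueError("density must be between 0 and 1")
    return [[(1.0 - density) * p + density * fog_value for p in row] for row in pixels]


samples = [
    {"id": "a", "environment": "rural", "time_of_day": "night", "weather": "fog"},
    {"id": "b", "environment": "urban", "time_of_day": "day", "weather": "clear"},
    {"id": "c", "environment": "rural", "time_of_day": "day", "weather": "clear"},
    {"id": "d", "environment": "urban", "time_of_day": "night", "weather": "clear"},
]

stage_ids = [[s["id"] for s in stage] for stage in curriculum_stages(samples)]
print(stage_ids)  # [['b'], ['c', 'd'], ['a']]

foggy = add_fog([[0.0, 1.0]], density=0.5)
print(foggy)
```

In training, each stage would be introduced in order, typically while continuing to sample from earlier stages so that the model does not forget the easier cases.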

Let's Build AI That Understands Every Road 🚗🌲

Annotation is the first step toward autonomous intelligence. If we want vehicles to operate safely everywhere, then our datasets—and how we annotate them—must reflect everywhere.

- Don’t underestimate rural annotation just because it looks “simple.”

- Don’t rely too heavily on urban data just because it’s abundant.

- Do build smarter pipelines that flex with terrain, culture, and complexity.

At DataVLab, we specialize in scalable, human-in-the-loop annotation for both high-density urban scenes and nuanced rural environments. Whether you're training an ADAS system or labeling edge-case scenarios for global deployment—we're here to help.

👉 Ready to build smarter datasets? Let’s work together to annotate the roads less traveled.

Keep Exploring

Here are a few datasets and studies that bridge the gap between urban and rural training data: