Why Deep Learning Transformed Medical Image Segmentation

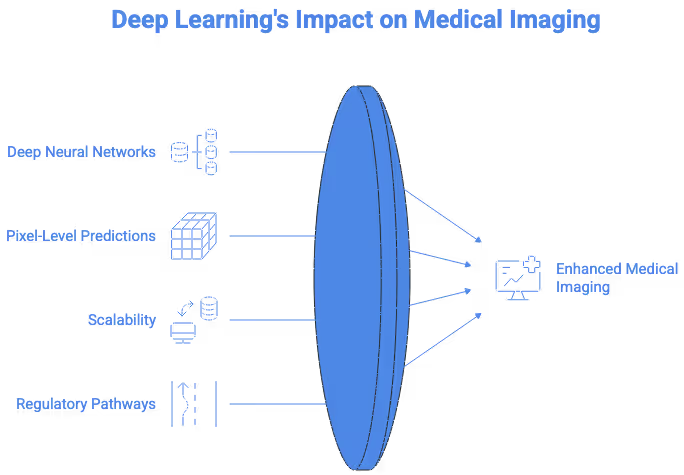

Deep learning reshaped medical image segmentation by offering a level of performance, consistency, and automation that classical computer vision methods could not achieve. Traditional segmentation relied on thresholding, region growing, or handcrafted features, which struggled with the complexity of real clinical images. Deep neural networks instead learn directly from large collections of annotated scans, enabling them to capture subtle texture changes, irregular anatomical boundaries, and rare pathological patterns. This makes them particularly effective for structures that appear differently across patients, scanners, and modalities.

Deep learning also supports pixel-level predictions, allowing models to classify every voxel in a CT or MRI volume. This level of granularity is crucial for tasks such as tumor contouring, vessel delineation, edema detection, or organ-at-risk mapping in radiotherapy. Radiologists benefit from faster workflows, while researchers gain consistent and reproducible segmentations to build on. Clinical applications are strengthened by the models' ability to generalize beyond simple morphological cues, relying instead on hierarchical and abstract visual features learned during training.

Another major advantage is scalability. Once trained, deep learning models can process thousands of studies with high consistency, supporting population-level research and large retrospective analyses. In deep learning pipelines for medical image segmentation, this scalability is essential for radiomics, longitudinal disease modelling, and clinical trials that involve large imaging datasets. For healthcare systems with increasing imaging volumes, deep learning helps reduce manual workload and supports more standardized diagnostic decisions.

Regulatory pathways also benefit from deep learning segmentation because high-quality masks provide measurable, reproducible metrics. Agencies rely on quantitative evidence when evaluating AI-based medical devices. Segmentation data therefore becomes a foundation for evidence generation and validation across diverse patient populations. The combination of precision, scalability, and clinical relevance explains why deep learning has become central to modern medical imaging workflows.

Core Architectures Powering Modern Segmentation Models

U-Net and Its Evolution Across Modalities

The U-Net architecture remains the backbone of deep learning segmentation due to its efficiency and strong inductive biases for medical data. Its encoder-decoder structure captures global context while preserving fine spatial detail through skip connections. The original U-Net was designed for biomedical microscopy, yet its principles translate extremely well to semantic segmentation of medical images across CT, MRI, ultrasound, and histopathology.
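The data flow behind a skip connection can be sketched at the level of array shapes. This is a toy illustration, not a trainable network: the learned convolutions of a real U-Net are omitted, average pooling and nearest-neighbour upsampling stand in for the encoder and decoder, and the function names are hypothetical.

```python
import numpy as np

def downsample(x):
    """2x2 average pooling on a (channels, height, width) array with even H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def upsample(x):
    """Nearest-neighbour upsampling by a factor of 2 along height and width."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def unet_level(x):
    """One encoder-decoder level: coarse context is merged with full-resolution detail."""
    skip = x                      # full-resolution features kept for the decoder
    coarse = downsample(x)        # encoder path: lower resolution, wider context
    restored = upsample(coarse)   # decoder path: back to the input resolution
    # Skip connection: concatenate fine detail and coarse context along channels.
    return np.concatenate([skip, restored], axis=0)

features = np.arange(4 * 8 * 8, dtype=float).reshape(4, 8, 8)
merged = unet_level(features)
print(merged.shape)  # channel count doubles, spatial size is preserved
```

In a real model each stage would apply learned convolutions before and after the concatenation; the sketch only shows why fine spatial detail survives the trip through the low-resolution bottleneck.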

Researchers have extended U-Net to address modality-specific challenges. For MRI, 3D U-Net variants allow full-volume processing and better handling of anisotropic voxel sizes. For CT, architectures incorporate intensity normalization and multi-scale feature extraction to capture subtle boundaries such as liver metastases or pulmonary nodules. For pathology, U-Nets adapted with attention gates help differentiate nuclei in dense tissue regions. These advancements demonstrate how foundational the U-Net family has become in medical segmentation research.

Transformer Models and Hybrid CNN-Transformer Pipelines

Transformer-based architectures introduced global self-attention into segmentation models, enabling better capture of long-range dependencies. This is particularly useful for structures with thin, branching shapes such as vessels or neural pathways. Models like UNETR and Swin-UNet integrate attention mechanisms to process large 3D volumes while mitigating the limitations of convolutional receptive fields.
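To make the idea of global self-attention concrete, here is a minimal NumPy sketch of single-head scaled dot-product attention over a sequence of patch tokens. The projection matrices are random placeholders rather than trained weights, and the function name is an assumption for illustration; it is not the UNETR or Swin-UNet implementation.

```python
import numpy as np

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over (num_tokens, dim) inputs."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    # Softmax over all tokens, shifted by the row maximum for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    # Each output token is a weighted sum over every token in the sequence,
    # regardless of how far apart the corresponding patches are in the image.
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = rng.normal(size=(6, 8))  # e.g. 6 image patches embedded in 8 dimensions
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))
output, weights = self_attention(tokens, w_q, w_k, w_v)
print(output.shape, weights.shape)
```

The attention matrix has one row per token and one column per token, which is what gives the model a receptive field covering the whole input in a single layer, at a memory cost that grows quadratically with the number of tokens.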

Transformers help overcome common failures seen in CNNs, such as over-smoothing small lesions or missing long contiguous structures. By integrating global context, these models produce more anatomically coherent masks. Hybrid architectures that combine convolutions with attention blocks often provide the best tradeoff between localization accuracy and computational efficiency. As datasets grow larger, transformer-based segmentation will continue to expand in clinical and research settings.

Researchers at institutions like the MIT CSAIL Medical Vision Group continue to refine transformer-based segmentation methods for complex anatomical structures.

Self-Supervised and Weakly Labeled Training Strategies

Medical imaging faces chronic data scarcity, and many datasets lack voxel-level labels. Self-supervised learning offers a solution by allowing models to pretrain on large volumes of unlabeled scans using reconstruction tasks or contrastive learning. This reduces dependence on manually created segmentation masks and improves generalization across institutions.

Weakly supervised methods let models learn from image-level labels, bounding boxes, or scribbles rather than full masks. While these labels contain less information, deep learning models can still extract surprisingly precise boundaries, especially when guided by anatomical priors. These approaches are increasingly important in medical image segmentation research, where acquiring detailed labels for rare diseases or complex pathologies is difficult.

How Deep Learning Learns From Medical Images

Loss Functions for Anatomical and Pathological Structures

Loss functions guide models toward anatomically accurate predictions. In medical segmentation, Dice loss remains one of the most important because it directly optimizes overlap between predicted and reference masks rather than per-voxel accuracy, so it is not dominated by the abundant background. This is vital for small structures such as tumors, aneurysms, or polyps. Cross-entropy loss complements Dice loss by encouraging correct voxel classification, while focal loss down-weights easy voxels so that models focus on hard examples.
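The three losses mentioned above can be written down in a few lines for the binary case. The following is a minimal NumPy sketch that only computes loss values (no gradients); the function names, the `dice_weight` parameter, and the smoothing constants are assumptions chosen for illustration.

```python
import numpy as np

def soft_dice_loss(probs, target, eps=1e-6):
    """1 minus the soft Dice overlap between foreground probabilities and a binary mask."""
    probs = np.asarray(probs, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    intersection = np.sum(probs * target)
    denom = np.sum(probs) + np.sum(target)
    return float(1.0 - (2.0 * intersection + eps) / (denom + eps))

def binary_cross_entropy(probs, target, eps=1e-7):
    """Mean per-voxel cross entropy for a binary segmentation."""
    probs = np.clip(np.asarray(probs, dtype=np.float64), eps, 1.0 - eps)
    target = np.asarray(target, dtype=np.float64)
    return float(np.mean(-(target * np.log(probs) + (1.0 - target) * np.log(1.0 - probs))))

def focal_loss(probs, target, gamma=2.0, eps=1e-7):
    """Cross entropy scaled by (1 - p_t)^gamma so confident, correct voxels count less."""
    probs = np.clip(np.asarray(probs, dtype=np.float64), eps, 1.0 - eps)
    target = np.asarray(target, dtype=np.float64)
    p_t = np.where(target == 1, probs, 1.0 - probs)  # probability given to the true class
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t)))

def combined_loss(probs, target, dice_weight=0.5):
    """Weighted sum of Dice and cross entropy; the 0.5 default is an arbitrary choice."""
    return dice_weight * soft_dice_loss(probs, target) + (1.0 - dice_weight) * binary_cross_entropy(probs, target)

target = np.array([[0, 1], [1, 0]], dtype=float)
probs = np.array([[0.1, 0.8], [0.6, 0.3]])
print(soft_dice_loss(probs, target), binary_cross_entropy(probs, target), focal_loss(probs, target))
```

With `gamma=0` the focal loss reduces to plain cross entropy, which is a quick sanity check when wiring these functions into a training framework.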

Boundary-aware losses address the challenge of ambiguous borders. These losses highlight edges and force the model to refine boundaries in regions where contrast is low. For tasks like cardiac segmentation or MRI tumor core delineation, boundary-sensitive losses significantly improve precision. The choice of loss function can dramatically influence a model’s behavior, especially when image characteristics vary widely across modalities.
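One simple way to emphasize edges is to up-weight cross entropy on pixels that sit next to the mask border. The sketch below does this for a 2D binary mask; it is a plain boundary-weighted cross entropy used as a stand-in, not any specific published boundary loss, and `boundary_weight` is a hypothetical tuning parameter.

```python
import numpy as np

def boundary_mask(mask):
    """Mark pixels whose 4-neighbourhood contains the opposite label (both sides of the border)."""
    mask = np.asarray(mask, dtype=bool)
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="edge")  # edge padding: the image frame is not a boundary
    boundary = np.zeros_like(mask)
    for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        neighbour = padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        boundary |= neighbour != mask
    return boundary

def boundary_weighted_bce(probs, mask, boundary_weight=4.0, eps=1e-7):
    """Cross entropy in which border pixels count (1 + boundary_weight) times as much."""
    mask = np.asarray(mask, dtype=np.float64)
    probs = np.clip(np.asarray(probs, dtype=np.float64), eps, 1.0 - eps)
    per_pixel = -(mask * np.log(probs) + (1.0 - mask) * np.log(1.0 - probs))
    weights = 1.0 + boundary_weight * boundary_mask(mask)
    return float(np.sum(weights * per_pixel) / np.sum(weights))

mask = np.zeros((5, 5))
mask[1:4, 1:4] = 1.0
prediction = mask.copy()
prediction[1, 1] = 0.0  # a mistake on the object border
print(boundary_weighted_bce(prediction, mask))
```

Under this weighting, the same mistake costs more on the border than in the interior of a structure, which pushes optimization effort toward the low-contrast edges described above.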

Data Augmentation Tailored to Medical Imaging

General augmentation strategies are insufficient for clinical imaging. Medical imaging requires modality-specific transformations that mimic real variations. In MRI workflows, intensity shifts, bias field augmentation, and motion simulation help teach models to handle scanner differences. For CT, variations in windowing, noise levels, and low-dose artifacts create more realistic inputs. Elastic deformation is particularly beneficial for tissues that naturally vary in shape, such as abdominal organs.

These augmentations increase robustness and reduce overfitting, making segmentation models more reliable across diverse imaging environments. When developing MRI segmentation pipelines, domain-specific augmentation becomes essential for consistent generalization.
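A few of the intensity augmentations described in this section can be sketched with NumPy alone. The parameter ranges below are illustrative assumptions rather than recommended values, the bias field is a deliberately crude log-linear ramp, and the function names are hypothetical.

```python
import numpy as np

def random_intensity_shift(image, rng, max_scale=0.1, max_shift=0.1):
    """Randomly rescale and offset intensities to mimic scanner or protocol differences."""
    scale = 1.0 + rng.uniform(-max_scale, max_scale)
    shift = rng.uniform(-max_shift, max_shift) * image.std()
    return image * scale + shift

def random_bias_field(image, rng, strength=0.3):
    """Multiply by a smooth, low-frequency field, a rough stand-in for MRI coil inhomogeneity."""
    axes = [np.linspace(-1.0, 1.0, n) for n in image.shape]
    grids = np.meshgrid(*axes, indexing="ij")
    coeffs = rng.uniform(-strength, strength, size=len(grids))
    field = np.exp(sum(c * g for c, g in zip(coeffs, grids)))
    return image * field

def add_gaussian_noise(image, rng, sigma=0.05):
    """Add zero-mean noise with a standard deviation relative to the image's own spread."""
    return image + rng.normal(0.0, sigma * image.std(), size=image.shape)

def augment(image, rng):
    """Apply the three intensity augmentations in sequence."""
    image = random_intensity_shift(image, rng)
    image = random_bias_field(image, rng)
    return add_gaussian_noise(image, rng)

rng = np.random.default_rng(42)
volume = rng.uniform(0.0, 1.0, size=(16, 16, 8))
print(augment(volume, rng).shape)
```

These transforms change intensities only, so the segmentation mask can be reused unchanged. Geometric augmentations such as elastic deformation must be applied identically to the image and its mask.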

Academic centers such as the Johns Hopkins Medical Image Analysis Lab have demonstrated how specialized loss functions can improve segmentation of subtle lesions.

Handling Class Imbalance and Rare Lesions

Many clinical segmentation tasks involve severe class imbalance. Tumors, microbleeds, or ischemic lesions occupy only a tiny portion of the scan. Without careful handling, models can ignore these structures entirely. Solutions include patch-based sampling, minority class oversampling, and multi-stage networks that first localize structures before segmenting them in finer detail.
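Patch-based sampling with foreground oversampling can be sketched as follows. The idea is to center a fixed fraction of training patches on labeled voxels so that small lesions are seen often enough; `foreground_prob` and the function names are assumptions for illustration, and the code assumes the patch is no larger than the volume.

```python
import numpy as np

def sample_patch_center(mask, rng, foreground_prob=0.7):
    """Pick a patch center, biased toward labeled voxels when any exist."""
    mask = np.asarray(mask) > 0
    foreground = np.argwhere(mask)
    if len(foreground) > 0 and rng.random() < foreground_prob:
        # Center the patch on a randomly chosen foreground voxel.
        return tuple(int(v) for v in foreground[rng.integers(len(foreground))])
    # Otherwise sample uniformly over the whole volume.
    return tuple(int(rng.integers(n)) for n in mask.shape)

def extract_patch(volume, center, patch_size):
    """Crop a patch around the center, shifted as needed to stay inside the volume."""
    slices = []
    for c, p, n in zip(center, patch_size, volume.shape):
        start = min(max(c - p // 2, 0), n - p)
        slices.append(slice(start, start + p))
    return volume[tuple(slices)]

rng = np.random.default_rng(0)
labels = np.zeros((32, 32, 32), dtype=np.uint8)
labels[10:12, 20:22, 5:7] = 1  # a tiny lesion in a large volume
center = sample_patch_center(labels, rng)
patch = extract_patch(labels, center, (16, 16, 16))
print(center, patch.shape, int(patch.sum()))
```

The same center should be used to crop both the image and the label volume so that the pair stays aligned.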

Rare lesion detection is especially challenging in MRI and PET. Researchers often rely on curated datasets from sources like the TCIA (The Cancer Imaging Archive) to cover a broader distribution of disease types. Advanced sampling strategies help ensure the model dedicates enough capacity to clinically significant but small structures without compromising performance on large organs.

Applying Deep Learning to MRI, CT, and Multimodal Workflows

Deep Learning Workflows for MRI Segmentation

MRI poses unique challenges for segmentation because intensity values are not standardized across scanners or protocols. Deep learning models must therefore learn to work with variable contrasts, including T1, T2, FLAIR, diffusion imaging, and post-contrast sequences. Intensity normalization and histogram matching help stabilize model behavior, but architectural adaptations also play an important role.
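As a concrete example of intensity normalization, the sketch below applies percentile clipping followed by z-score scaling within a foreground region. Treating zero-valued voxels as background and the specific percentiles are assumptions; real pipelines often use a brain or body mask instead. The function name is hypothetical.

```python
import numpy as np

def zscore_normalize(volume, mask=None, clip_percentiles=(0.5, 99.5)):
    """Clip extreme intensities, then scale to zero mean and unit variance inside the mask."""
    volume = np.asarray(volume, dtype=np.float64)
    if mask is None:
        mask = volume > 0  # assumption: background voxels are exactly zero
    low, high = np.percentile(volume[mask], clip_percentiles)
    clipped = np.clip(volume, low, high)
    region = clipped[mask]
    std = region.std()
    return (clipped - region.mean()) / (std if std > 0 else 1.0)

rng = np.random.default_rng(0)
scan_a = rng.uniform(1.0, 2.0, size=(8, 8, 8))
scan_b = 3.0 * scan_a + 100.0  # same anatomy, different intensity scale
print(np.allclose(zscore_normalize(scan_a), zscore_normalize(scan_b)))
```

Because both steps are invariant to a positive rescaling and offset of the intensities, two acquisitions of the same anatomy that differ only in gain and baseline map to the same normalized volume.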

For tasks such as brain tumor segmentation, models must identify edema, necrotic core, and enhancing tissue, each with distinct MRI signatures. Deep learning excels by learning multi-channel fusion across MRI sequences, which improves diagnostic reliability. Motion artifacts, coil variations, and sequence-specific noise require models to develop robust representations. Because MRI is widely used in neurology and oncology, deep learning segmentation continues to grow rapidly in this modality. MRI datasets hosted on platforms such as OpenNeuro are widely used to evaluate deep learning segmentation methods across multiple contrasts and acquisition conditions.

CT-Based Organ and Lesion Segmentation

CT imaging offers consistent intensity values via Hounsfield units, but segmentation remains challenging due to low-contrast boundaries between adjacent soft tissues. Deep learning models address this using multi-scale feature extraction and attention mechanisms that emphasize organ-specific textures. Models trained on abdominal CT must distinguish liver, pancreas, gallbladder, spleen, and vasculature despite overlapping intensities.
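Because Hounsfield units are calibrated, CT preprocessing often starts with a fixed intensity window that stretches the soft-tissue range before the data reaches the network. The sketch below shows the arithmetic; the center and width used in the example are commonly quoted soft-tissue values taken here as an assumption, and the function name is hypothetical.

```python
import numpy as np

def apply_ct_window(hu, center, width):
    """Clip Hounsfield units to [center - width/2, center + width/2] and rescale to [0, 1]."""
    low = center - width / 2.0
    high = center + width / 2.0
    return (np.clip(np.asarray(hu, dtype=np.float64), low, high) - low) / (high - low)

# Air (-1000 HU), soft tissue (40 HU), and dense bone (1000 HU) under a 40/400 window.
samples = np.array([-1000.0, 40.0, 1000.0])
print(apply_ct_window(samples, center=40.0, width=400.0))  # → [0.  0.5 1. ]
```

Varying `center` and `width` slightly at training time is one way to implement the windowing augmentation mentioned earlier in this article.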

High-resolution CT is essential for lung lesion detection, emphysema quantification, and airway segmentation. Deep learning models learn subtle density differences that classical algorithms could not capture. The reproducibility of CT makes it ideal for training large-scale segmentation models across institutions, especially when researchers leverage databases such as NIH imaging repositories.

Cross-Modality Learning and Multimodal Fusion

Combining information from multiple modalities enhances segmentation accuracy for complex diseases. Joint CT-MRI modeling improves tumor delineation for liver and prostate cancer. PET-MRI fusion enhances metabolic and structural interpretation in neuro-oncology. Deep learning facilitates this by learning unified representations that capture complementary information.

Cross-modality fusion can involve early fusion of raw inputs, mid-layer fusion of features, or late-stage ensemble methods. These techniques reduce ambiguity and improve sensitivity in regions where one modality is insufficient. Multimodal segmentation continues to expand as hospitals adopt hybrid scanners and more comprehensive imaging protocols.
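Early and late fusion are easy to contrast in code. In this sketch, early fusion stacks co-registered volumes as input channels for a single model, while late fusion averages the probability maps produced by separate per-modality models. Registration is assumed to have happened already, and the function names are hypothetical.

```python
import numpy as np

def early_fusion(ct, mri):
    """Stack two co-registered volumes as channels: output shape (2, D, H, W)."""
    if ct.shape != mri.shape:
        raise ValueError("volumes must be resampled to the same grid before fusion")
    return np.stack([ct, mri], axis=0)

def late_fusion(prob_maps, weights=None):
    """Combine per-modality foreground probabilities with an optionally weighted average."""
    return np.average(np.stack(prob_maps, axis=0), axis=0, weights=weights)

ct = np.zeros((4, 4, 4))
mri = np.ones((4, 4, 4))
print(early_fusion(ct, mri).shape)

ct_probs = np.full((4, 4, 4), 0.2)   # placeholder output of a CT-only model
mri_probs = np.full((4, 4, 4), 0.8)  # placeholder output of an MRI-only model
print(late_fusion([ct_probs, mri_probs], weights=[3, 1])[0, 0, 0])
```

Mid-layer fusion sits between the two: each modality gets its own encoder, and the feature maps are concatenated inside the network instead of at the input or the output.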

Clinical Use Cases Enhanced by Deep Learning Segmentation

Longitudinal Tracking and Disease Progression Modeling

One of the most impactful applications of deep learning segmentation is longitudinal analysis. By segmenting structures across multiple timepoints, clinicians can quantify how tumors shrink or grow, how lesions evolve, or how degenerative diseases progress. Deep learning increases consistency, reducing biases that arise when radiologists manually segment scans months apart.
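The quantification step behind longitudinal tracking is simple once masks exist: count labeled voxels, multiply by the physical voxel size, and compare timepoints. The sketch below assumes binary masks and voxel spacing in millimetres; the function names are hypothetical.

```python
import numpy as np

def mask_volume_ml(mask, spacing_mm):
    """Volume of a binary mask in millilitres, given voxel spacing in millimetres."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return float(np.count_nonzero(mask)) * voxel_mm3 / 1000.0  # 1 mL = 1000 mm^3

def percent_change(baseline_ml, followup_ml):
    """Relative volume change between two timepoints, in percent."""
    if baseline_ml == 0:
        raise ValueError("baseline volume is zero; relative change is undefined")
    return 100.0 * (followup_ml - baseline_ml) / baseline_ml

baseline = np.zeros((20, 20, 20), dtype=bool)
baseline[:10, :10, :10] = True      # 1000 voxels
followup = np.zeros_like(baseline)
followup[:10, :10, :5] = True       # 500 voxels

v0 = mask_volume_ml(baseline, (1.0, 1.0, 1.0))
v1 = mask_volume_ml(followup, (1.0, 1.0, 1.0))
print(v0, v1, percent_change(v0, v1))  # → 1.0 0.5 -50.0
```

Using the spacing stored with each scan matters here, because follow-up studies are often acquired with a different slice thickness than the baseline.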

This capability is vital in oncology, neurodegeneration, musculoskeletal disease, and cardiology. It also supports large-scale observational studies, enabling researchers to model disease trajectories with greater statistical reliability. Longitudinal segmentation is becoming a core component of imaging-based biomarkers.

Automated Biomarker Extraction

Deep learning segmentation supports quantitative imaging biomarkers by enabling precise volumetric and shape-based measurements. These biomarkers can predict prognosis, treatment response, or disease subtype. For example, segmentation assists in extracting radiomics features for glioma classification or hepatic tumor aggressiveness.

Segmentation provides stable, repeatable measurements that improve downstream predictive modeling. Clinical trials increasingly use automated segmentation to standardize metrics across large patient cohorts. As biomarker research matures, segmentation will continue to underpin the accuracy of these emerging diagnostic tools.

Decision Support for Interventional and Robotic Procedures

Modern surgical and interventional systems rely on high-fidelity 3D models of anatomy. Deep learning segmentation provides these models rapidly, supporting minimally invasive procedures, robotic navigation, and real-time imaging feedback. In cardiology, segmentation aids catheter placement and guides structural heart interventions. In neurosurgery, accurate segmentation of cortical and subcortical structures reduces risk during resection or biopsy.

These applications require extremely precise boundaries, especially near critical structures. Deep learning models trained on carefully curated datasets can reach the precision needed for clinical use, provided they are validated for the specific procedure. The integration of segmentation into operating rooms is expected to expand significantly as robotic systems become more autonomous. Clinical research presented through organizations like the RSNA shows that deep learning segmentation improves reproducibility in radiology workflows.

Challenges Unique to Deep Learning Segmentation

Domain Shift and Model Drift in Clinical Deployments

Deep learning segmentation models often degrade when exposed to new scanners, protocols, or patient demographics. Domain shift is unavoidable in real healthcare environments, and models must be monitored to prevent drift. Techniques such as domain adaptation, test-time augmentation, and continual learning help maintain performance over time.

Hospitals face additional challenges in identifying when retraining is required. Monitoring systems must track confidence scores, error patterns, and clinical edge cases. Without continuous oversight, even high-performing models can fail silently in production.
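One lightweight monitoring signal is a comparison between the intensity distribution of incoming scans and a reference sample from the training data. The sketch below uses the population stability index (PSI) for this. It is only one possible drift statistic, the bin count is arbitrary, and any alert threshold would need to be calibrated on local data.

```python
import numpy as np

def population_stability_index(reference, incoming, bins=20, eps=1e-6):
    """PSI between two intensity samples, using histogram bins defined on the reference."""
    reference = np.ravel(reference)
    incoming = np.ravel(incoming)
    edges = np.histogram_bin_edges(reference, bins=bins)
    # Clip incoming values so out-of-range intensities fall into the outermost bins.
    clipped = np.clip(incoming, edges[0], edges[-1])
    ref_counts, _ = np.histogram(reference, bins=edges)
    inc_counts, _ = np.histogram(clipped, bins=edges)
    ref_frac = ref_counts / ref_counts.sum() + eps
    inc_frac = inc_counts / inc_counts.sum() + eps
    return float(np.sum((inc_frac - ref_frac) * np.log(inc_frac / ref_frac)))

rng = np.random.default_rng(0)
training_intensities = rng.normal(0.0, 1.0, size=5000)
same_scanner = rng.normal(0.0, 1.0, size=5000)
new_scanner = rng.normal(2.0, 1.0, size=5000)  # simulated shift after a scanner change
print(population_stability_index(training_intensities, same_scanner))
print(population_stability_index(training_intensities, new_scanner))
```

A statistic like this only detects changes in the input data. It complements, but does not replace, periodic checks of segmentation quality against expert review.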

Distribution Robustness Across Hospitals and Devices

Clinical imaging workflows involve immense variability in acquisition settings. Segmentation models trained on a single hospital’s data rarely generalize without careful preprocessing, augmentation, and evaluation. Large multi-site datasets from sources like MICCAI challenges and Radiopaedia provide valuable diversity, but models still require domain-specific tuning.

Robust segmentation solutions must handle differences in contrast agents, slice thickness, scanner brands, and reconstruction kernels. Researchers invest significant effort in harmonizing data and designing architectures capable of adapting to heterogeneous imaging environments.

Explainability and Uncertainty Quantification for Clinical Trust

Segmentation masks must be clinically interpretable, especially when used to guide diagnosis or treatment. Deep learning decisions are inherently opaque, so researchers adopt methods such as uncertainty maps, Bayesian inference, or ensemble predictions to quantify confidence. These tools help radiologists understand which regions require manual review and which predictions can be trusted.
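An ensemble-based uncertainty map can be computed from the foreground probabilities of several models (or several stochastic passes of one model). In this sketch, the entropy of the averaged prediction serves as the uncertainty score; the review threshold is a hypothetical value that would need tuning per task.

```python
import numpy as np

def ensemble_uncertainty(prob_maps, eps=1e-7):
    """Return the mean foreground probability and its binary entropy in bits per voxel."""
    mean_prob = np.mean(np.stack(prob_maps, axis=0), axis=0)
    p = np.clip(mean_prob, eps, 1.0 - eps)
    entropy = -(p * np.log2(p) + (1.0 - p) * np.log2(1.0 - p))
    return mean_prob, entropy

def flag_for_review(entropy, threshold=0.5):
    """Mark voxels whose uncertainty exceeds the threshold for manual inspection."""
    return entropy > threshold

# Two ensemble members agree on the first voxel and disagree on the second.
member_a = np.array([0.99, 0.9])
member_b = np.array([0.99, 0.1])
mean_prob, entropy = ensemble_uncertainty([member_a, member_b])
print(mean_prob, flag_for_review(entropy))  # → [0.99 0.5 ] [False  True]
```

Entropy is highest (1 bit) when the averaged probability is 0.5, which typically happens where members disagree or where every member is unsure, for example along low-contrast boundaries.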

Explainability techniques also assist regulatory approval, as agencies require evidence that models behave consistently across populations. Transparent segmentation pipelines increase clinician trust and reduce barriers to real-world adoption.

Emerging Research Directions

Foundation Models for Medical Imaging Segmentation

The rise of foundation models trained on massive multimodal datasets is transforming medical imaging. These models learn general-purpose visual features from CT, MRI, ultrasound, and histopathology, enabling strong performance even with limited fine-tuning. Foundation models show promising generalization across rare diseases, new scanners, and multiple clinical tasks.

Researchers are exploring architectures that integrate segmentation with classification, detection, and report generation. These models may eventually serve as universal backbones for medical imaging AI, simplifying development across specialties.

Federated and Privacy-Preserving Training

Federated learning allows hospitals to collaborate on model training without sharing raw data. This preserves privacy while expanding dataset diversity, helping segmentation models generalize more effectively. Privacy-preserving strategies such as differential privacy or secure aggregation further protect sensitive information.
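The aggregation step at the heart of many federated setups is a weighted average of model parameters, often called federated averaging. The sketch below shows only that step, under the assumption that each site returns a dictionary of NumPy arrays with identical keys and shapes. Secure aggregation, differential privacy, and the local training loop are not shown, and the names are hypothetical.

```python
import numpy as np

def federated_average(site_weights, site_sizes):
    """Average per-site parameters, weighting each site by its number of training cases."""
    total = float(sum(site_sizes))
    averaged = {}
    for name in site_weights[0]:
        averaged[name] = sum(
            weights[name] * (size / total)
            for weights, size in zip(site_weights, site_sizes)
        )
    return averaged

# Two hospitals send locally trained parameters; only these arrays leave each site.
hospital_a = {"conv1": np.array([1.0, 1.0])}
hospital_b = {"conv1": np.array([3.0, 3.0])}
global_model = federated_average([hospital_a, hospital_b], site_sizes=[100, 300])
print(global_model["conv1"])  # → [2.5 2.5]
```

Weighting by dataset size gives larger sites more influence. Equal weighting is an alternative when small sites hold the rare cases the model most needs to learn.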

Federated segmentation is especially valuable for rare diseases, where no single institution has enough labeled data. Several federated research projects already demonstrate promising results in brain tumor segmentation and organ delineation.

Automated Model Adaptation in Real Clinical Settings

As deployment becomes more common, researchers are developing methods for automated adaptation. These systems monitor incoming scans, detect shifts in distribution, and adjust model parameters as needed without full retraining. Real-time adaptation helps maintain performance when hospitals upgrade scanners or adopt new protocols.

This emerging area aims to make segmentation models more resilient and reduce operational burden on clinical AI teams. Adaptive segmentation systems may eventually become standard infrastructure in radiology IT environments.

Working With Deep Learning Segmentation in Practice

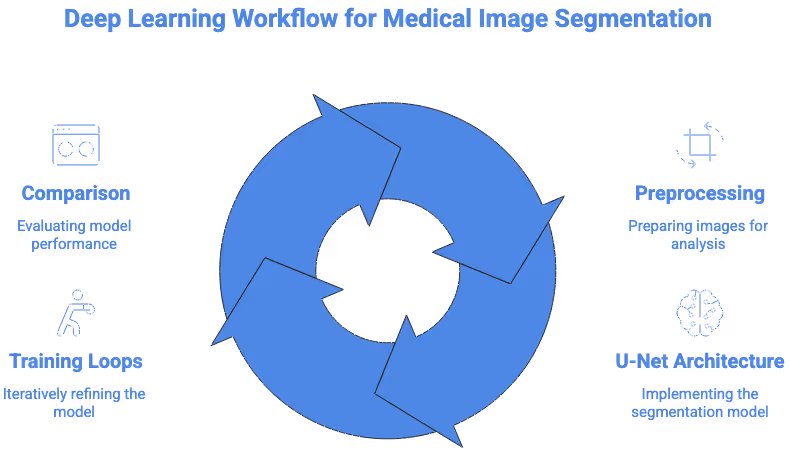

Creating High-Fidelity Ground Truth Datasets for Deep Models

Deep learning segmentation depends on high-quality ground truth labels. Radiologists, specialized annotators, and quality control reviewers collaborate to produce masks that are consistent and clinically accurate. Clear guidelines, reference atlases, and multi-reviewer workflows help reduce variability. Without rigorous labeling, even the best architectures fail to reach clinical-grade performance.

Teams often combine manual review with algorithmic assistance to accelerate production. Hybrid workflows improve consistency and reduce the burden on clinical experts while maintaining strong reliability across datasets.

Multi-Layer Quality Review for Training Stability

Quality control processes ensure segmentation masks reflect correct anatomical boundaries. Cross-review between clinicians and annotators, adjudication of disagreements, and consensus protocols help prevent noise from entering the dataset. Deep learning is sensitive to inconsistencies, so even small errors can destabilize training. QC pipelines therefore play a critical role in producing reliable models.
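Part of this review process can be automated. The sketch below measures pairwise Dice agreement between annotators, flags cases that fall under an agreement threshold for adjudication, and builds a majority-vote consensus mask. The 0.8 threshold and the function names are assumptions for illustration; real projects set thresholds per structure.

```python
import itertools
import numpy as np

def dice_score(a, b):
    """Dice overlap between two binary masks; two empty masks count as full agreement."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else float(2.0 * np.logical_and(a, b).sum() / denom)

def needs_adjudication(masks, min_dice=0.8):
    """True if any pair of annotators agrees less than the threshold."""
    return any(dice_score(a, b) < min_dice for a, b in itertools.combinations(masks, 2))

def majority_vote(masks):
    """Consensus mask: a voxel is foreground if more than half of the annotators marked it."""
    stacked = np.stack([np.asarray(m, dtype=bool) for m in masks], axis=0)
    return stacked.sum(axis=0) * 2 > len(masks)

annotator_1 = np.array([1, 1, 0, 0])
annotator_2 = np.array([1, 1, 1, 0])
annotator_3 = np.array([1, 0, 0, 0])
masks = [annotator_1, annotator_2, annotator_3]
print(needs_adjudication(masks), majority_vote(masks).astype(int))  # → True [1 1 0 0]
```

A majority vote is a reasonable default for routine cases, while flagged cases are better resolved by a senior reviewer than by voting.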

Clinical datasets also require metadata management, de-identification, and adherence to privacy regulations. These workflows are essential for models intended for regulatory approval or clinical use.

Preparing Datasets for Regulatory-Grade AI Workflows

Segmentation used for medical devices must meet strict quality and documentation standards. Label provenance, reviewer credentials, annotation guidelines, and QC logs must be traceable. Regulators also expect evidence that segmentation generalizes across diverse populations and imaging conditions. Deep learning pipelines that target real clinical deployment require meticulous dataset governance and versioning.

Ensuring fairness, transparency, and robustness is essential for patient safety. Segmentation datasets underpin many of the decisions that shape AI-driven diagnosis and treatment. Guidelines from organizations like NIST help ensure that segmentation models remain reliable across institutions and evolving imaging protocols.

If You Are Developing a Medical Segmentation AI System

If you are working on a clinical AI project and need high-quality segmentation, our team at DataVLab would be glad to support you. We work with medically trained annotators, rigorous multi-step quality control, and scalable production workflows tailored to medical imaging. Feel free to reach out and share your project goals. We would be happy to help you move your imaging AI forward.